Key Takeaways

- The creator economy faces a content gap: audiences consume content roughly 100 times faster than humans can produce it.

- Text-to-image AI uses diffusion models that iteratively remove noise from random static. CLIP encodes the text prompt, and a U-Net (or, in newer models, a transformer) uses that encoding to guide each denoising step.

- LoRAs, ControlNet, and IP-Adapters keep characters consistent and photorealistic using as few as three reference photos.

- Newer models such as Stable Diffusion 3 and FLUX1.1 Pro reduce uncanny valley issues with better training and more natural compositions.

- Sozee lets creators turn a few photos into infinite consistent content. Sign up with Sozee today to solve your content crisis.

The Creator Content Crunch in the 2026 Economy

Creators now face a simple math problem: demand for content outpaces human production capacity by roughly 100 to 1. This imbalance burns out individual creators who must constantly shoot, post, and engage to stay visible. Production tasks like lighting, locations, and wardrobe changes eat hours for only minutes of usable content. The result is a constant feeling of being behind, even for full-time professionals.

Agencies that manage multiple creators hit hard limits when talent gets sick, travels, or takes time off. Content calendars stall, and revenue pauses when there is nothing new to post. Virtual influencer projects promise consistent output but often fail when character appearance shifts between posts. Audiences quickly notice these inconsistencies and lose trust.

The financial hit is real. Many creators lose thousands of dollars during content gaps. Agencies struggle to retain clients when posting schedules slip. AI content generation is projected to grow 300% by 2026, which shows how strong the demand is for tools that remove human production bottlenecks.

Text-to-image AI finally offers a practical answer by turning content creation into a scalable, always-on process.

Diffusion Models: How Text Prompts Become Photorealistic Images

Text-to-image AI relies on diffusion models that turn random noise into clear images through many small refinement steps. Imagine television static slowly resolving into a sharp photograph. That gradual reveal mirrors how diffusion models work at each timestep.

The process starts with forward diffusion, where Gaussian noise is gradually added to training images until they become pure static. The model then learns the reverse process and predicts how to remove noise at every step. During generation, the system begins with random noise and repeatedly denoises it using what it learned.
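Here is a minimal sketch of the forward process. It assumes the linear noise schedule from the original DDPM paper (1,000 steps, β rising from 0.0001 to 0.02). The closed form lets training jump to any timestep t in one shot: x_t = √ᾱ_t · x₀ + √(1 − ᾱ_t) · ε. The sampled noise ε is what the denoiser learns to predict. Plain Python lists stand in for image tensors, and the function names are illustrative, not taken from any library.

```python
import math
import random


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Variance of the Gaussian noise added at each forward step (linear schedule)."""
    if num_steps < 2:
        return [beta_start] * num_steps
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: list[float]) -> list[float]:
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the share of signal surviving to step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: list[float], t: int, alpha_bars: list[float],
              rng: random.Random) -> tuple[list[float], list[float]]:
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return x_t, eps  # eps is the training target the denoiser learns to predict


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
pixels = [0.8, -0.2, 0.5, 0.1]  # toy "image" values scaled to roughly [-1, 1]
slightly_noisy, _ = add_noise(pixels, 10, alpha_bars, rng)
pure_static, _ = add_noise(pixels, 999, alpha_bars, rng)
```

With this schedule, ᾱ falls from 0.9999 at the first step to below 0.0001 at the last. That makes the final sample almost pure noise, which is the "pure static" endpoint the forward process aims for.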

Latent Space Compression with VAEs

Latent space is the compressed mathematical space where diffusion actually happens. Instead of working on full-resolution pixels, modern systems use a Variational Autoencoder (VAE) to compress images into a smaller latent representation. This approach cuts compute costs while preserving the meaning and structure of the image.
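The arithmetic below makes the savings concrete by counting values per image. It assumes 8× spatial downsampling with 4 latent channels, as in the Stable Diffusion 1.x/2.x and SDXL autoencoders. It also covers Stable Diffusion 3's autoencoder, which keeps 8× downsampling but uses 16 channels. The function name is illustrative.

```python
def latent_compression(height: int, width: int, pixel_channels: int = 3,
                       downsample: int = 8, latent_channels: int = 4) -> tuple[int, int, float]:
    """Return (pixel values, latent values, compression ratio) for one image."""
    if height % downsample or width % downsample:
        raise ValueError("image size must be divisible by the downsampling factor")
    pixel_values = height * width * pixel_channels
    latent_values = (height // downsample) * (width // downsample) * latent_channels
    return pixel_values, latent_values, pixel_values / latent_values


for name, channels in [("4-channel VAE (SD 1.x/2.x, SDXL)", 4), ("16-channel VAE (SD3)", 16)]:
    pixels, latents, ratio = latent_compression(1024, 1024, latent_channels=channels)
    print(f"{name}: {pixels:,} pixel values -> {latents:,} latent values ({ratio:.0f}x smaller)")
```

For a 1024×1024 RGB image, this gives 3,145,728 pixel values. The 4-channel latent holds 65,536 values (48× smaller), and the 16-channel latent holds 262,144 (12× smaller). SD3 trades some compression for more detail per latent.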

U-Net Denoising and Modern Inference Speed

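In Stable Diffusion 1.x, 2.x, and SDXL, a U-Net neural network acts as the denoising engine that predicts which noise to remove at each step. It trains on huge datasets of image and text pairs, learning how visual patterns map to language. Stable Diffusion 3 replaces the U-Net with a Multimodal Diffusion Transformer (MMDiT) trained with rectified flow, which straightens the path from noise to image and speeds up the denoising loop. Stability AI reported that its 8-billion-parameter SD3 model generates a 1024×1024 image in about 34 seconds on an RTX 4090 using 50 sampling steps.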
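Rectified flow is the training objective behind Stable Diffusion 3. It places each noisy sample on a straight line between data and noise, x_t = (1 − t)·x₀ + t·ε, and trains the network to predict the velocity ε − x₀ along that line. Generation then integrates backward from t = 1 (noise) to t = 0 (image).

In the minimal sketch below, a hypothetical "oracle" that already knows the clean values x₀ replaces the trained network. This isolates the sampler: along a perfectly straight path, Euler integration is exact, so even one step lands on the image. Real networks only approximate the velocity, so practical samplers still take tens of steps.

```python
import random


def oracle_velocity(x_t: list[float], t: float, x0: list[float]) -> list[float]:
    """Hypothetical stand-in for the trained network: on a straight path, v = (x_t - x0) / t = eps - x0."""
    return [(x - target) / t for x, target in zip(x_t, x0)]


def euler_sample(noise: list[float], x0: list[float], num_steps: int) -> list[float]:
    """Integrate from t = 1 (pure noise) to t = 0 (image) with fixed-size Euler steps."""
    x = list(noise)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = 1.0 - i * dt  # current time; stays above zero inside the loop
        v = oracle_velocity(x, t, x0)
        x = [xi - dt * vi for xi, vi in zip(x, v)]  # move from t to t - dt
    return x


rng = random.Random(42)
clean = [0.8, -0.2, 0.5, 0.1]  # pretend latent values of a finished image
noise = [rng.gauss(0.0, 1.0) for _ in clean]
sample = euler_sample(noise, clean, num_steps=4)
```

Here `sample` matches `clean` up to floating-point rounding for any number of steps.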

5 Core Steps to Photorealistic Images:

- Text Encoding: CLIP converts your prompt into numerical embeddings.

- Noise Initialization: The model starts from pure random noise.

- Iterative Denoising: The U-Net (a diffusion transformer in newer models such as Stable Diffusion 3) removes noise step by step, guided by the text embeddings.

- Latent Decoding: The VAE decoder turns the latent image back into pixels.

- Upscaling: An upscaler sharpens and enlarges the final image.
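The five steps can be wired together as sketched below. Every component is a hypothetical, deliberately tiny stand-in: a hash instead of CLIP, a shrink-toward-target loop instead of a trained U-Net, and pixel repetition instead of a VAE decoder and a learned upscaler. The sketch therefore shows only the order of operations and how the data grows from a small latent to the final image.

```python
import hashlib
import random

LATENT_SIZE = 4  # hypothetical: a 4 x 4 single-channel latent keeps the example tiny
DOWNSAMPLE = 2   # hypothetical VAE factor (real Stable Diffusion autoencoders use 8)
UPSCALE = 2      # hypothetical upscaler factor


def encode_text(prompt: str, dim: int = 8) -> list[float]:
    """Step 1 stand-in for CLIP: a deterministic pseudo-embedding derived from the prompt's hash."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [b / 255.0 for b in digest[:dim]]


def init_noise(seed: int) -> list[list[float]]:
    """Step 2: start from pure Gaussian noise in latent space."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(LATENT_SIZE)] for _ in range(LATENT_SIZE)]


def denoise(latent: list[list[float]], text_emb: list[float], steps: int) -> list[list[float]]:
    """Step 3 stand-in for the denoising loop: each step pulls values halfway toward a text-dependent target."""
    target = sum(text_emb) / len(text_emb)
    for _ in range(steps):
        latent = [[v + 0.5 * (target - v) for v in row] for row in latent]
    return latent


def decode(latent: list[list[float]]) -> list[list[float]]:
    """Step 4 stand-in for the VAE decoder: expand each latent cell into a DOWNSAMPLE x DOWNSAMPLE block."""
    return [[v for v in row for _ in range(DOWNSAMPLE)] for row in latent for _ in range(DOWNSAMPLE)]


def upscale(image: list[list[float]]) -> list[list[float]]:
    """Step 5: nearest-neighbour enlargement (real upscalers are learned models)."""
    return [[v for v in row for _ in range(UPSCALE)] for row in image for _ in range(UPSCALE)]


def generate(prompt: str, seed: int = 0, steps: int = 20) -> list[list[float]]:
    text_emb = encode_text(prompt)
    latent = init_noise(seed)
    latent = denoise(latent, text_emb, steps)
    return upscale(decode(latent))


image = generate("golden hour selfie", seed=1)  # a 16 x 16 grid of values
```

The same prompt and seed always reproduce the same output, which mirrors why real tools expose a seed setting.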

Recent upgrades such as FLUX1.1 Pro, which delivers 3x faster inference with better composition and consistency, make real-time generation realistic for daily creator workflows.

CLIP and Text Conditioning for Creator-Ready Prompts

CLIP (Contrastive Language-Image Pre-training) connects written prompts to visual concepts. It learned this mapping by training on hundreds of millions of image and caption pairs from the internet (about 400 million for the original OpenAI model). When you type a phrase like “golden hour lighting,” CLIP turns that phrase into embedding vectors the diffusion model can follow.
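CLIP scores how well a caption fits an image using the cosine similarity of their embeddings. During training, a softmax over each batch pushes every matching pair above the mismatched ones. The sketch below uses made-up 3-dimensional embeddings (real CLIP embeddings have hundreds of dimensions) and a temperature of 0.07, the initial value reported in the CLIP paper. The helper names are illustrative.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def match_probabilities(query: list[float], candidates: list[list[float]],
                        temperature: float = 0.07) -> list[float]:
    """CLIP-style matching: softmax over cosine similarities divided by a temperature."""
    return softmax([cosine(query, c) / temperature for c in candidates])


def contrastive_loss(image_embs: list[list[float]], text_embs: list[list[float]],
                     temperature: float = 0.07) -> float:
    """Symmetric contrastive loss: pair i is the true match; average image->text and text->image cross-entropy."""
    n = len(image_embs)
    total = 0.0
    for i in range(n):
        total -= math.log(match_probabilities(image_embs[i], text_embs, temperature)[i])
        total -= math.log(match_probabilities(text_embs[i], image_embs, temperature)[i])
    return total / (2 * n)


# Made-up 3-D embeddings: a sunset photo and three candidate captions.
photo = [0.9, 0.1, 0.2]
captions = {"golden hour lighting": [0.8, 0.2, 0.1],
            "harsh flash": [0.1, 0.9, 0.3],
            "neon night street": [0.1, 0.2, 0.9]}
probs = match_probabilities(photo, list(captions.values()))
best = max(zip(captions, probs), key=lambda pair: pair[1])[0]  # "golden hour lighting"
```

Training lowers `contrastive_loss` on correctly paired batches, which is what pulls matching text and image embeddings together.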

Cross-attention layers inside U-Net then read these text vectors at every denoising step. The model focuses on the most relevant parts of the prompt while it updates the image. Classifier-free guidance strengthens this link by comparing guided and unguided predictions and pushing the result closer to your text description.
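Here is a minimal sketch of both mechanisms on toy vectors. Cross-attention lets each image position (the query) take a softmax-weighted mix of the prompt tokens, scored by scaled dot products. Classifier-free guidance then extrapolates from the unconditioned noise prediction toward the text-conditioned one by a guidance scale w. Real models first project features through learned query, key, and value matrices and use many attention heads. Those are omitted here, and the numbers are made up.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query: list[float], keys: list[list[float]],
                    values: list[list[float]]) -> tuple[list[float], list[float]]:
    """One image position reads the prompt: weights = softmax(q . k / sqrt(d)), output = weighted sum of values."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * value[j] for w, value in zip(weights, values)) for j in range(len(values[0]))]
    return output, weights


def classifier_free_guidance(eps_uncond: list[float], eps_cond: list[float],
                             guidance_scale: float) -> list[float]:
    """eps = eps_uncond + w * (eps_cond - eps_uncond): push the prediction further toward the prompt."""
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Toy prompt with two tokens (think "golden" and "flash"); the query is closest to the first.
token_keys = [[1.0, 0.0], [0.0, 1.0]]
token_values = [[10.0, 0.0], [0.0, 10.0]]
attended, attn_weights = cross_attention([2.0, 0.5], token_keys, token_values)

guided = classifier_free_guidance(eps_uncond=[0.0, 1.0], eps_cond=[1.0, 1.0], guidance_scale=7.5)
```

Here `guided` is `[7.5, 1.0]`. The component where the two predictions disagree is amplified, and the component where they agree is left alone. With w = 1 the result is just the conditioned prediction. Larger values follow the prompt more strictly; Hugging Face diffusers' Stable Diffusion pipeline defaults to 7.5. The trade-off is less variety and, when pushed too far, oversaturated images.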

Richer Prompt Understanding for Social Platforms

Stable Diffusion 3 uses three text encoders (CLIP, OpenCLIP, and T5-XXL) to improve prompt understanding and in-image text rendering. This stack captures more nuance in complex prompts such as “Instagram-ready golden hour selfie with soft shadows.”
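The way three encoders feed one model can be sketched as tensor bookkeeping. The sizes below follow our reading of the Stable Diffusion 3 paper and should be treated as assumptions:

- CLIP ViT-L/14 at 768 features
- OpenCLIP ViT-bigG/14 at 1280 features
- T5-XXL at 4096 features
- 77 tokens per encoder (some implementations allow longer T5 sequences)

Zero-filled lists stand in for real encoder outputs, and the function names are illustrative.

```python
def concat_features(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Join two token sequences feature-wise: (n, d1) and (n, d2) give (n, d1 + d2)."""
    if len(a) != len(b):
        raise ValueError("sequences must have the same number of tokens")
    return [row_a + row_b for row_a, row_b in zip(a, b)]


def zero_pad(tokens: list[list[float]], width: int) -> list[list[float]]:
    """Right-pad every token vector with zeros up to `width` features."""
    return [row + [0.0] * (width - len(row)) for row in tokens]


def build_context(clip_l: list[list[float]], clip_g: list[list[float]],
                  t5: list[list[float]]) -> list[list[float]]:
    """Per-token conditioning: CLIP features side by side, padded to T5 width, then T5 tokens appended."""
    clip = concat_features(clip_l, clip_g)
    width = len(t5[0])
    if len(clip[0]) > width:
        raise ValueError("combined CLIP features are wider than the T5 features")
    return zero_pad(clip, width) + t5  # concatenation along the sequence axis


def pooled_vector(clip_l_pooled: list[float], clip_g_pooled: list[float]) -> list[float]:
    """Global conditioning vector: the two CLIP pooled embeddings concatenated."""
    return clip_l_pooled + clip_g_pooled


def zeros(rows: int, cols: int) -> list[list[float]]:
    return [[0.0] * cols for _ in range(rows)]


TOKENS, CLIP_L, CLIP_G, T5 = 77, 768, 1280, 4096  # assumed sizes, see the lead-in
context = build_context(zeros(TOKENS, CLIP_L), zeros(TOKENS, CLIP_G), zeros(TOKENS, T5))
pooled = pooled_vector([0.0] * CLIP_L, [0.0] * CLIP_G)
print(len(context), len(context[0]), len(pooled))  # 154 4096 2048
```

Under these assumptions, the denoiser sees 154 text tokens of width 4096 plus one 2048-wide pooled vector. CLIP's tokens carry the style vocabulary, and T5's longer-range language understanding helps with detailed prompts and spelled-out text.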

Creators can now describe platform-specific looks directly in the prompt. Phrases like “TikTok lighting” or “OnlyFans bedroom setup” map to familiar visual styles that match audience expectations.

From Uncanny to Natural: Realism and Consistency Fixes

Hyper-realistic output depends on more than a simple prompt. Prompt engineering adds details about camera type, lens, lighting, and mood. Negative prompts remove unwanted traits such as “plastic skin” or “harsh flash.” Each layer of detail nudges the model toward believable photography.
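Layered prompting can be kept tidy with a small builder. This is a minimal sketch: the `PromptSpec` class and its fields are hypothetical. It joins the camera, lens, lighting, and mood layers into one positive prompt and keeps unwanted traits in a separate negative prompt. Tools that accept a negative prompt, such as the `negative_prompt` argument of Hugging Face diffusers pipelines, take the two strings in separate fields. Negative terms are written plainly, without "no" in front.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    subject: str
    camera: str = ""
    lens: str = ""
    lighting: str = ""
    mood: str = ""
    avoid: list[str] = field(default_factory=list)

    def positive(self) -> str:
        """Join the non-empty layers into one comma-separated prompt, subject first."""
        parts = [self.subject, self.camera, self.lens, self.lighting, self.mood]
        return ", ".join(p for p in parts if p)

    def negative(self) -> str:
        """List traits to suppress as plain terms for the negative-prompt field."""
        return ", ".join(self.avoid)


spec = PromptSpec(
    subject="portrait of a woman on a rooftop",
    camera="shot on a full-frame camera",
    lens="85mm lens, shallow depth of field",
    lighting="golden hour lighting, soft shadows",
    mood="relaxed, candid",
    avoid=["plastic skin", "harsh flash"],
)
positive_prompt = spec.positive()
negative_prompt = spec.negative()
```

Keeping each layer in its own field makes it easy to swap only the lighting or mood between shots while the rest of the look stays fixed.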

Tools That Push Toward True Photorealism

LoRAs (Low-Rank Adaptations) fine-tune models for specific styles or subjects without full retraining. ControlNet locks in pose, composition, or depth maps for precise framing. Power users often stack these tools, using LoRAs for style, ControlNet for pose, and detailed prompts for lighting and texture.
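Here is a minimal sketch of the LoRA idea, following the formulation in the original LoRA paper. The frozen weight matrix W gets a low-rank update ΔW = (α / r)·B·A, where B is d×r and A is r×k, so only r·(d + k) numbers are trained instead of d·k. Merging several adapters with per-adapter strengths is how style and subject LoRAs are commonly stacked. The helper names and toy matrices are illustrative; real implementations operate on framework tensors.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain matrix product of nested lists: (m x n) times (n x p) gives (m x p)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_delta(up: list[list[float]], down: list[list[float]], alpha: float) -> list[list[float]]:
    """LoRA update delta_W = (alpha / r) * B @ A, with B = up (d x r) and A = down (r x k)."""
    rank = len(down)
    scale = alpha / rank
    return [[scale * v for v in row] for row in matmul(up, down)]


def merge_loras(base: list[list[float]],
                adapters: list[tuple[list[list[float]], float]]) -> list[list[float]]:
    """Stack adapters onto frozen base weights: W' = W + sum of strength_i * delta_W_i."""
    merged = [row[:] for row in base]  # copy so the base weights stay untouched
    for delta, strength in adapters:
        for i, row in enumerate(delta):
            for j, v in enumerate(row):
                merged[i][j] += strength * v
    return merged


def lora_param_count(d: int, k: int, rank: int) -> tuple[int, int]:
    """Trainable values for one d x k matrix: (full fine-tune, LoRA of the given rank)."""
    return d * k, rank * (d + k)


base = [[1.0, 0.0], [0.0, 1.0]]                                        # frozen 2 x 2 weight
style = lora_delta(up=[[1.0], [2.0]], down=[[3.0, 4.0]], alpha=1.0)    # rank-1 "style" adapter
subject = lora_delta(up=[[0.5], [0.0]], down=[[0.0, 2.0]], alpha=1.0)  # rank-1 "subject" adapter
weights = merge_loras(base, [(style, 0.5), (subject, 1.0)])
```

For a 768×768 projection, full fine-tuning touches 589,824 values, while a rank-8 LoRA trains 12,288, about 2% as many. This is why LoRAs are small files that can be swapped in and out of the same base model.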

Keeping a Character’s Face Consistent

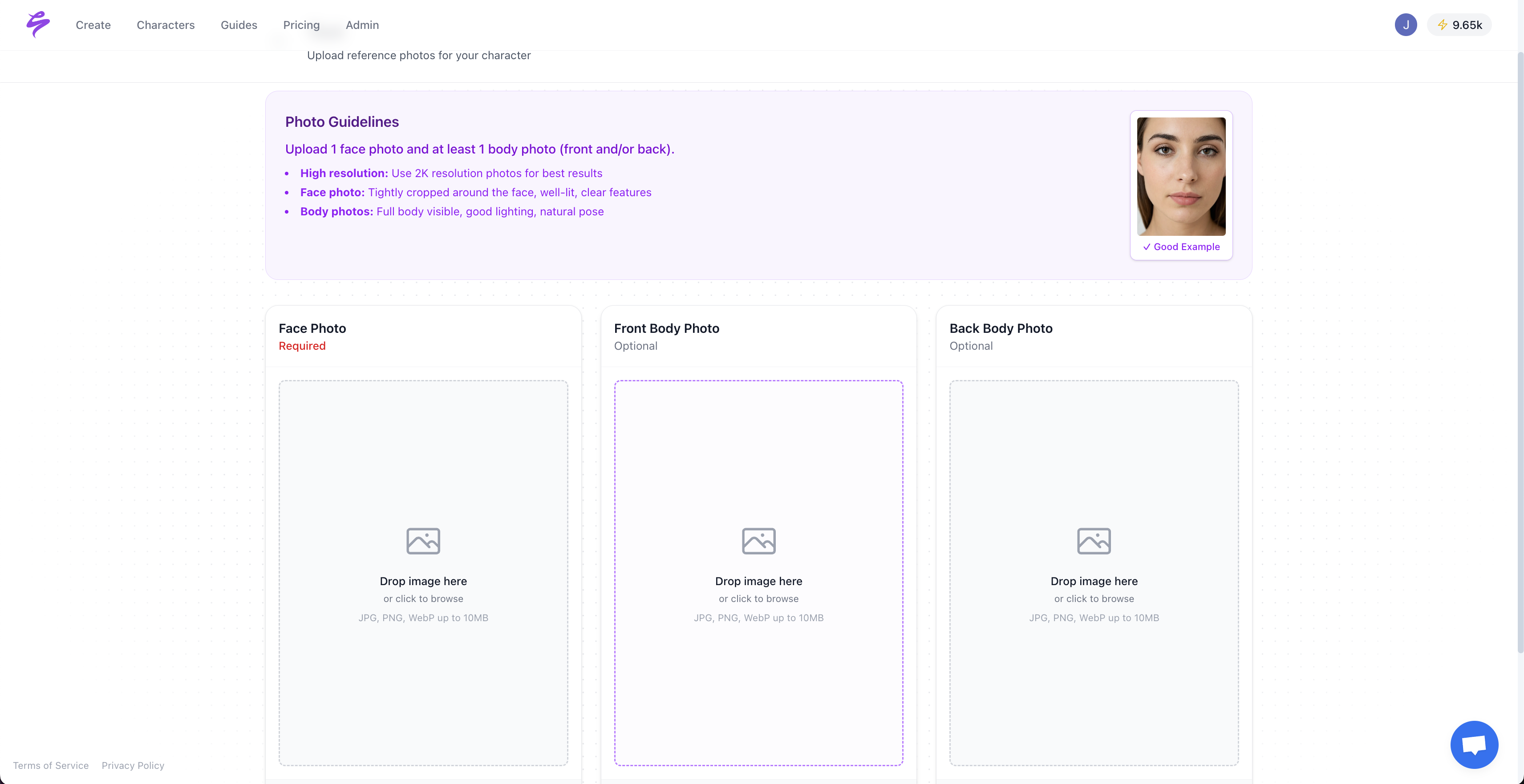

Character consistency across many images remains a core challenge for creators. IP-Adapters and FaceID pipelines reach high consistency by using clear reference photos and dedicated reference modes. The strongest setups work from as few as three photos to lock in a likeness.
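One way to check whether a likeness holds across generations is to compare face-recognition embeddings. This sketch assumes you already have such embeddings, for example from an ArcFace-style model; that is an assumption, not something the tools named here necessarily expose. It averages the reference photos into one identity vector and flags outputs whose cosine similarity drops below a cut-off. The 0.6 threshold and the 3-dimensional toy vectors are hypothetical, and real cut-offs depend on the embedding model.

```python
import math


def normalize(v: list[float]) -> list[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cosine(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def identity_centroid(reference_embeddings: list[list[float]]) -> list[float]:
    """Average the normalized reference embeddings into one unit-length identity vector."""
    normed = [normalize(e) for e in reference_embeddings]
    dim = len(normed[0])
    return normalize([sum(e[i] for e in normed) / len(normed) for i in range(dim)])


def flag_drift(reference_embeddings: list[list[float]], generated_embeddings: list[list[float]],
               threshold: float = 0.6) -> list[int]:
    """Indices of generated images whose identity similarity falls below `threshold` (hypothetical cut-off)."""
    centroid = identity_centroid(reference_embeddings)
    return [i for i, e in enumerate(generated_embeddings) if cosine(centroid, e) < threshold]


references = [[1.0, 0.1, 0.0], [0.9, 0.2, 0.1], [1.0, 0.0, 0.1]]  # three reference photos (toy)
generated = [[0.95, 0.1, 0.05], [0.1, 1.0, 0.2]]                  # second output has drifted
drifted = flag_drift(references, generated)                       # [1]
```

Averaging several reference photos smooths out quirks of any single angle or expression, which is one reason multi-photo setups tend to hold a face more steadily than a single reference.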

Sozee rebuilds likeness instantly from minimal photos with no custom training. This approach removes the usual consistency problems while keeping likeness private and isolated. General tools often drift between generations, but specialized platforms hold the face steady across unlimited scenes.

Modern Fixes for the Uncanny Valley

Advances from 2024 to 2026, including Google’s Nano Banana models, greatly reduced early issues like warped hands or overly glossy skin. Newer diffusion models now favor natural poses and lighting that feel more human.

Training data quality and smarter architectures drive this progress. Systems such as StyleGAN2 and modern diffusion models already create synthetic faces that humans often cannot distinguish from real ones.

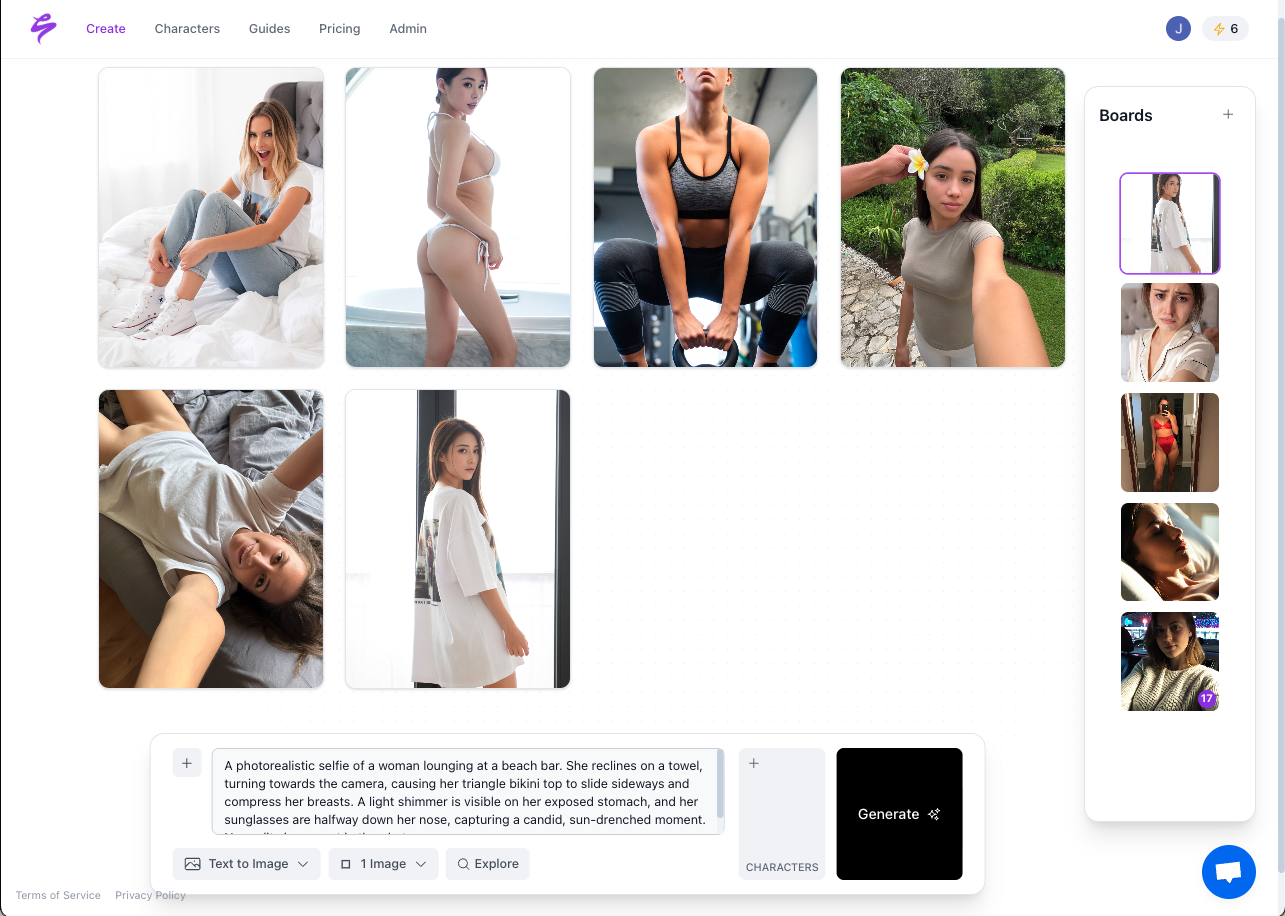

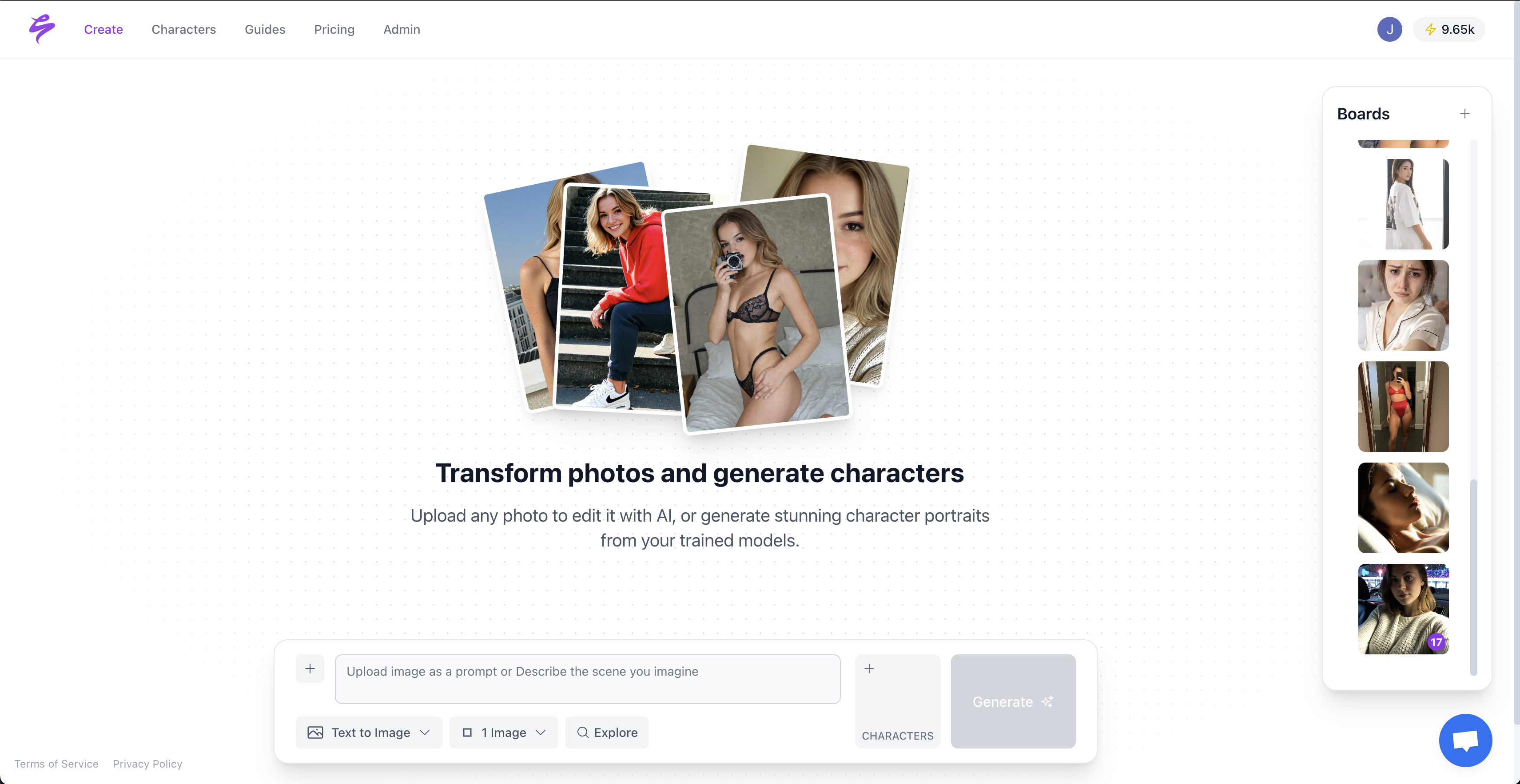

Why Sozee Fits Creator Monetization Workflows

Sozee focuses on creator income rather than general art use cases. The platform turns three uploaded photos into a private, reusable likeness that powers infinite, hyper-realistic content. Likeness stays isolated, which protects privacy while keeping results consistent across every shoot.

Sozee’s Creator-Focused Advantages:

- Minimal Input Requirements: Only 3 photos instead of 12 or more.

- Instant Likeness Recreation: No waiting for model training or tuning.

- SFW-to-NSFW Pipelines: Build funnels from public social posts to paid content.

- Agency Workflow Support: Built-in approvals and collaboration for teams.

- Platform-Specific Outputs: Formats tuned for OnlyFans, TikTok, and Instagram.

- Reusable Styles: Save winning looks and apply them again in new scenes.

| Platform | Input Required | Consistency | Creator Focus |
|---|---|---|---|
| Sozee | 3 photos | Native (private likeness) | Monetization-first |
| Midjourney | 12+ references | Variable | General art |
| Stable Diffusion | Custom training | Prompt-dependent | Technical users |

Midjourney shines for stylized art but often needs large reference sets for solid likeness. Stable Diffusion offers deep control yet expects comfort with tools like ComfyUI and custom training. Sozee skips the technical setup and delivers monetizable, shoot-ready photos in minutes.

Get started with Sozee for infinite content creation and turn a few photos into a reliable content engine.

Scaling From Single Images to Full Revenue Pipelines

Text-to-image AI turns solo creators into scalable content engines. Agencies gain predictable pipelines that no longer depend on physical shoots or travel schedules. Creators can launch pay-per-view drops, themed series, and custom fan sets without booking studios or photographers.

Streamlined Creator Workflow:

- Upload: Three photos define your digital likeness.

- Generate: Produce unlimited looks across outfits, scenes, and moods.

- Refine: Use AI tools to adjust lighting, skin tone, and fine details.

- Export: Download platform-ready sets for social, adult, or promo use.
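Exporting for different feeds mostly means cropping to each platform's aspect ratio. This minimal sketch assumes commonly used portrait targets of 4:5 for Instagram feed posts and 9:16 for TikTok; check each platform's current specs. It returns the largest centered crop as a (left, top, right, bottom) box, the same order Pillow's `Image.crop` expects. The function and dictionary names are illustrative.

```python
def center_crop_box(width: int, height: int, ratio_w: int, ratio_h: int) -> tuple[int, int, int, int]:
    """Largest centered crop with aspect ratio ratio_w:ratio_h, as (left, top, right, bottom)."""
    if width * ratio_h > height * ratio_w:
        # Source is too wide: keep the full height and trim the sides.
        new_w, new_h = height * ratio_w // ratio_h, height
    else:
        # Source is too tall (or already exact): keep the full width and trim top and bottom.
        new_w, new_h = width, width * ratio_h // ratio_w
    left = (width - new_w) // 2
    top = (height - new_h) // 2
    return left, top, left + new_w, top + new_h


# Assumed portrait targets; verify against each platform's current guidance.
TARGETS = {"instagram_portrait": (4, 5), "tiktok": (9, 16)}

boxes = {name: center_crop_box(1024, 1024, *ratio) for name, ratio in TARGETS.items()}
```

For a square 1024×1024 render, the 4:5 box is (102, 0, 921, 1024) and the 9:16 box is (224, 0, 800, 1024). Generating at a portrait size in the first place avoids losing the sides of the frame.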

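The legal picture in 2026 is most favorable for self-directed content. US proposals such as the NO FAKES Act would protect people from unauthorized digital replicas while leaving creators free to control and monetize their own image. The EU AI Act's transparency rules require deployers to disclose that deepfake images are AI-generated, and the Act's exemption covers only purely personal, non-professional use. Creator pipelines therefore stay compliant by labeling AI-generated content rather than by falling outside the rules.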

Selling AI-generated content of yourself remains legal when you consent and follow platform disclosure rules. Private models like Sozee’s avoid training on public datasets, which reduces copyright and data concerns.

Frequently Asked Questions

How text-to-image AI keeps characters consistent

Character consistency comes from reference images, LoRAs, and IP-Adapters that lock in identity traits across generations. The strongest setups work from about three high-quality photos that show different angles and expressions. Platforms like Sozee rebuild likeness instantly from these photos without manual training. Strong visual anchors during setup, combined with reference-aware generation, keep the face stable across endless variations.

Legality of selling AI-generated photos in 2026

Selling AI-generated content that uses your own likeness is generally legal across major markets in 2026. US rules and proposals focus on blocking unauthorized use of someone else's image, not on limiting your right to monetize yourself. EU AI Act rules require disclosure labels for deepfakes but do not ban commercial use. Consent remains the key factor. You can sell content of yourself, but not of other people without permission. Private models also reduce copyright risk from public training data.

Most realistic text-to-image AIs for creators in 2026

Sozee leads for creator-focused realism and monetization features with minimal input. FLUX1.1 Pro delivers strong general realism with 3x faster inference and stable compositions. Stable Diffusion 3 offers high-end photorealism for users comfortable with technical workflows. Google’s Nano Banana models excel at natural scenes that avoid uncanny valley effects. GLM-Image works well for text-heavy images, and DALL-E 3 remains popular for artistic realism.

Practical ways to avoid the uncanny valley

Current models already reduce uncanny valley issues through better data and architectures. You can push results further by using sharp reference photos, specifying natural lighting, and avoiding overly perfect symmetry. Negative prompts that list terms such as “plastic skin” or “glossy finish” help filter artificial looks. Platforms like Sozee add refinement tools that tune skin texture, lighting, and facial details toward natural human variation.

Recreating a specific likeness with AI

Modern text-to-image systems recreate specific likenesses reliably when they receive clear reference photos. The usual minimum is three to five images that show different angles and expressions. FaceID, IP-Adapters, and face-recognition encoders capture core facial structure and map it into a stable digital identity. Private, likeness-specific models reach the highest accuracy and keep data isolated. Strong source photos with varied angles and consistent lighting give the best results.

Conclusion: Turn Your Likeness Into Infinite Content

Text-to-image AI powered by diffusion models finally resolves the creator content crunch. These systems flip the economics of content by matching infinite demand with effectively infinite supply. Knowing the basics of CLIP, U-Net, and denoising helps creators guide results instead of leaving everything to chance.

Sozee represents this technology tuned specifically for monetization. Minimal input, instant likeness recreation, private processing, and creator-first workflows remove the realism and consistency problems that slow general tools. The outcome is hyper-realistic, endlessly scalable content that still looks exactly like you.

Creators who can publish without limits will own the next wave of the creator economy. Text-to-image AI makes that scale possible today for solo creators and agencies alike.

Go viral today: get started with Sozee and turn your likeness into an always-on content engine.