Key Takeaways

- Hyper-photorealistic AI content depends on precise control of resolution, detail, and data quality during LoRA training.

- Careful image curation, tuned training parameters, and clear prompts reduce plastic skin, deformed anatomy, and other visible AI artifacts.

- Negative prompts, post-processing, and the right balance between speed and quality help keep outputs consistent and monetizable.

- Creators and agencies gain better returns when AI images closely match professional photography standards and stay on brand at scale.

- Sozee removes the need for manual LoRA training and delivers consistent, hyper-realistic likenesses from a few photos, so creators can focus on content and revenue growth. Sign up for Sozee to streamline high-quality production.

The Creator’s Dilemma: Why Resolution and Detail in LoRAs Are Breaking Photorealism

Fans recognize artificial-looking content quickly, and low-quality visuals reduce trust, engagement, and earnings. Many custom LoRA workflows struggle with resolution and fine detail, which leads to waxy or plastic skin, drifting facial features, deformed hands, and images that clearly look AI-generated instead of like studio photography.

These problems affect business outcomes. Artificial-looking content lowers conversion rates, weakens fan loyalty, and makes agencies look unreliable. High-value creator workflows now treat strong resolution handling and precise detail control as baseline requirements, not optional enhancements.

Setting Up for Success: Prerequisites for Photorealistic LoRA Workflows

Essential Tools and Hardware for LoRA Photorealism

Photorealistic LoRA training works best with production-ready tools and hardware. Many creators use AUTOMATIC1111’s Stable Diffusion WebUI for generation control, dedicated LoRA training tools such as Kohya_ss for parameter tuning, and GPUs with at least 12 GB of VRAM to support higher-resolution datasets without aggressive downsampling.

Stronger hardware reduces the need for compression that can blur fine texture before the model ever learns it. Consistent settings across experiments also make it easier to identify which changes actually improve detail.

Core LoRA Concepts for Realistic Output

LoRA (Low-Rank Adaptation) keeps the base model frozen and trains small low-rank matrices, most commonly on the cross-attention layers that connect text prompts and images in Stable Diffusion. These compact adapters can be 10–100 times smaller than full checkpoints while still capturing detailed styles and subject likenesses. That size and flexibility make LoRAs useful for recreating specific faces and characters consistently across many images.
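
To make the size difference concrete, the sketch below counts parameters for a single weight matrix and for a low-rank update of that matrix. The 1024 × 1024 layer size and the ranks are illustrative assumptions, not values taken from any specific checkpoint, and whole-file size ratios also depend on how many layers a given LoRA targets.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters in a dense weight matrix W of shape (d_out, d_in)."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in a low-rank update B @ A, with B (d_out, rank) and A (rank, d_in)."""
    return rank * (d_out + d_in)


def compression_ratio(d_out: int, d_in: int, rank: int) -> float:
    """How many times smaller the low-rank update is than the dense matrix."""
    return full_params(d_out, d_in) / lora_params(d_out, d_in, rank)


if __name__ == "__main__":
    # Hypothetical layer size, chosen only for illustration.
    d_out, d_in = 1024, 1024
    for rank in (4, 16, 64):
        count = lora_params(d_out, d_in, rank)
        ratio = compression_ratio(d_out, d_in, rank)
        print(f"rank={rank}: {count} trainable params, {ratio:.0f}x smaller")
```

Under these assumptions, rank 4 stores 8,192 values against 1,048,576 for the dense matrix, and raising the rank trades that saving for more capacity.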

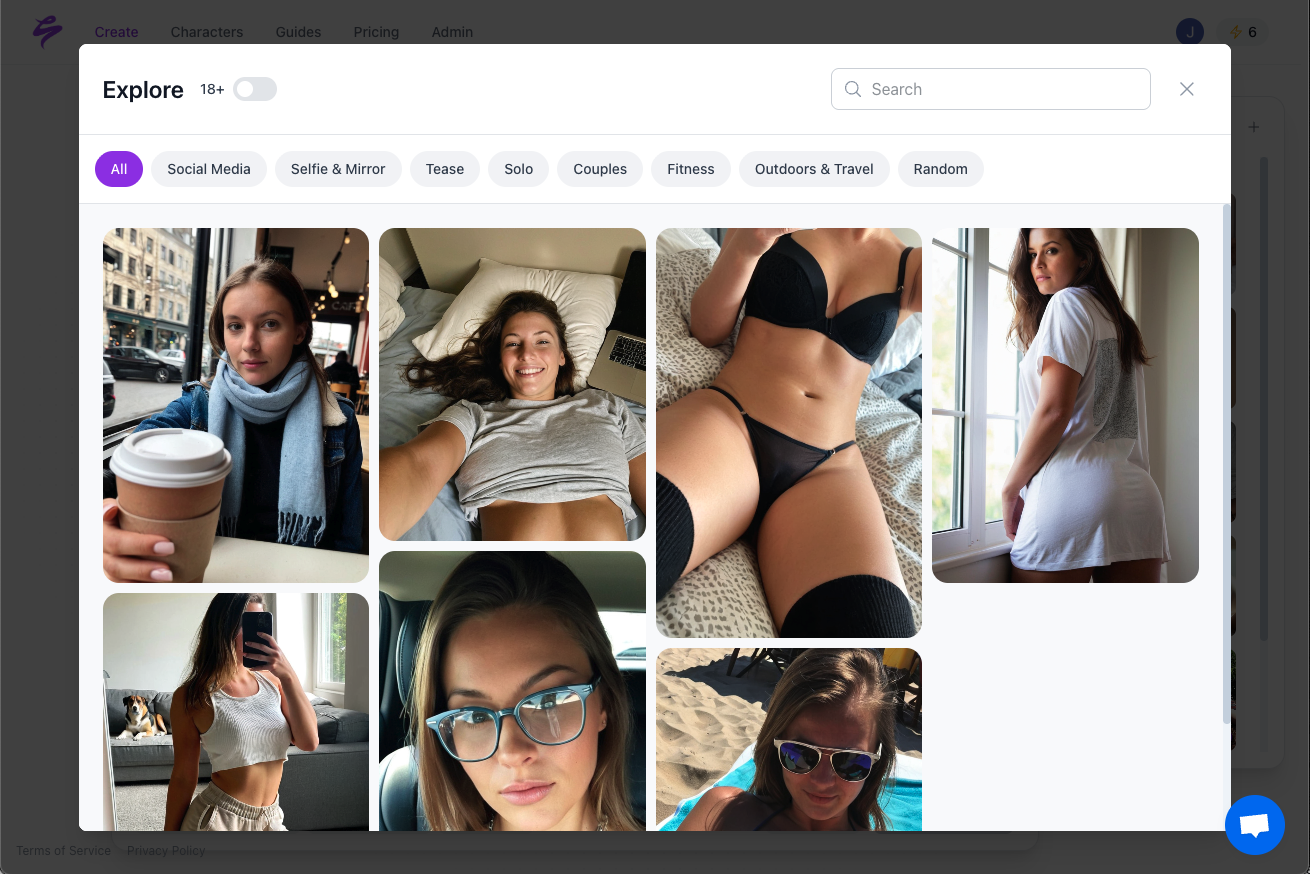

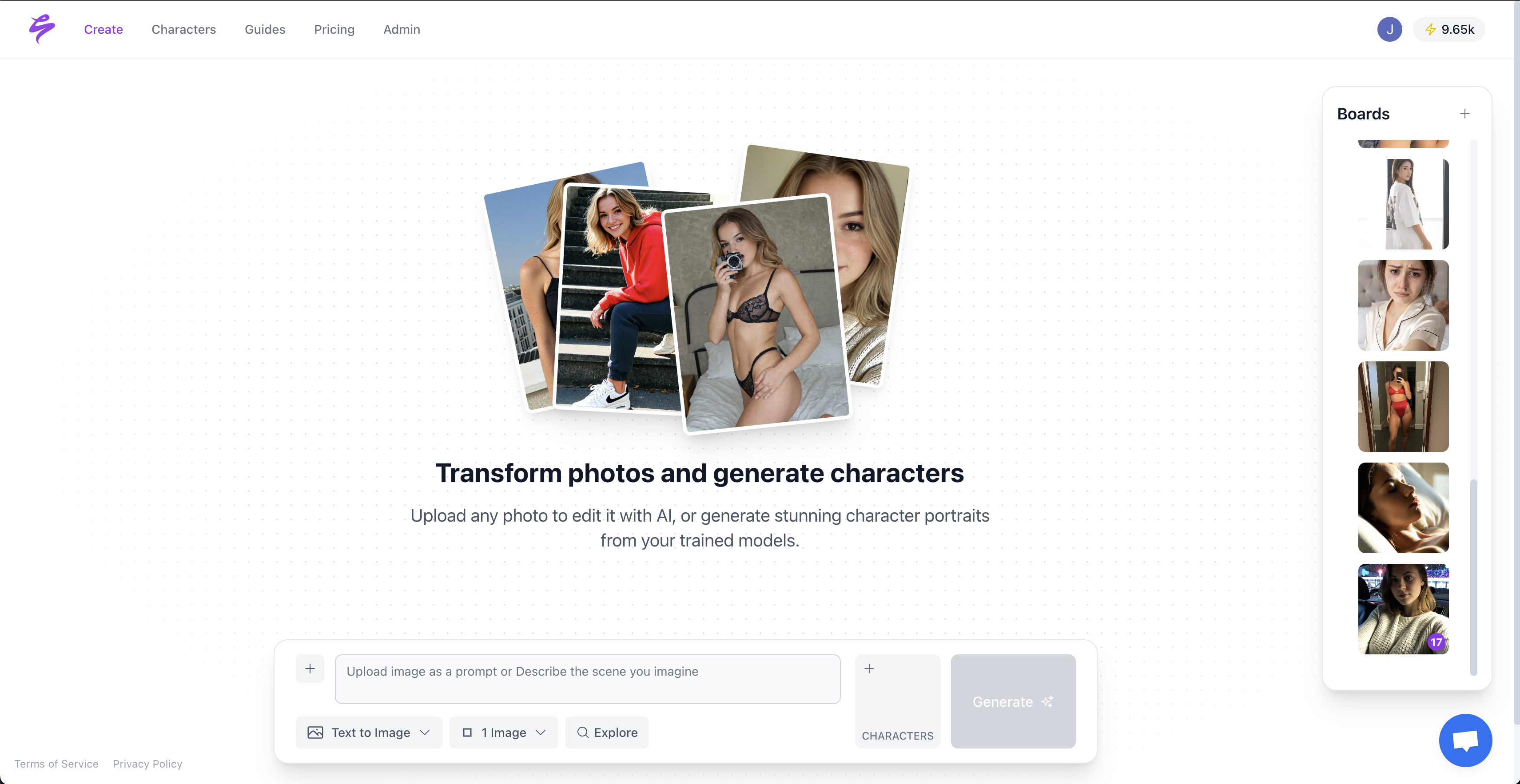

The Sozee Advantage: Hyper-Realism Without Technical Training

Manual LoRA training often takes days of experimentation and troubleshooting. Sozee removes that technical layer. Creators upload as few as three photos and receive a likeness model that produces consistent, hyper-realistic images without managing datasets, ranks, or learning rates. Create your Sozee account to compare manual tuning with an instant, production-focused workflow.

Step-by-Step Workflow: Handling Resolution and Detail in Custom LoRAs

Step 1: Curate Training Data for Detail Fidelity

High-quality data gives LoRAs enough information to reproduce realistic skin, hair, and facial structure. Use sharp, well-lit images that show pores, hair strands, and natural shadows. Avoid compressed, low-resolution, or heavily filtered photos that hide texture, since the model cannot learn detail that is not visible. Small but carefully curated datasets, sometimes as few as about 40 high-quality images, can still support strong results.

Include a mix of angles, expressions, and crops while keeping identity and style consistent. Close-ups of eyes, mouth, and hair help the model learn fine-grained detail. Remove any image with motion blur, harsh artificial lighting, or heavy beauty filters that would confuse what “real” skin or anatomy should look like.
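
The curation rules above can be approximated with a simple automated pre-filter before manual review. This is a minimal sketch: the `Candidate` record, the 1024-pixel minimum side, and the sharpness threshold of 100 are all assumptions for illustration, and the variance-of-Laplacian score is one common blur heuristic rather than a method this workflow prescribes.

```python
from dataclasses import dataclass


def laplacian_variance(gray: list[list[float]]) -> float:
    """Variance of a 4-neighbour Laplacian; low values suggest a blurry image.

    `gray` is a 2D list of grayscale pixel values, at least 3x3 in size.
    """
    height, width = len(gray), len(gray[0])
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
        - 4 * gray[y][x]
        for y in range(1, height - 1)
        for x in range(1, width - 1)
    ]
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


@dataclass(frozen=True)
class Candidate:
    """Hypothetical record describing one candidate training image."""
    path: str
    width: int
    height: int
    sharpness: float               # e.g. a laplacian_variance score
    heavily_filtered: bool = False  # set by a human reviewer


def keep(img: Candidate, min_side: int = 1024, min_sharpness: float = 100.0) -> bool:
    """Return True if the image passes the assumed resolution and blur thresholds."""
    if min(img.width, img.height) < min_side:
        return False
    if img.sharpness < min_sharpness:
        return False
    return not img.heavily_filtered


def curate(candidates: list[Candidate]) -> list[Candidate]:
    """Keep only candidates that pass every check, preserving order."""
    return [c for c in candidates if keep(c)]
```

A filter like this only removes obvious rejects. Lighting quality, expression variety, and identity consistency still need a human eye.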

Step 2: Tune LoRA Training Parameters for Sharp Detail

LoRA training settings shape how much detail the model preserves and how stable likenesses remain. Learning rate controls how quickly the model adapts. Values in the range of 1e-4 to 5e-4 often work well for likeness and texture detail. Rates above that range tend to overfit and wash out subtle texture, while rates below it may fail to capture a subject’s unique traits.

Rank (dimension) adjusts how much information the LoRA can store. Higher ranks such as 32–128 handle more nuance but require stronger datasets and more compute. Smaller batch sizes, often between 1 and 4, can improve detail learning for individual subjects. Tighter control of regularization keeps outputs close to the desired style instead of drifting toward generic faces.
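
One way to keep these settings consistent between experiments is to hold them in a small config object that flags values outside the ranges discussed above. The sketch below is an assumption-heavy illustration: the field names are hypothetical and are not flags from Kohya_ss or any other trainer, and the `repeats` and `epochs` defaults are placeholders.

```python
import math
from dataclasses import dataclass


@dataclass
class LoraTrainingConfig:
    """Hypothetical container for the settings discussed in this step."""
    learning_rate: float = 1e-4
    rank: int = 32
    batch_size: int = 2
    num_images: int = 40
    repeats: int = 10   # assumed: times each image is seen per epoch
    epochs: int = 10    # assumed placeholder

    def total_steps(self) -> int:
        """Estimate optimizer steps as ceil(images * repeats / batch) * epochs."""
        steps_per_epoch = math.ceil(self.num_images * self.repeats / self.batch_size)
        return steps_per_epoch * self.epochs

    def check(self) -> list[str]:
        """Return informational notes for values outside the ranges in the text."""
        notes = []
        if not 1e-4 <= self.learning_rate <= 5e-4:
            notes.append("learning_rate is outside the 1e-4 to 5e-4 range")
        if not 32 <= self.rank <= 128:
            notes.append("rank is outside the 32 to 128 range")
        if not 1 <= self.batch_size <= 4:
            notes.append("batch_size is outside the 1 to 4 range")
        return notes


if __name__ == "__main__":
    config = LoraTrainingConfig(learning_rate=1e-3)
    print(config.total_steps())
    for note in config.check():
        print("note:", note)
```

The notes are prompts to double-check a setting, not hard errors. Values outside these ranges can still be reasonable for some datasets.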

Step 3: Use Focused Prompts and Negative Prompts

Clear prompts help the model emphasize the right details. Positive prompts can call out terms such as “detailed skin texture,” “sharp focus,” “individual hair strands,” and “studio lighting” to guide the generation toward realistic photography. Short, direct phrasing works better than long, overloaded prompts.

Negative prompts serve as quality filters. Phrases like “plastic skin,” “blurry,” “low quality,” “uncanny valley,” “deformed hands,” and “merged fingers” discourage common LoRA artifacts that break realism. Treat these lists as reusable templates across projects to maintain visual standards.
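
The reusable-template idea can be sketched as a small helper that combines a subject description with the detail terms and negative terms listed above. The function name, the dictionary keys, and the example subject are hypothetical and are not tied to any particular tool's API.

```python
# Terms taken from the positive and negative examples in this step.
POSITIVE_DETAIL = [
    "detailed skin texture",
    "sharp focus",
    "individual hair strands",
    "studio lighting",
]
NEGATIVE_TEMPLATE = [
    "plastic skin",
    "blurry",
    "low quality",
    "uncanny valley",
    "deformed hands",
    "merged fingers",
]


def build_prompts(subject: str, extra_negative: tuple[str, ...] = ()) -> dict[str, str]:
    """Combine a short subject with the shared detail and negative templates."""
    positive = ", ".join([subject, *POSITIVE_DETAIL])
    # dict.fromkeys removes duplicates while keeping the original order.
    negative_terms = dict.fromkeys([*NEGATIVE_TEMPLATE, *extra_negative])
    return {"prompt": positive, "negative_prompt": ", ".join(negative_terms)}


if __name__ == "__main__":
    prompts = build_prompts("portrait photo of a woman", ("watermark",))
    print(prompts["prompt"])
    print(prompts["negative_prompt"])
```

Keeping the templates in one place means a change to the quality standard applies to every project that uses them.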

Step 4: Iterate, Evaluate, and Combine Models When Needed

Short test runs reveal whether a LoRA is handling detail correctly before large-scale production. Generate small batches, compare skin texture, eyes, hands, and overall likeness, and keep notes on which settings and prompts work best for each subject or brand.

Some advanced workflows combine specialized LoRAs for skin, hair, and facial structure to reach higher realism levels. Detail-focused LoRAs can also be applied with positive or negative weights to dial texture up or down depending on the use case.
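
Combining several LoRAs with individual weights can be sketched as prompt assembly, assuming the `<lora:name:weight>` tag syntax used by the AUTOMATIC1111 WebUI. The LoRA names below are hypothetical placeholders, and the weights are illustrative rather than recommended values.

```python
def lora_tag(name: str, weight: float) -> str:
    """Format one LoRA reference; a negative weight pushes the effect the other way."""
    return f"<lora:{name}:{weight:g}>"


def with_loras(prompt: str, loras: dict[str, float]) -> str:
    """Append one tag per LoRA to the prompt, in insertion order."""
    tags = " ".join(lora_tag(name, weight) for name, weight in loras.items())
    return f"{prompt} {tags}" if tags else prompt


if __name__ == "__main__":
    # Hypothetical LoRA names: a likeness model plus a detail adjuster.
    stack = {"subject_likeness": 0.8, "skin_detail": 0.4, "smooth_style": -0.3}
    print(with_loras("portrait photo, sharp focus", stack))
```

Recording the exact weight stack alongside each test batch makes it easier to reproduce a combination that works.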

Common Detail Problems and How to Fix Them

Plastic or overly airbrushed skin often points to aggressive learning rates, smoothed source photos, or missing negative prompts. Deformed hands or facial features usually signal limited pose variety or prompts that do not clearly specify anatomy.

ControlNet and similar tools can stabilize proportions in difficult regions such as hands. When detail varies across generations, standardize prompts, sampler settings, and guidance scales, then adjust one variable at a time while you test.
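
Adjusting one variable at a time can be sketched as a small helper that expands a fixed baseline into test runs, each differing from the baseline in exactly one setting. The setting names and values are illustrative assumptions, not recommended defaults.

```python
def one_at_a_time(baseline: dict, sweeps: dict[str, list]) -> list[dict]:
    """Return the baseline plus runs that each change a single setting."""
    runs = [dict(baseline)]
    for key, values in sweeps.items():
        for value in values:
            if value == baseline[key]:
                continue  # already covered by the baseline run
            runs.append({**baseline, key: value})
    return runs


if __name__ == "__main__":
    # Hypothetical baseline; keep the seed fixed so differences come from the sweep.
    baseline = {"sampler": "Euler a", "steps": 30, "cfg_scale": 7.0, "seed": 1234}
    sweeps = {"steps": [20, 30, 40], "cfg_scale": [5.0, 7.0, 9.0]}
    for run in one_at_a_time(baseline, sweeps):
        print(run)
```

Because every run shares the same prompt, sampler, and seed, any change in detail can be attributed to the single setting that moved.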

Advanced Techniques for High-Resolution, Photorealistic Output

Subtle Post-Processing and Upscaling

Careful editing after generation improves final images without making them look fake. Light adjustments to color, contrast, and sharpness can match a creator’s brand or replicate a specific camera style. Upscaling tools such as ESRGAN help preserve micro-detail at larger sizes, but heavy smoothing or strong filters usually reintroduce the same plastic look the LoRA tried to avoid.

Strong results start with a detailed base image. Post-processing should enhance existing realism, not attempt to repair training issues.

Balancing Speed and Detail With Fast LoRAs

LCM and Turbo LoRA variants can generate acceptable images in far fewer steps, often with some loss of fine detail. These faster models work well for concept exploration, thumbnails, and rapid testing.

Detail-optimized LoRAs remain better suited for final deliverables such as campaign images or premium fan content. A mixed approach keeps workflows efficient while preserving quality where it matters most.
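
The mixed approach can be sketched as a lookup that routes each job to a fast draft preset or a slower final preset. The step counts, guidance values, and use-case labels below are assumptions chosen for illustration and should be tuned per model.

```python
# Assumed presets: a fast LoRA with few steps for drafts, a detail LoRA for finals.
PRESETS = {
    "draft": {"use_fast_lora": True, "steps": 6, "cfg_scale": 1.5},
    "final": {"use_fast_lora": False, "steps": 30, "cfg_scale": 7.0},
}

# Hypothetical labels for work where speed matters more than fine detail.
DRAFT_USES = {"concept", "thumbnail", "test"}


def preset_for(use_case: str) -> dict:
    """Pick the draft preset for exploratory work and the final preset otherwise."""
    tier = "draft" if use_case in DRAFT_USES else "final"
    return {"tier": tier, **PRESETS[tier]}


if __name__ == "__main__":
    for job in ("thumbnail", "campaign"):
        print(job, preset_for(job))
```

Defaulting unknown use cases to the final preset errs on the side of quality, at the cost of slower generation.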

Defining Success: How Hyper-Photorealistic Detail Supports Monetization

Realistic AI images support measurable business gains. When outputs match professional photography, creators often see higher click-through rates, stronger fan retention, more repeat buyers, and faster approvals from agencies and brand partners. Small differences in perceived quality can decide whether a campaign underperforms or scales.

Reliable detail also makes it easier to fulfill custom image requests, maintain consistent posting schedules, and repurpose content across platforms. Agencies benefit from reduced dependence on live shoots and gain more predictable margins. Start using Sozee to replace manual experimentation with a repeatable, revenue-focused pipeline.

Sozee’s “Hyper-Realism or Nothing” approach supports these goals by keeping likeness and image quality consistent across generations, which protects creator credibility and long-term monetization.

Why Sozee Works Well as an AI Content Studio for Hyper-Realistic Output

Sozee vs. Traditional Custom LoRA Workflows

| Feature | Traditional Custom LoRA Workflow | Sozee AI Content Studio |
| --- | --- | --- |
| Likeness Recreation | Requires data curation, parameter tuning, and retraining | Uses three photos to build stable, realistic likenesses |
| Detail Management | Depends on manual prompts and constant tweaking | Uses a built-in photorealism engine with guided controls |
| Content Output Scale | Consumes time and GPU resources per concept | Produces large volumes of on-brand photos and videos quickly |
| Ease of Use | Rewards users with technical ML and diffusion expertise | Removes model training and infrastructure setup |

Sozee focuses on predictability and scale rather than experimentation. Creators and agencies can skip dataset tuning and infrastructure choices and instead move directly to planning shoots, angles, outfits, and storylines in the AI environment.

Frequently Asked Questions (FAQ) on LoRA Resolution and Detail

What is the ideal number of training images for a photorealistic custom LoRA?

Most likeness LoRAs perform well with 20–100 images. For faces, 30–50 sharp, well-lit photos that cover different angles and expressions usually provide enough variety. Image quality, consistent framing, and realistic lighting matter more than raw count.

How can I reduce the “uncanny valley” effect in LoRA-generated images?

Uncanny results often come from tiny asymmetry errors, unnatural reflections, or mismatched textures. Higher-quality source images, realistic expressions, and prompts that focus on natural skin texture and eyes help. Negative prompts that flag “plastic skin,” “fake,” or “artificial lighting” can suppress many of these subtle issues before they appear.

How does Sozee ensure hyper-realistic details without custom LoRA training?

Sozee uses pre-optimized neural models built specifically for likeness recreation and photorealistic rendering. The platform analyzes uploaded photos and builds a detailed representation of the creator or talent without requiring users to handle datasets, parameters, or checkpoints. This setup avoids common training mistakes and delivers consistent, high-detail results out of the box.

Conclusion: Detail and Control Shape the Future of Hyper-Realism

Resolution and detail management separate casual AI image generation from professional, monetizable content. Thoughtful data curation, tuned training parameters, well-structured prompts, and targeted post-processing all contribute to hyper-photorealistic results that stand next to studio photography.

Creators who pair this understanding with efficient tools gain a clear advantage. Manual LoRA work remains useful for experimentation, but Sozee shows that high-end results no longer require complex pipelines. Get started with Sozee’s AI Content Studio to produce consistent, on-brand, photorealistic content at the speed your audience and partners expect.