Key Takeaways

- Creators and agencies need clear data provenance and usage policies to protect likeness rights and comply with fast-changing AI regulations.

- Explicit, revocable consent for any AI-generated likeness is now a legal and commercial necessity for creators and talent managers.

- Private, isolated likeness models reduce the risk of data leaks, deepfakes, and unauthorized replication of a creator’s persona.

- Transparent AI content disclosures and embedded provenance metadata help meet new labeling laws and maintain audience trust.

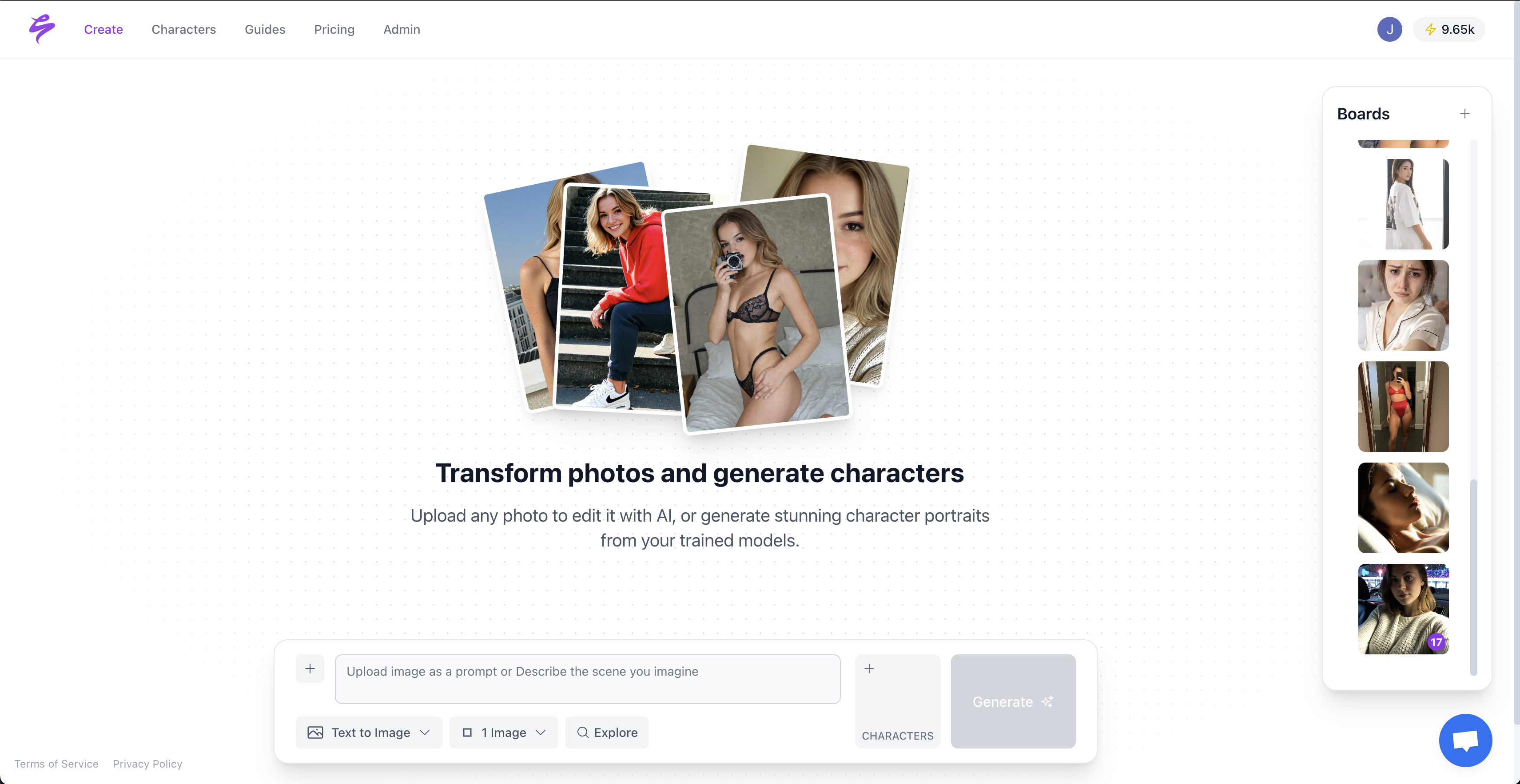

- Specialized tools like Sozee.ai combine monetization workflows with strong privacy controls, letting creators scale content safely. Get started with Sozee today.

1. Mandate Transparent Data Provenance and Usage Policies for Digital Identity

Clear data provenance is the foundation of digital identity protection. Creators need to know what data trains an AI model and how any likeness-related data is stored, shared, and retained.

California AB 2013 requires developers of publicly available generative AI systems to publish high-level summaries of their training data, and California AB 1008 treats certain AI-generated outputs as personal information. These rules push platforms to document data origins and maintain trails that show where data lives at any point in time. Creators who rely on that documentation reduce the risk of hidden data misuse and surprise regulatory exposure.
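A trail that shows where data lives can be made concrete with a small sketch. The Python below is a minimal illustration, assuming a platform records every event that stores, copies, or deletes likeness data; the `ProvenanceLog` class, its field names, and the location strings are hypothetical, not a format any statute or platform prescribes. Each entry carries the SHA-256 hash of the previous one (hash chaining), so quietly editing an old entry becomes detectable.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class ProvenanceLog:
    """Hypothetical append-only trail of where likeness data lives.

    Each entry stores the SHA-256 hash of the entry before it, so editing an
    earlier entry breaks the chain and verify() reports it.
    """
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except "hash" itself, with keys in a stable order.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def record(self, asset_id: str, action: str, location: str) -> dict:
        entry = {
            "asset_id": asset_id,
            "action": action,      # "stored", "copied", or "deleted"
            "location": location,  # e.g. a storage bucket or region name
            "at": datetime.now(timezone.utc).isoformat(),
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link; False means the trail was altered."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

    def locations(self, asset_id: str) -> set:
        """Replay the log to find where an asset currently lives."""
        live = set()
        for entry in self.entries:
            if entry["asset_id"] != asset_id:
                continue
            if entry["action"] == "deleted":
                live.discard(entry["location"])
            else:
                live.add(entry["location"])
        return live


log = ProvenanceLog()
log.record("face-set-01", "stored", "private-store/us-west")
log.record("face-set-01", "copied", "training-sandbox/creator-42")
log.record("face-set-01", "deleted", "training-sandbox/creator-42")
print(log.locations("face-set-01"))  # {'private-store/us-west'}
print(log.verify())                  # True
```

Replaying the log answers "where is my data right now?", which is the question a creator would put to a platform during a provenance review.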

Transparent Policies Strengthen Creator Trust

Strong policies around data handling protect brand and reputation. Platforms that describe what data they collect, how they secure it, and how they restrict use for training or sharing signal reliability to creators, agencies, and brand partners.

Data Provenance Checks for Your AI Platform

- Confirm that the platform documents where training data comes from and how it is updated or removed.

- Review contracts for explicit language on how likeness data is stored, processed, and retained.

- Require written confirmation that your data is not used for general model training and is not sold or shared with third parties.

- Look for an acceptable use policy that blocks misuse of your digital identity and provides remedies for violations.

- Claim your likeness with Sozee.ai’s private model guarantee to keep control of how your identity is used.

2. Implement Robust Consent Mechanisms for Digital Likeness

Explicit consent now sits at the center of digital likeness protection. Laws such as Illinois’s Digital Voice and Likeness Protection Act (HB 4762) and California AB 2602 on AI-generated likeness consent require clear permission before creating, using, or distributing digital replicas of a person. Illinois HB 4875, which amends the Right of Publicity Act, reinforces these protections for digital replicas.

Several state AI laws now require user-facing disclosures when AI interacts with individuals or generates content. These rules make informed consent and clear notice a baseline requirement for creators and agencies working with AI likenesses.

Consent as a Legal and Commercial Requirement

Consent agreements now need more detail than traditional talent releases. They must describe the types of content, platforms, monetization paths, and geographic reach, along with how long the consent applies and how it can be revoked.

Best Practices for Digital Likeness Consent

- Use clear language that explains how the likeness will be generated, edited, and distributed.

- Specify platforms and content types, such as SFW or NSFW, paid content, and promotional uses.

- Include simple processes for revoking or narrowing consent over time.

- Route agreements through legal or union review when working with professional talent.

- Choose tools like Sozee.ai that support creator-controlled consent settings and keep each likeness tied to a private model.
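The consent terms described above (platforms, content types, geographic reach, duration, and revocation) map naturally onto a record that software can check before every use. The Python below is a minimal sketch under that assumption; `LikenessConsent`, its fields, and the platform names are hypothetical and stand in for whatever a real agreement and platform define. It is not a substitute for the contract itself.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class LikenessConsent:
    """Hypothetical consent record holding the terms a likeness agreement
    should spell out: platforms, content types, territory, term, revocation."""
    creator_id: str
    platforms: set
    content_types: set              # e.g. {"SFW", "promotional"}
    territories: set                # e.g. {"US"}
    expires_at: datetime
    revoked_at: Optional[datetime] = None
    history: list = field(default_factory=list)

    def permits(self, platform: str, content_type: str, territory: str,
                when: datetime) -> bool:
        """True only if a proposed use falls inside every granted dimension."""
        if self.revoked_at is not None and when >= self.revoked_at:
            return False
        if when >= self.expires_at:
            return False
        return (platform in self.platforms
                and content_type in self.content_types
                and territory in self.territories)

    def narrow(self, *, platforms=None, content_types=None, at: datetime) -> None:
        """Shrink the grant. Intersection means scope can only get smaller."""
        if platforms is not None:
            self.platforms = self.platforms & set(platforms)
        if content_types is not None:
            self.content_types = self.content_types & set(content_types)
        self.history.append(("narrowed", at))

    def revoke(self, at: datetime) -> None:
        """Withdraw consent for all uses from `at` onward."""
        self.revoked_at = at
        self.history.append(("revoked", at))


utc = timezone.utc
consent = LikenessConsent(
    creator_id="creator-42",
    platforms={"fan-platform", "social-video"},
    content_types={"SFW", "promotional"},
    territories={"US"},
    expires_at=datetime(2026, 12, 31, tzinfo=utc),
)
march = datetime(2026, 3, 1, tzinfo=utc)
print(consent.permits("social-video", "promotional", "US", march))  # True
print(consent.permits("social-video", "NSFW", "US", march))         # False
consent.revoke(at=datetime(2026, 6, 1, tzinfo=utc))
print(consent.permits("social-video", "promotional", "US",
                      datetime(2026, 7, 1, tzinfo=utc)))            # False
```

Using set intersection in `narrow` is a deliberate choice: a narrowing request can never accidentally widen the grant.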

3. Prioritize Platforms Offering Isolated and Secure Likeness Models

Private, isolated models offer a higher level of protection than shared, general-purpose AI systems. When a likeness is trained inside a dedicated model that never feeds a public dataset, the chances of accidental exposure or imitation drop substantially.

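Colorado SB24-205 classifies AI systems that make, or are a substantial factor in making, consequential decisions (for example, in employment, housing, or lending) as high-risk and requires deployers to maintain risk management policies. The law does not target likeness tools directly, but the broader trend toward formal risk controls reinforces the value of platforms that separate each creator’s data and prevent cross-contamination between models.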

How Isolated Models Protect Your Persona

Isolated models help ensure that photos, voice data, and performance styles remain unique to the creator. Sozee.ai describes its approach as “Privacy as a Promise”: models are private, isolated, and never used to train anything else, so one creator’s persona cannot be accidentally exposed to or cloned by another user.

- Verify that your uploads never feed public or shared datasets.

- Confirm that the provider can delete or retire a likeness model on request.

- Check that agencies can manage separate models for each client without cross-use.

- Get started with isolated AI models to lock down your digital identity.
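The isolation checks in this list can be pictured as a gate in front of every training run. The Python below is a minimal sketch, assuming each uploaded asset is registered to exactly one creator; `LikenessModelRegistry` and `IsolationError` are hypothetical names, and "deleting" here only clears the registry's index, not the underlying files or model weights a real provider would also have to remove.

```python
class IsolationError(Exception):
    """Raised when an operation would mix one creator's data with another's."""


class LikenessModelRegistry:
    """Hypothetical registry: one private model per creator, no shared pool."""

    def __init__(self) -> None:
        self._assets_by_creator: dict = {}  # creator_id -> set of asset_ids
        self._owner_of_asset: dict = {}     # asset_id -> creator_id

    def add_asset(self, creator_id: str, asset_id: str) -> None:
        """Register an upload; an asset can belong to only one creator."""
        owner = self._owner_of_asset.setdefault(asset_id, creator_id)
        if owner != creator_id:
            raise IsolationError(f"{asset_id} already belongs to {owner}")
        self._assets_by_creator.setdefault(creator_id, set()).add(asset_id)

    def training_set(self, creator_id: str, asset_ids: list) -> list:
        """Approve assets for this creator's model, refusing any foreign asset."""
        foreign = [a for a in asset_ids if self._owner_of_asset.get(a) != creator_id]
        if foreign:
            raise IsolationError(f"not owned by {creator_id}: {foreign}")
        return list(asset_ids)

    def delete_model(self, creator_id: str) -> int:
        """Drop a creator's entries on request; return how many assets were removed.

        Only the registry index is cleared in this sketch; real deletion must
        also remove stored files and trained weights.
        """
        assets = self._assets_by_creator.pop(creator_id, set())
        for asset_id in assets:
            del self._owner_of_asset[asset_id]
        return len(assets)


registry = LikenessModelRegistry()
registry.add_asset("creator-a", "photo-001")
registry.add_asset("creator-b", "photo-900")
print(registry.training_set("creator-a", ["photo-001"]))  # ['photo-001']
try:
    registry.training_set("creator-a", ["photo-001", "photo-900"])
except IsolationError as err:
    print("blocked:", err)
print(registry.delete_model("creator-a"))  # 1
```

An agency managing several clients would see the same behavior: a training request that mixes two clients' assets fails instead of silently blending them.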

4. Demand Clear AI-Generated Content Disclosures and Provenance

Transparent labeling of AI-generated content now forms a key part of compliance and audience trust. California’s AI Transparency Act (SB-942) establishes labeling requirements for generative content, with an operative date of August 2, 2026.

These rules distinguish between manifest disclosures, which are visible labels, and latent disclosures, which embed technical provenance data inside files. The federal Take It Down Act, which criminalizes certain non-consensual deepfakes, further highlights the need for traceable origins. New state laws continue to focus on labeling and disclosure standards for AI content.

Practical Steps for Disclosure and Provenance

- Label AI-generated content in descriptions, captions, or overlays in a way that is clear and permanent where feasible.

- Use platforms that embed metadata, such as system provider, model version, and timestamps, directly into files.

- Maintain records of when and how AI tools generated specific assets, especially for paid campaigns.

- Recognize that SB-942 sets civil penalties of $5,000 per violation for covered providers, with each day of noncompliance counted as a separate violation, which makes reliable provenance a financial safeguard as well.

5. Use Creator-Centric AI Built for Monetization and Privacy

Creator businesses need AI workflows that support subscription platforms, fan communities, and brand deals without sacrificing control over likeness. Many general-purpose tools lack the consent management, approval flows, and model isolation that professional creators require.

Sozee.ai focuses on creator-first design, tying monetization workflows to strong privacy protections. Features such as separate SFW and NSFW funnels, reusable style bundles, and private model ownership help creators scale content while keeping legal, brand, and platform requirements in view.

Key Capabilities for Secure Monetization

- Private, creator-specific likeness models with clear ownership and control terms.

- Approval workflows that let agencies and managers review content before publication.

- Export options tailored to major platforms so that disclosures, sizing, and policies stay consistent.

- Compliance-aware pipelines that align with emerging digital identity and AI transparency rules.

- Create and monetize safely with Sozee while keeping your digital identity protected.
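An approval workflow of the kind listed above is essentially a small state machine. The Python below is a minimal sketch, assuming two hypothetical roles ("creator" and "manager") and a simple yes/no flag for whether an AI disclosure is attached; the state names, roles, and `ContentItem` class are illustrative, not Sozee.ai's or any platform's actual workflow.

```python
from enum import Enum


class Status(Enum):
    DRAFT = "draft"
    IN_REVIEW = "in_review"
    APPROVED = "approved"
    REJECTED = "rejected"
    PUBLISHED = "published"


# Allowed moves between states; anything not listed is refused.
TRANSITIONS = {
    Status.DRAFT: {Status.IN_REVIEW},
    Status.IN_REVIEW: {Status.APPROVED, Status.REJECTED},
    Status.REJECTED: {Status.DRAFT},
    Status.APPROVED: {Status.PUBLISHED},
    Status.PUBLISHED: set(),
}
REVIEW_DECISIONS = {Status.APPROVED, Status.REJECTED}


class ContentItem:
    """Hypothetical piece of AI-generated content moving through review."""

    def __init__(self, item_id: str, has_ai_disclosure: bool) -> None:
        self.item_id = item_id
        self.has_ai_disclosure = has_ai_disclosure
        self.status = Status.DRAFT
        self.audit = []  # (actor, role, new status) for every accepted move

    def move(self, new_status: Status, actor: str, role: str) -> None:
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot go from {self.status.value} to {new_status.value}")
        if new_status in REVIEW_DECISIONS and role != "manager":
            raise PermissionError("only a manager can approve or reject")
        if new_status is Status.PUBLISHED and not self.has_ai_disclosure:
            raise ValueError("missing AI disclosure; refusing to publish")
        self.status = new_status
        self.audit.append((actor, role, new_status.value))


post = ContentItem("post-001", has_ai_disclosure=True)
post.move(Status.IN_REVIEW, "creator-42", "creator")
post.move(Status.APPROVED, "agency-manager", "manager")
post.move(Status.PUBLISHED, "creator-42", "creator")
print(post.status.value)  # published
```

Putting the disclosure check on the final transition means no item can reach publication unlabeled, regardless of who approved it.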

Conclusion: Protect Your Digital Identity While You Scale

Digital identity now operates at the center of the AI creator economy. Creators and agencies that secure data provenance, consent, model isolation, content labeling, and monetization workflows can scale faster with less risk.

Claim your likeness today with Sozee.ai to combine advanced content generation with clear, enforceable privacy controls.

FAQ: Your Digital Identity and AI Privacy

How do regulations like California’s AI Transparency Act impact content creators?

These regulations require clear disclosure when content is AI-generated, using both manifest labels and embedded metadata. Creators and platforms that publish AI content need tools that support labeling and provenance so they can help audiences understand what is synthetic while also avoiding civil penalties for non-compliance.

Can generative AI data be considered personal information under privacy laws?

California AB 1008 clarifies that personal information can include data generated by AI systems. That interpretation extends protections to AI-generated likenesses and digital profiles, giving creators rights to access, delete, and restrict use of their AI-related data.

What protections exist if someone uses my digital likeness without consent?

Illinois’s Digital Voice and Likeness Protection Act limits contracts for digital replicas unless they describe specific uses, and Illinois’s amendment to the Right of Publicity Act restricts distribution of unauthorized digital replicas. The federal Take It Down Act adds criminal penalties for certain non-consensual intimate images and deepfakes. These laws create both civil and criminal remedies for misuse of digital likeness.

What should agencies look for in AI platforms to protect clients’ digital identities?

Agencies should favor platforms that provide isolated models per creator, transparent data provenance, detailed consent management, and built-in disclosure features. Documented audit trails for model training and usage, combined with approval workflows and clear restrictions on data sharing, help agencies protect clients while still delivering scalable AI content.