Key Takeaways

- LoRa (Low-Rank Adaptation) models adapt large AI systems efficiently, which supports creator-specific styles, personas, and brand looks without retraining full models.

- Compact LoRa files reduce storage, cost, and training time, which makes multi-brand and multi-creator workflows practical for agencies and independent creators.

- LoRa-based workflows help creators, agencies, and virtual influencer teams scale content output while maintaining visual consistency and brand alignment.

- High-quality, diverse training datasets and clear objectives improve LoRa model reliability and keep outputs realistic and on-brand.

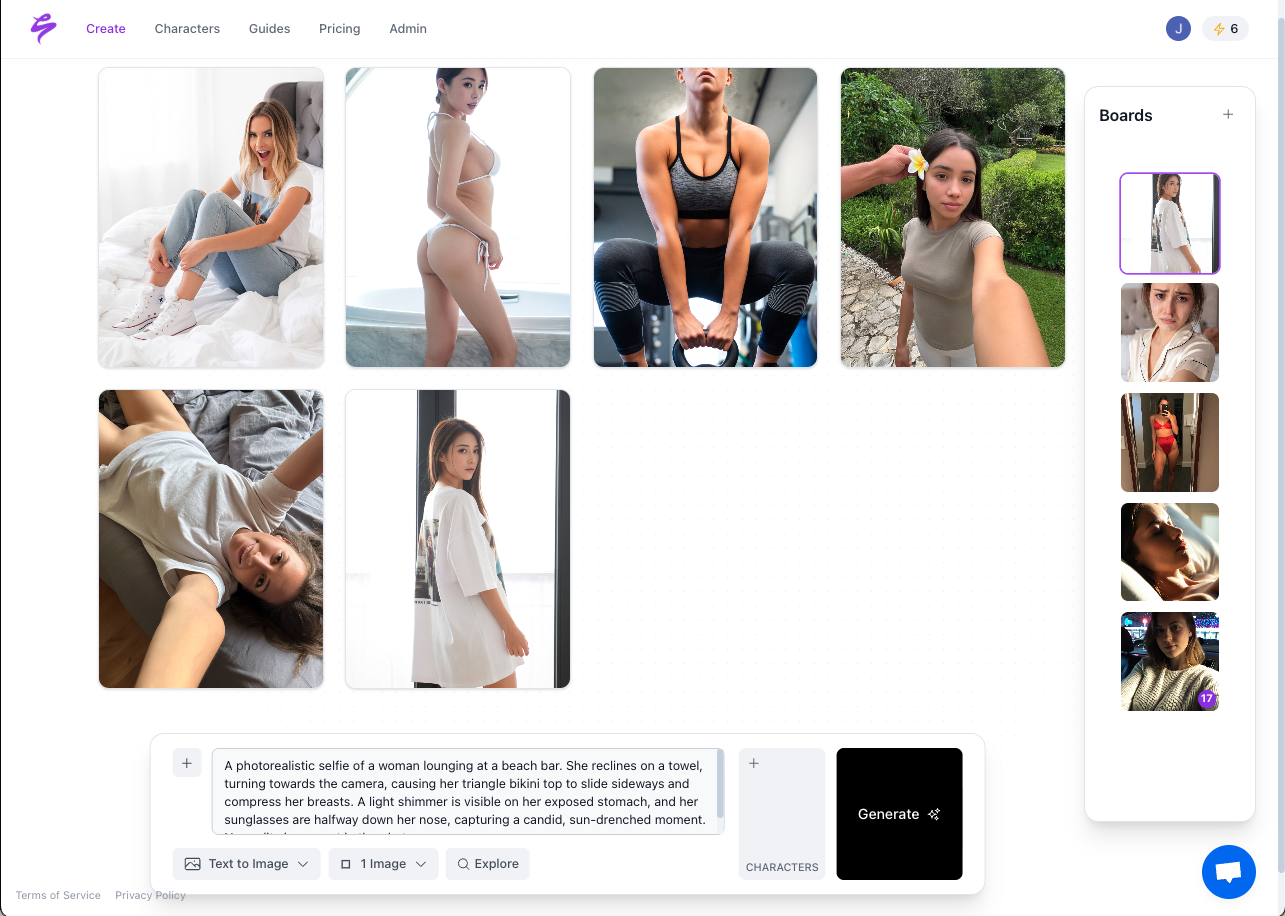

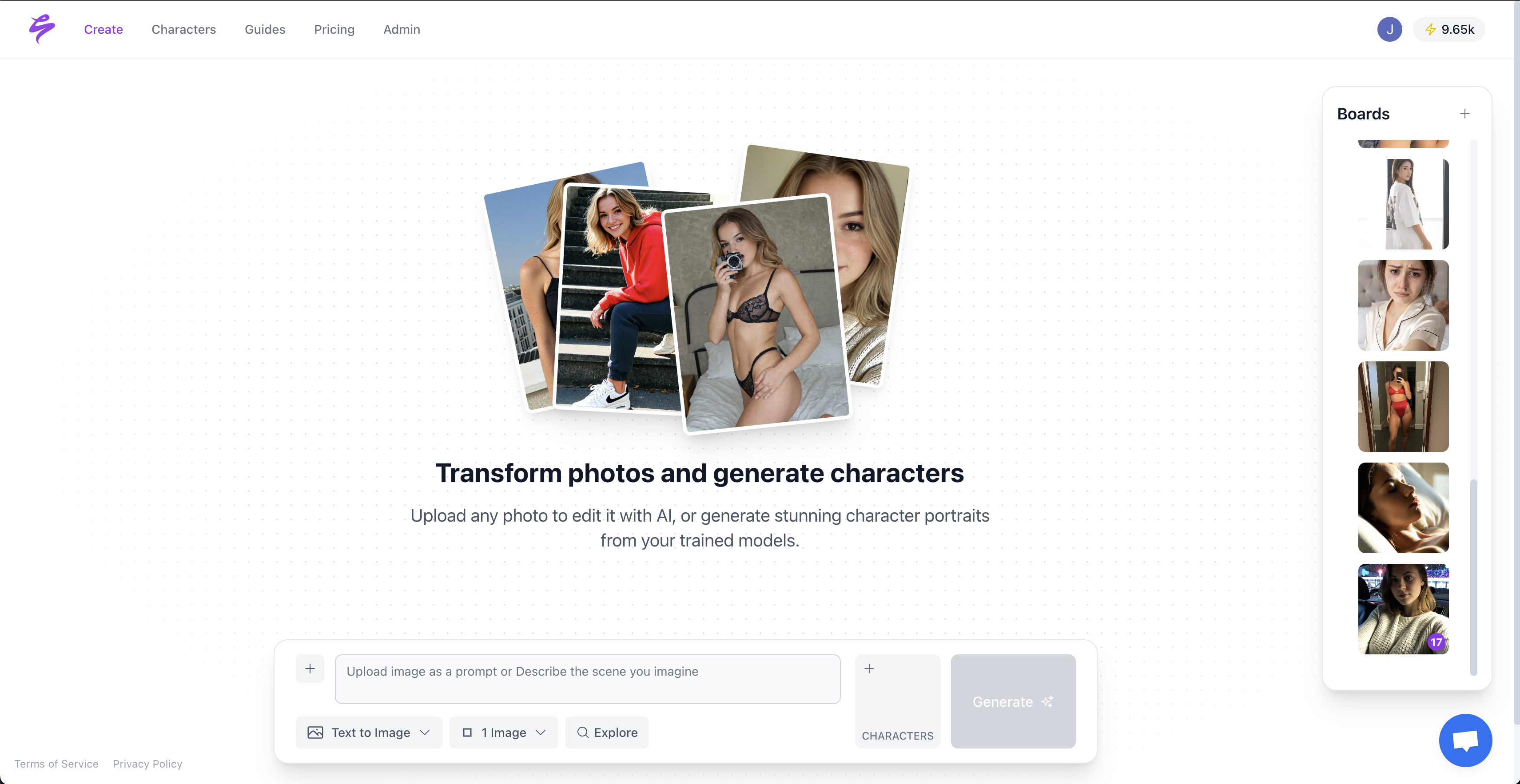

- Sozee.ai packages LoRa capabilities in an AI content studio built around creator monetization, privacy, and integration with existing content workflows.

- Creators who adopt LoRa tools can reduce production bottlenecks, protect their time, and create consistent photo-realistic content at scale.

What Are LoRa Models and How They Change Content Creation

Defining LoRa: Low-Rank Adaptation Explained Simply

LoRa (Low-Rank Adaptation, usually written LoRA in the machine learning literature) is a method for adapting large AI models. At its core, LoRa keeps the model's original weight matrices frozen and adds small, trainable low-rank matrices alongside them. The product of these matrices acts as a compact update to the frozen weights, which enables efficient, targeted adaptation without altering the base model. This setup works like adding a specialized lens to a powerful camera: the main system stays intact, while the new component controls targeted behavior.
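In code, the core idea fits in a few lines. The NumPy sketch below models a single linear layer: `W` is the frozen base weight, `A` and `B` are the small trainable matrices, and `r` is the rank. The initialization (`A` small and random, `B` all zeros) and the `alpha / r` scaling follow the convention described in the original LoRA paper; the shapes and values are illustrative assumptions, not settings from any real model.

```python
import numpy as np

# Minimal sketch of one LoRa-adapted linear layer (illustrative shapes, not a real model).
rng = np.random.default_rng(0)
d_out, d_in, r = 8, 6, 2       # r is the low rank, much smaller than d_out and d_in
alpha = 4.0                    # scaling hyperparameter; the update is scaled by alpha / r

W = rng.normal(size=(d_out, d_in))      # frozen base weight (never updated)
A = rng.normal(size=(r, d_in)) * 0.01   # trainable down-projection, small random init
B = np.zeros((d_out, r))                # trainable up-projection, zero init

def lora_forward(x: np.ndarray) -> np.ndarray:
    """Base output plus the scaled low-rank correction: W x + (alpha / r) * B (A x)."""
    return W @ x + (alpha / r) * (B @ (A @ x))

x = rng.normal(size=d_in)
# Because B starts at zero, the adapted layer initially behaves exactly like the base layer.
print(np.allclose(lora_forward(x), W @ x))
```

Starting from `B = 0` means training begins from the unmodified base model and only gradually learns the targeted change.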

The technical approach focuses on efficiency. LoRa freezes the base model and updates only the added matrices, which typically amount to a small fraction of the parameters that traditional full fine-tuning would train. This reduces both compute and memory requirements during training, so creators can get tailored results without the large computing costs that full-model training usually requires.
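The savings are easy to quantify for a single weight matrix. Fine-tuning a d × k matrix directly trains d·k numbers, while a rank-r LoRa update trains only r·(d + k): a d × r matrix plus an r × k matrix. The layer size and rank below are illustrative assumptions.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when fine-tuning a d x k weight matrix directly."""
    return d * k

def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRa update: B is d x r, A is r x k."""
    return r * (d + k)

d = k = 4096   # illustrative layer size
r = 8          # illustrative LoRa rank
full = full_params(d, k)
lora = lora_params(d, k, r)
print(full, lora, f"{lora / full:.2%}")   # 16777216 65536 0.39%
```

For this hypothetical layer, the adapter trains well under 1% of the parameters that full fine-tuning would touch.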

LoRa is especially useful for creators because it runs on common hardware. After training, the low-rank update can be merged into the base model's weights, or applied alongside them at little extra cost, so generation speed and GPU memory use stay close to those of the base model. This makes deployment feasible even on consumer-grade hardware. Independent creators and small agencies can use custom models without investing in enterprise-level infrastructure.
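The reason inference stays cheap is that the learned update can be folded into the base weight once, after training. The NumPy sketch below, using random stand-ins for trained matrices, checks that the merged weight produces the same outputs as applying the adapter separately. Whether a particular tool merges adapters or keeps them separate is an implementation choice; this only illustrates the arithmetic.

```python
import numpy as np

rng = np.random.default_rng(1)
d_out, d_in, r, alpha = 8, 6, 2, 4.0
W = rng.normal(size=(d_out, d_in))   # frozen base weight
A = rng.normal(size=(r, d_in))       # "trained" LoRa factors (random stand-ins here)
B = rng.normal(size=(d_out, r))
scale = alpha / r

# Merge once after training: the result has exactly the same shape as the base weight.
W_merged = W + scale * (B @ A)

x = rng.normal(size=(d_in, 5))               # a small batch of inputs, one per column
unmerged = W @ x + scale * (B @ (A @ x))     # base matmul plus two small extra matmuls
merged = W_merged @ x                        # a single matmul, same cost as the base layer
print(np.allclose(unmerged, merged))
```

Keeping the adapter separate costs a little extra compute but makes it easy to switch adapters; merging removes that overhead for a fixed persona or style.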

The Compact Power of LoRa for Creators and Agencies

One key benefit of LoRa for content creators is its efficient storage and management. LoRa models are typically small in storage size, ranging from a few MBs to a few hundred MBs, making them manageable for creators who need large libraries of custom models. This compactness helps agencies that support multiple creator personas or brands.
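Small file sizes follow directly from the parameter count: each adapted layer stores r × (d + k) numbers. The estimator below is a rough sketch that assumes half-precision storage (2 bytes per parameter) and ignores file metadata. The layer list is hypothetical and does not describe any specific model's architecture.

```python
def lora_file_size_mb(layer_shapes, rank, bytes_per_param=2):
    """Approximate adapter size in MB: r * (d + k) parameters per adapted layer.

    bytes_per_param=2 assumes half-precision (fp16/bf16) storage; metadata is ignored.
    """
    params = sum(rank * (d + k) for d, k in layer_shapes)
    return params * bytes_per_param / 1_000_000

# Hypothetical model: 64 adapted 640 x 640 projections and 64 adapted 1280 x 1280 projections.
shapes = [(640, 640)] * 64 + [(1280, 1280)] * 64
for rank in (4, 16, 64):
    print(rank, round(lora_file_size_mb(shapes, rank), 1))   # 2.0, 7.9, 31.5 MB
```

File size grows linearly with the rank and with the number of adapted layers, which is why adapters span a range from a few MBs to a few hundred MBs.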

The implementation focuses on precision and resource efficiency. In models like Stable Diffusion, LoRa adapters are commonly applied to the attention layers, especially the cross-attention layers that connect the text prompt to the image features. Adapting these layers is often enough to capture a specific style, theme, or visual character that would otherwise require a full model checkpoint with much larger storage and compute needs. Content creators can maintain many personas, styles, or brand looks without overloading their systems.
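Choosing which layers receive adapters is usually a configuration step (for example, a `target_modules` list in Hugging Face PEFT's `LoraConfig`). The pure-Python sketch below shows the selection logic on a hypothetical list of module names written in the style of diffusers UNet attention blocks, where `attn1` is self-attention and `attn2` is cross-attention. The names are illustrative and not taken from a real model dump.

```python
# Hypothetical module names in the style of diffusers UNet attention blocks.
module_names = [
    "down_blocks.0.attentions.0.transformer_blocks.0.attn1.to_q",
    "down_blocks.0.attentions.0.transformer_blocks.0.attn2.to_q",
    "down_blocks.0.attentions.0.transformer_blocks.0.attn2.to_k",
    "down_blocks.0.attentions.0.transformer_blocks.0.attn2.to_v",
    "down_blocks.0.attentions.0.transformer_blocks.0.attn2.to_out.0",
    "down_blocks.0.resnets.0.conv1",
]

# Attention projection layers (query, key, value, output) that adapters typically wrap.
PROJECTIONS = ("to_q", "to_k", "to_v", "to_out.0")

def select_targets(names, cross_attention_only=True):
    """Return the attention projection layers that would receive LoRa adapters."""
    selected = []
    for name in names:
        if not name.endswith(PROJECTIONS):
            continue  # skip convolutions and other non-attention layers
        if cross_attention_only and ".attn2." not in name:
            continue  # skip self-attention when only cross-attention is targeted
        selected.append(name)
    return selected

for name in select_targets(module_names):
    print(name)
```

Targeting only cross-attention keeps the adapter small; including self-attention as well gives the adapter more capacity at the cost of a larger file.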

Why LoRa Models Help Resolve the Content Crisis in the Creator Economy

Scaling Content Output Without Sacrificing Quality

The content crisis stems from a mismatch between creator capacity and audience demand. LoRa models address this gap by enabling consistent, high-volume content generation while maintaining the quality and authenticity that audiences expect. Because the base weights stay frozen, LoRa lets creators fine-tune large models for specific outputs with a much lower risk of catastrophic forgetting: the base model's general knowledge and capabilities remain intact, and removing the adapter restores the original model.

This preservation of base model integrity means creators can generate large numbers of content variations while keeping quality, style, and brand identity consistent. Whether you publish daily social posts or long-form premium content, LoRa helps each piece stay aligned with a clear visual standard.
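To see why the base model stays intact, here is a toy training loop in NumPy. It is a sketch under simplifying assumptions (one linear layer, synthetic data, plain gradient descent instead of the optimizers real trainers use). Gradients are applied only to the adapter factors `A` and `B`, so the frozen weight `W` is identical before and after training.

```python
import numpy as np

rng = np.random.default_rng(2)
d, k, r, n = 6, 5, 2, 20
W = rng.normal(size=(d, k))                   # frozen base weight
W_before = W.copy()                           # snapshot to verify W never changes
X = rng.normal(size=(k, n)) / np.sqrt(n)      # toy inputs, one column per example
delta_true = rng.normal(size=(d, r)) @ rng.normal(size=(r, k))
Y = (W + delta_true) @ X                      # toy targets reachable by a rank-r update

A = rng.normal(size=(r, k)) * 0.1             # trainable, small random init
B = np.zeros((d, r))                          # trainable, zero init: training starts at the base model

def loss(A, B):
    """Half squared error of the adapted layer (W + B A) on the toy data."""
    E = (W + B @ A) @ X - Y
    return 0.5 * float(np.sum(E ** 2))

lr = 0.005
initial_loss = loss(A, B)
for _ in range(300):
    E = (W + B @ A) @ X - Y                   # residual, shape (d, n)
    grad_B = E @ (A @ X).T                    # dL/dB, shape (d, r)
    grad_A = B.T @ E @ X.T                    # dL/dA, shape (r, k)
    B -= lr * grad_B                          # only the adapter factors are updated;
    A -= lr * grad_A                          # W is read but never written
final_loss = loss(A, B)

print(f"loss {initial_loss:.3f} -> {final_loss:.3f}")
print(np.array_equal(W, W_before))            # base weights are bit-for-bit unchanged
```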

Cost-Efficiency, Accessibility, and Speed for Custom Content

Traditional AI model training has been expensive and time-consuming for most creators and small agencies. LoRa lowers that barrier: because it trains only a small set of added parameters, it substantially reduces the cost and time that agencies or independent creators need to deploy custom AI applications for branded, on-demand content.

The speed advantage is significant. Traditional fine-tuning can take days or weeks, while LoRa adaptation often completes in minutes to hours. In fast-moving social environments, this speed supports timely responses to trends, improves testing cycles, and opens more opportunities to monetize content.

Ensuring Brand Consistency and Hyper-Realistic Personalization

Brand consistency is central to creator monetization, and LoRa supports this need. Training a LoRa model allows embedding unique features such as a person’s face or an artist’s style into a base model by using a relatively small, curated dataset. This approach makes highly personalized content feasible even for creators with limited resources.

This capability enables LoRa-trained models to generate diverse scenario-based content that stays visually consistent with the original brand or persona. Whether you prepare content for new platforms, seasonal campaigns, or special promotions, LoRa helps maintain clear brand alignment across all outputs.

LoRa Models vs. Traditional Fine-Tuning: A Comparison

| Feature | LoRa Models | Traditional Fine-Tuning | Creator Impact |
|---|---|---|---|
| Storage Size | Small (MBs to 100s of MBs) | Large (GBs) | Easy management, multiple personas |
| Training Cost | Low | High | Accessible to independent creators |
| Training Time | Fast (minutes to hours) | Slow (days to weeks) | Rapid iteration and testing |
| Flexibility | High (easily swap models) | Low (full model swap needed) | Supports multi-brand content strategies |
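The flexibility advantage comes down to this: one frozen base model can serve many small adapters, and switching persona means picking a different (B, A) pair instead of loading a different full model. The NumPy sketch below illustrates the idea with a single linear layer; the adapter names and the `forward` helper are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
d_out, d_in, r = 8, 6, 2
W_base = rng.normal(size=(d_out, d_in))   # one shared, frozen base weight

# Hypothetical adapter library: each persona or brand look is just a small (B, A) pair.
adapters = {
    name: (rng.normal(size=(d_out, r)), rng.normal(size=(r, d_in)))
    for name in ("persona_a", "persona_b", "brand_look")
}

def forward(x, adapter=None, scale=1.0):
    """Run the shared base layer, optionally with one named adapter applied on top."""
    y = W_base @ x
    if adapter is not None:
        B, A = adapters[adapter]
        y = y + scale * (B @ (A @ x))
    return y

x = rng.normal(size=d_in)
# Swapping personas is a dictionary lookup; the base weight is never copied or reloaded.
outputs = {name: forward(x, name) for name in adapters}
```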

Practical Applications: How LoRa Models Empower Diverse Creators and Agencies

For Agencies: Scaling Content Production and Creator Retention

Agencies must scale content production across many talents while maintaining quality and consistency. LoRa models change how agencies operate by supporting predictable content streams that improve revenue stability and lower business risk. Get started with scalable content generation and modernize your agency’s production model.

- Predictable content streams. With LoRa models, agencies can maintain content output even when creators are traveling, unavailable, or on break. This predictability strengthens client relationships, supports more accurate revenue forecasting, and reduces operational stress.

- Faster content fulfillment. Client requests and trend-driven opportunities can be addressed quickly without arranging shoots, scouting locations, or coordinating large teams. Agencies can A/B test concepts at low cost before committing to full productions.

- Improved creator retention. Lowering the content production burden on talent can increase creator satisfaction, reduce burnout, and support higher earnings potential. Stronger creator relationships lead to longer partnerships and more stable rosters.

For Top Creators: Reclaiming Time and Supporting Sustainable Growth

Established creators often spend most of their time on production, which leaves less capacity for strategy, business building, or personal life. LoRa models help rebalance that workload.

- Content in minutes. Creators can generate large batches of high-quality content in a short session. There is less reliance on travel, large lighting setups, or extensive teams, which makes content pipelines more predictable.

- More time for life and business. Creators can maintain consistent posting schedules while taking time off, focusing on new product lines, or working on long-term projects. LoRa allows the channel to stay active while the creator manages other priorities.

- Broader reach with the same time investment. Higher content volume creates more audience touchpoints, more campaign slots, and more premium content options. Revenue can grow without a matching increase in production hours.

For Anonymous and Niche Creators: Privacy and Creative Flexibility

Many creators prioritize privacy or work in niches that require elaborate sets, props, or costumes. LoRa models offer a way to create consistent, detailed content without exposing identity or building complex physical environments.

- Total anonymity. Creators can publish compelling content without revealing their face or physical identity. This structure supports privacy-focused creators and those who operate in sensitive categories.

- Rich, imaginative worlds. LoRa models can represent fantasy settings, cosplay themes, or stylized universes without the cost of building them in the real world. Production constraints become less restrictive, so creative direction can lead.

For Virtual Influencer Builders: Consistency, Realism, and Scalability

Virtual influencers are an important part of digital brand building, yet maintaining consistency and realism has often required heavy technical investment. LoRa models reduce that burden.

- Consistent character portrayal. Virtual influencers can maintain a stable visual identity across content, which supports audience trust and long-term storytelling.

- Scalable production. Teams can generate daily posts, react to trends, and test new narrative directions without relying on slow traditional animation or CGI pipelines.

Best Practices for Maximizing LoRa Model Effectiveness in Content Generation

Curate High-Quality and Diverse Datasets

Effective LoRa models start with strong data. Best practices include curating a high-quality, diverse, and representative dataset for each creator or client persona to reduce overfitting and support realistic, authentic outputs.

Teams get better results when they include variety in lighting conditions, camera angles, expressions, and environments during data collection. This diversity helps LoRa models generate convincing content in many scenarios and across platforms.
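A simple way to act on this advice is to tag each training image and check how evenly the tags are spread before training. The sketch below assumes hypothetical per-image metadata with `lighting` and `angle` fields and flags any value that makes up more than half of the set; both the tag names and the 50% threshold are illustrative choices.

```python
from collections import Counter

# Hypothetical metadata for a small training set; tag names are illustrative.
dataset = [
    {"file": "img_01.jpg", "lighting": "daylight", "angle": "front"},
    {"file": "img_02.jpg", "lighting": "daylight", "angle": "three_quarter"},
    {"file": "img_03.jpg", "lighting": "studio", "angle": "front"},
    {"file": "img_04.jpg", "lighting": "daylight", "angle": "front"},
    {"file": "img_05.jpg", "lighting": "low_light", "angle": "profile"},
]

def coverage_report(items, field, max_share=0.5):
    """Count values of one metadata field and flag any value that dominates the set."""
    counts = Counter(item[field] for item in items)
    total = len(items)
    dominant = [value for value, c in counts.items() if c / total > max_share]
    return counts, dominant

for field in ("lighting", "angle"):
    counts, dominant = coverage_report(dataset, field)
    print(field, dict(counts), "over-represented:", dominant)
```

Here both daylight shots and front-facing angles make up 60% of the set, a hint to add more varied images before training.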

Define Clear Objectives and Use Iterative Refinement

Clear objectives help each LoRa model perform a focused role. Teams can decide whether the priority is facial consistency, style replication, brand alignment, or a mix of these. Defined goals guide training choices and make evaluation more straightforward.

Iterative refinement improves results over time. LoRa’s fast training cycles allow creators and agencies to adjust datasets, prompts, or parameters between runs and respond quickly to changing content needs.
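Iteration works best when each run's settings are recorded, so the change between runs is deliberate and traceable. The sketch below uses a hypothetical `LoraRunConfig` dataclass; its field names are illustrative and do not correspond to any specific training tool, and the default values are placeholders rather than recommendations.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class LoraRunConfig:
    """Hypothetical settings for one LoRa training run (illustrative fields, placeholder values)."""
    dataset_dir: str
    rank: int = 16
    alpha: float = 16.0
    learning_rate: float = 1e-4
    max_steps: int = 1500

history = []
run = LoraRunConfig(dataset_dir="datasets/persona_a_v1")
history.append(run)

# Iteration 2: outputs looked over-fitted (same pose every time), so train for fewer steps.
run = replace(run, max_steps=1000)
history.append(run)

# Iteration 3: refreshed dataset with more lighting variety; other settings unchanged.
run = replace(run, dataset_dir="datasets/persona_a_v2")
history.append(run)

for i, cfg in enumerate(history, start=1):
    print(i, cfg.dataset_dir, cfg.rank, cfg.max_steps)
```

Changing one setting per iteration makes it easier to attribute an improvement, or a regression, to a specific change.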

Integrate LoRa Into Existing Workflows

Successful LoRa use fits into existing content and monetization workflows. Planning where LoRa output will appear in social schedules, membership content, campaigns, and asset libraries keeps production efficient.

Sozee.ai addresses this need with features such as SFW-to-NSFW funnel exports, agency approval flows, a curated prompt library, and platform-optimized outputs that align with established creator business models.

Overcoming Common Challenges with LoRa Model Implementation

Addressing Dataset Quality and Preventing Overfitting

A common challenge in LoRa implementation relates to training data quality. Biased, low-quality, or narrow datasets can lead to inconsistent or unrealistic outputs. Overfitting occurs when models become too specific to their training data, which limits flexibility.

Creators and agencies can reduce these issues by building diverse, high-quality training sets and reviewing outputs for early signs of overfitting. Regular tests with a wide range of prompts help expose gaps and guide corrective updates.
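One practical way to run these regular tests is a fixed grid of prompts that combines the trained subject with scenes and framings that were not in the training set. The sketch below builds such a grid; `persona_a` stands in for whatever trigger word a given LoRa was trained with, and the prompt components are hypothetical. Re-running the same grid after each training iteration makes it easier to spot overfitting, for example when every output copies a training background or ignores the requested setting.

```python
from itertools import product

# Hypothetical prompt components; "persona_a" stands in for a LoRa's trigger word.
subjects = ["photo of persona_a"]
settings = ["on a city street at night", "in a sunlit kitchen", "on a beach"]
framings = ["close-up portrait", "full-body shot"]
lighting = ["natural light", "studio lighting"]

test_prompts = [", ".join(parts) for parts in product(subjects, settings, framings, lighting)]
print(len(test_prompts))   # 1 * 3 * 2 * 2 = 12
print(test_prompts[0])     # photo of persona_a, on a city street at night, close-up portrait, natural light
```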

Managing Technical Complexity While Preserving Artistry

LoRa is more accessible than full-model fine-tuning, but there is still technical detail to manage. Many creators prefer to focus on creative direction rather than model configuration.

Solutions like Sozee.ai offer interfaces that handle most of the technical steps behind the scenes. Creators can concentrate on prompts, visual direction, and strategy instead of infrastructure. The goal is to keep the creator’s voice and style at the center while using AI for scale and consistency.

LoRa models work best as tools that extend creativity rather than replace it. Start your unlimited content journey with Sozee.ai and explore how LoRa-based workflows can support your creative direction and business goals.

Frequently Asked Questions About LoRa Models for Unlimited Content Generation

Do LoRa models achieve hyper-realistic content that can stand alongside real shoots?

Well-trained LoRa models can produce content that closely resembles traditional photography or videography. Outcomes depend on dataset quality, training settings, and post-processing workflows. Sozee.ai focuses its LoRa implementation on high levels of realism so that outputs align with what audiences expect from professional creator content.

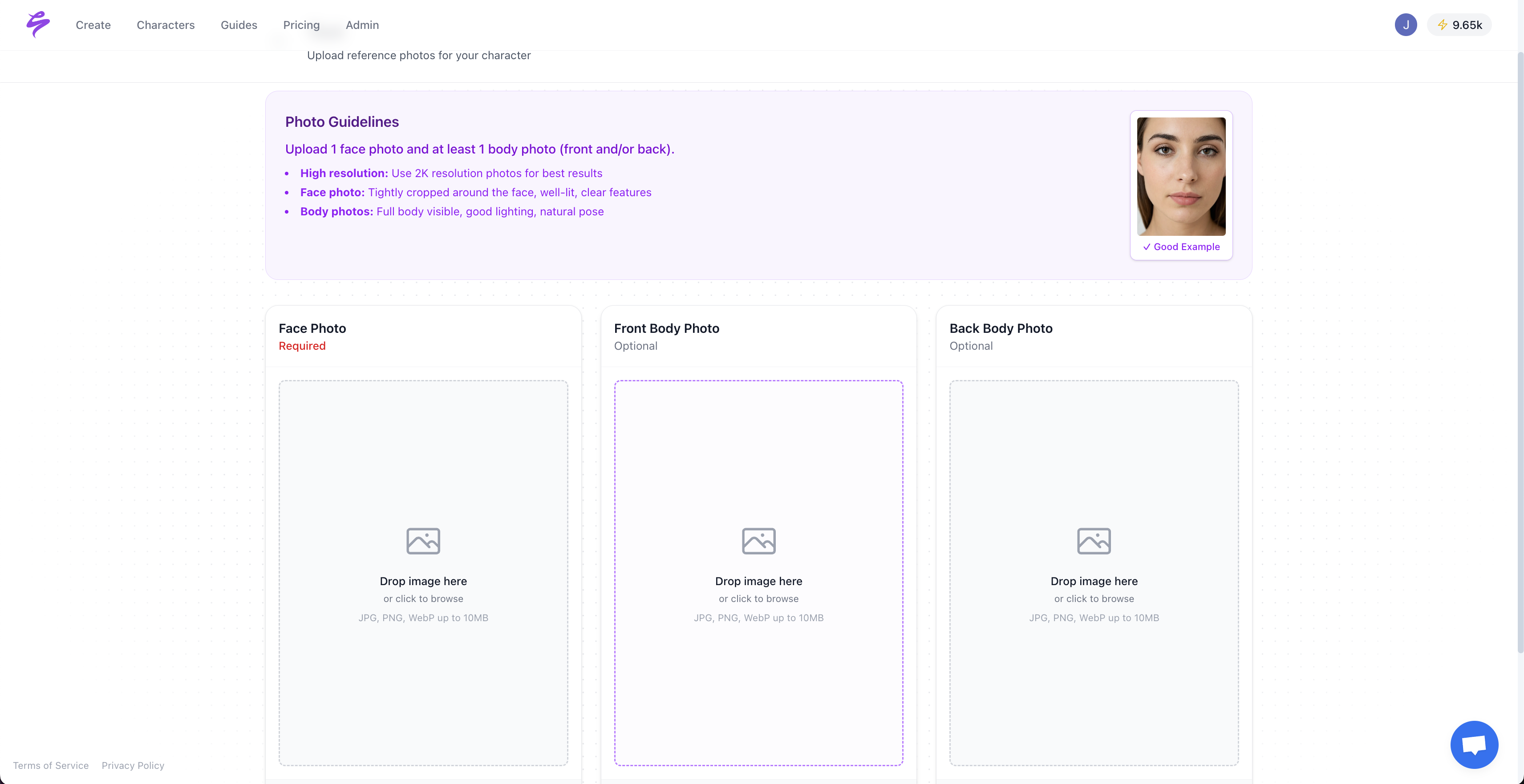

How much input data is needed to train an effective LoRa model for consistent results?

LoRa models are data-efficient compared to many traditional fine-tuning methods. Many use cases reach strong results with 15 to 30 high-quality images, while 50 to 100 images can improve consistency and versatility. Image quality and diversity matter more than raw quantity. Sozee.ai optimizes this process further and can begin generating consistent, high-quality content with as few as three photos.
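Dataset size also interacts with how long a model trains. Some community trainers use a per-image "repeats" setting, so total optimizer steps work out to images × repeats × epochs ÷ batch size. The helper below is a hypothetical sketch of that arithmetic under this assumption, and the settings are illustrative rather than recommended.

```python
import math

def total_steps(num_images, repeats, epochs, batch_size):
    """Optimizer steps when each image is seen `repeats` times per epoch (assumed convention)."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs

# Illustrative settings only, not recommendations.
print(total_steps(num_images=20, repeats=10, epochs=5, batch_size=2))   # 100 per epoch -> 500
print(total_steps(num_images=80, repeats=3, epochs=5, batch_size=2))    # 120 per epoch -> 600
```

A larger dataset typically needs fewer repeats per image to reach a similar total amount of training.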

Is using LoRa models for daily content generation expensive or resource-intensive?

LoRa models are designed with efficiency in mind. Their relatively small file sizes and modest computational requirements make them suitable for frequent use, even on consumer-grade hardware. Operating costs are often lower than traditional content production that relies on equipment, studios, styling, and location fees. For many creators, total costs per asset decrease while overall quality remains competitive.

Can LoRa models be used securely for both SFW and NSFW content creation?

LoRa models can support both SFW and NSFW content workflows when managed in a secure environment. Each creator’s model can remain isolated so that likenesses are not shared, reused, or exposed without consent. Sozee.ai emphasizes privacy and security by keeping creator likenesses separate, preventing cross-training between creators, and applying workflow controls for SFW and NSFW content to protect both safety and brand integrity.

How do LoRa models ensure consistent brand and character portrayal over time?

LoRa models encode specific visual characteristics, which can include facial features, body proportions, and stylistic elements. That structure helps content stay consistent across large batches of outputs. Unlike traditional shoots where lighting and conditions vary widely, LoRa models can maintain stable identity traits while still allowing creative variation in poses, environments, and scenarios. Periodic retraining or updates can incorporate new style choices while preserving the core identity.
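Many tools also let users apply several adapters at once with separate strengths, for example a persona's identity adapter at full weight plus a seasonal style adapter at reduced weight. For a linear layer, the underlying arithmetic is a weighted sum of low-rank updates. The NumPy sketch below shows this with hypothetical adapters; actual image quality when combining adapters depends on much more than this arithmetic.

```python
import numpy as np

rng = np.random.default_rng(4)
d_out, d_in, r = 8, 6, 2
W = rng.normal(size=(d_out, d_in))   # frozen base weight

# Two hypothetical adapters: one for a persona's identity, one for a seasonal style.
identity = (rng.normal(size=(d_out, r)), rng.normal(size=(r, d_in)))
style = (rng.normal(size=(d_out, r)), rng.normal(size=(r, d_in)))

def effective_weight(weighted_adapters):
    """Base weight plus a weighted sum of low-rank updates: W + sum_i w_i * B_i A_i."""
    W_eff = W.copy()
    for weight, (B, A) in weighted_adapters:
        W_eff += weight * (B @ A)
    return W_eff

# Identity at full strength, style blended in at half strength.
W_campaign = effective_weight([(1.0, identity), (0.5, style)])
# Setting the style weight to 0 leaves only the identity adapter's effect.
W_style_off = effective_weight([(1.0, identity), (0.0, style)])
print(np.allclose(W_style_off, W + identity[0] @ identity[1]))
```

Because the identity adapter's contribution is unchanged by the style weight, the core likeness stays stable while the style can be dialed up or down.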

Conclusion: Scale Content with LoRa Models and Sozee.ai

The content demands placed on creators, agencies, and virtual influencer teams now exceed what traditional production models can comfortably support. LoRa models offer a practical way to expand content output, reduce bottlenecks, and keep visual quality high without matching that scale with in-person shoots.

Through LoRa models, creators can protect their time while maintaining consistent quality. Agencies can plan predictable pipelines without being limited by travel schedules or studio availability. Virtual influencer builders can manage stable, engaging characters, and anonymous or niche creators can publish rich content without revealing their identity or building complex sets.

Sozee.ai applies LoRa technology inside an AI content studio designed around creator monetization. The platform combines privacy-focused model handling, workflow features for agencies and individual creators, and outputs that aim to match the expectations set by professional shoots.

Creators who adopt scalable production tools will be better positioned to meet audience demand and grow their businesses. Discover how Sozee.ai uses LoRa models to support your content strategy and start building a more flexible, sustainable content pipeline.