Key Takeaways

- AI image generators expose creators to serious legal risks, including copyright liability tied to tainted training data, a problem behind more than 70 infringement lawsuits filed by 2025.

- Purely AI-generated outputs cannot be copyrighted, which leaves that content unprotected. Separately, right of publicity and deepfake laws such as California's AB 621 allow damages of up to $250,000 for non-consensual intimate deepfakes.

- Ethical issues like devaluation of human artistry, algorithmic bias, and trust erosion can damage creator reputations and fan relationships.

- Creators can reduce risk by using private, consent-based AI tools, hybrid human-AI creation, and clear disclosure to platforms.

- Scale safely without legal exposure by signing up for Sozee’s private likeness models designed for compliant creator workflows.

Top 5 Legal Risks Creators Face with AI Image Generators

The legal landscape for AI-generated content has turned into a minefield for creators. At least four dozen significant federal court cases in 2025 involved AI companies' use of copyrighted content. These are the five most dangerous legal risks now threatening creators.

| Legal Risk | Creator Impact | Example |
|---|---|---|
| Copyright Infringement | Lawsuits, damages, content removal | 70+ AI infringement lawsuits filed by 2025 |
| Uncopyrightable Outputs | No IP protection, revenue loss | US Copyright Office 2025 rulings |
| Right of Publicity | Likeness violations, damages | California AB 621 damages up to $250,000 |
| Contract Breaches | Agency disputes, fan chargebacks | OnlyFans authenticity requirements |
| Deepfake and Synthetic Media | Criminal and civil penalties | 40+ laws enacted across 25+ states by 2024 |

1. Copyright Infringement from Training Data: More than 70 AI infringement lawsuits were filed by 2025 over generative AI models trained on copyrighted works without permission. In September 2025, for example, Disney, Universal, and Warner Bros. sued China-based Minimax for using their copyrighted works to train Hailuo AI. Creators who publish infringing outputs from these tools can face infringement claims of their own.

2. Uncopyrightable AI Outputs: The US Copyright Office has repeatedly ruled that purely AI-generated works, created without sufficient human authorship, cannot receive copyright protection. Creators therefore cannot stop others from copying or reusing purely AI-generated content, which severely weakens long-term monetization.

3. Right of Publicity Violations: AI images of real people without consent can violate publicity rights. New York’s 2026 laws require clear disclosure when synthetic performers appear in advertising. California’s AB 621 adds potential damages up to $250,000 for non-consensual intimate deepfakes.

4. Contract Breaches with Platforms: OnlyFans and similar platforms now push for authentic, creator-produced content. Undisclosed AI-generated images can trigger contract violations, account suspensions, and revenue clawbacks.

5. Deepfake and Synthetic Media Liabilities: After the Taylor Swift deepfake scandal, more than 25 states had enacted at least 40 new laws by 2024 that criminalize AI-generated explicit content. Creators who ignore these rules risk criminal and civil penalties.

Ethical Red Flags Creators Face with AI Images

Ethical missteps with AI can destroy a creator’s reputation and fan trust, even when no lawsuit appears. These issues hit hardest for creators whose income depends on authenticity and personal connection.

• Devaluation of Human Artistry: The "Stealing Isn't Innovation" campaign, launched January 22, 2026, protests the illegal use of copyrighted works for AI training. Creators argue that floods of AI content devalue human creativity and threaten long-term livelihoods.

• Algorithmic Bias and Stereotyping: AI image generators often repeat bias from training data that reinforces gender, race, or cultural stereotypes. This pattern can harm creators from marginalized communities and distort representation across platforms.

• Trust Erosion Through Misinformation: Real-world controversies such as the DeepNude app and deepfake-driven fake news show how easily AI images can be abused. These scandals erode public trust in all AI-generated content, including content from ethical creators.

• Privacy Violations: AI recreations of individuals without consent raise serious privacy concerns. Problems escalate when realistic images appear in commercial or intimate contexts without clear permission.

For OnlyFans creators and adult content producers, these ethical issues combine into an intense “AI flood” problem. Cheap, mass-produced AI content undercuts premium human-created work and threatens the sustainability of the entire creator economy.

Practical Ways Creators Can Reduce AI Risk in 2026

Leading creators now use clear risk mitigation strategies so they can benefit from AI while avoiding legal and ethical traps. The table below outlines a simple roadmap for safer AI adoption.

| Risk Category | Mitigation Strategy |
|---|---|
| Copyright Infringement | Use private likeness models with consent-based training |
| Uncopyrightable Outputs | Combine AI with human creativity for hybrid works |
| Publicity Rights | Generate only your own likeness with compliant tools |
| Platform Compliance | Disclose AI use according to platform rules |

1. Choose Private, Consent-Based AI Tools: Avoid public AI generators trained on scraped data. Instead, use platforms that build private models from your own photos with explicit consent and clear data controls.

2. Implement Clear Disclosure: Design persistent, understandable indicators for AI-generated content so you meet new disclosure rules and keep fan trust intact.

3. Audit Your AI Workflow: Review AI tools for copyright risks in both training data and outputs. This habit prepares you for emerging licensing systems and ongoing litigation.

4. Focus on Hybrid Creation: Blend AI generation with human direction, editing, and curation. This approach can make the human-authored elements of your work eligible for copyright protection and keeps your content aligned with your authentic style.

Start creating safely now with compliant AI tools built specifically for creator workflows and legal protection.

Scale Safely with Sozee.ai as Your Private AI Partner

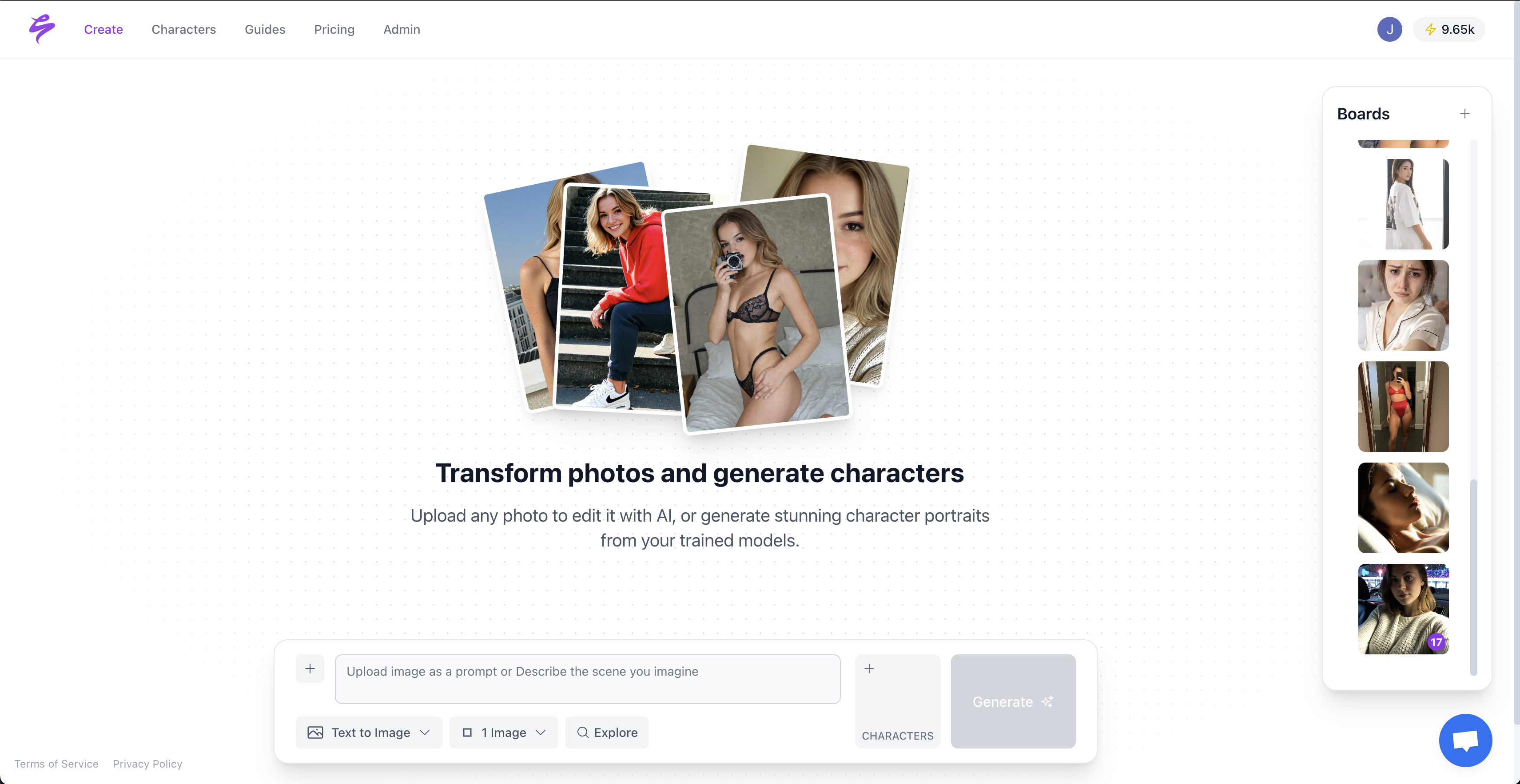

General-purpose AI generators often expose creators to legal risk and ethical controversy. Sozee.ai offers a different path with private, creator-first AI that solves the content crunch without those dangers.

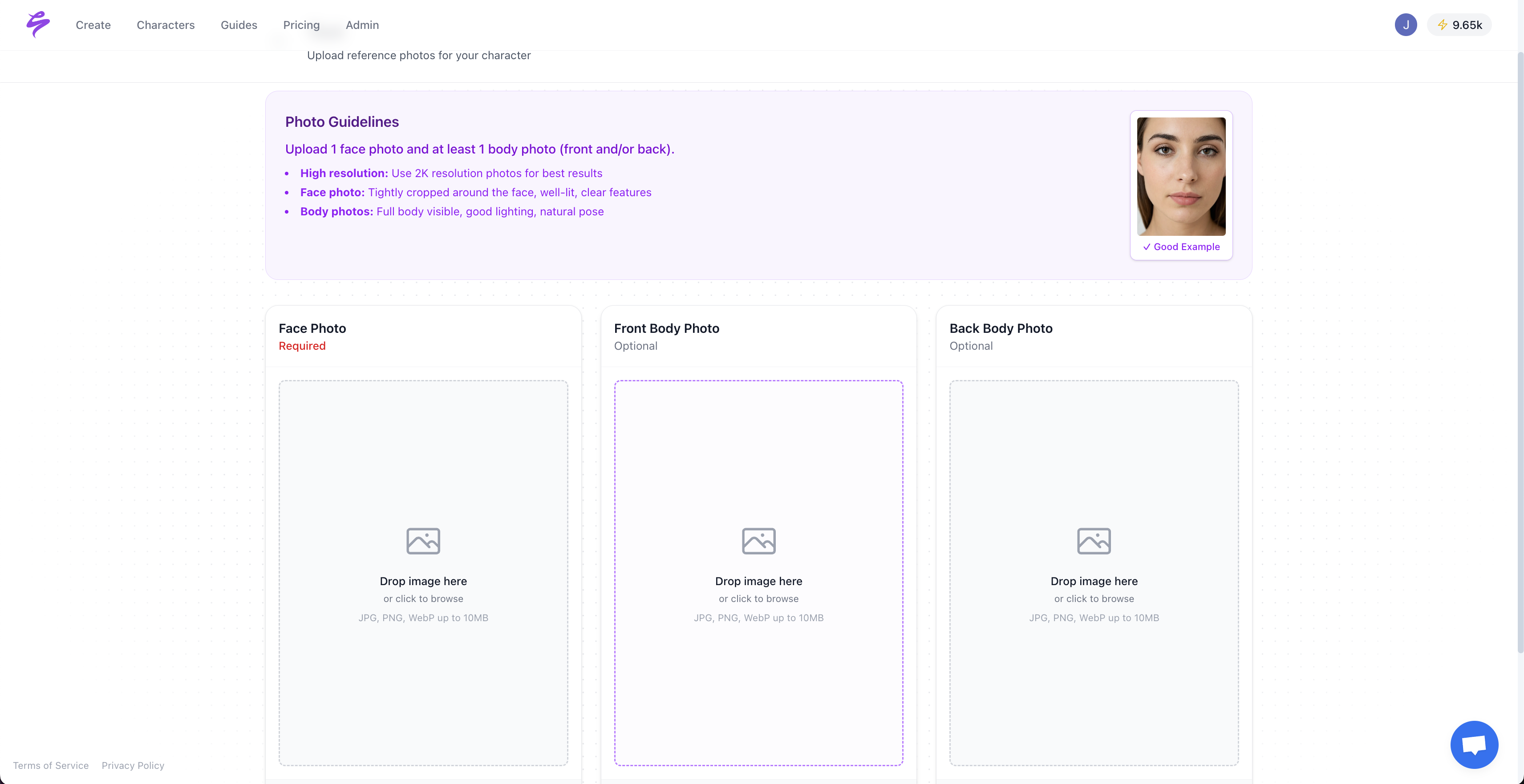

Private Likeness Models: Upload three photos to build a personal AI model that never touches public training data or leaks to other users. Your likeness stays private and under your control at every step.

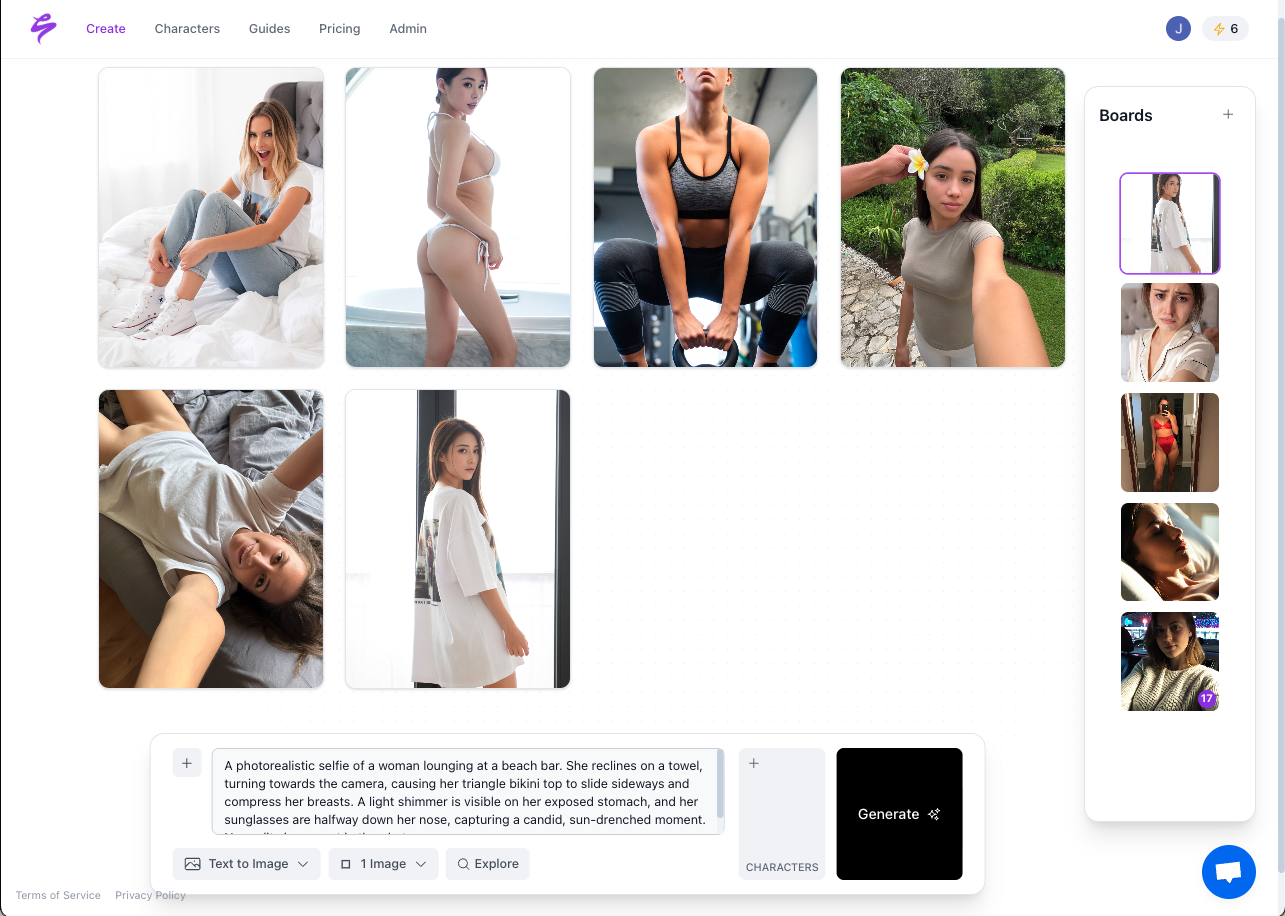

Hyper-Realistic Quality: Generate convincing SFW and NSFW content tailored for OnlyFans, Fansly, TikTok, and Instagram. Fans experience consistent authenticity while you scale content output dramatically.

Agency-Ready Workflows: Use built-in approval flows, brand consistency tools, and team management features designed for agencies that manage multiple talents and need safe, repeatable systems.

Privacy-First Architecture: Models stay private, isolated, and never train any other system. This structure removes many privacy exposures that affect public AI tools.

Unlike Higgsfield, Krea, or other general-purpose generators, Sozee focuses only on creator monetization workflows. The platform aims to turn the 100:1 gap between content demand and what creators can produce into a scalable revenue engine without legal or ethical compromise.

Scale safely and go viral today with ethical AI designed for creators.

Real Creator Outcomes and 2026 Legal Wake-Up Calls

The creator economy now splits between those who scale safely with compliant AI and those who face harsh legal fallout. These scenarios highlight what that divide looks like in practice.

Agency Success Story: A major OnlyFans agency scaled content output across more than 50 creators using Sozee’s private model system. The agency protected creator privacy, kept control over likeness use, and increased revenue without extra shoot days.

Virtual Influencer Breakthrough: A virtual influencer built with Sozee grew followers quickly and secured sponsorship deals. Consistent visuals and safe likeness management gave brands confidence to invest.

Legal Warning: The New York Times sued Perplexity AI in December 2025 for allegedly copying its journalism, while media companies including Condé Nast and Forbes sued Cohere for copyright infringement. These cases show that AI-related copyright claims now carry very real legal consequences.

The signal for 2026 is clear. Creators must choose between risky public AI tools and compliant private solutions built for their specific legal and ethical needs.

Frequently Asked Questions

Can you get sued for using AI-generated images?

Yes, creators face several lawsuit risks when they use AI-generated images. Copyright infringement claims appear when AI tools train on copyrighted works without permission. Right of publicity violations arise when creators generate images of real people without consent. Contract breaches occur when platforms require authentic content but creators secretly use AI. The safest path uses private AI tools like Sozee that build models from your own photos and avoid tainted training data.

Is it ethical to use AI art generators?

For creators, ethics depend on the specific tool and workflow. Public AI generators trained on scraped copyrighted works raise serious concerns about artist exploitation and lack of consent. Private AI tools that build models from your own photos with explicit consent can support ethical use when you disclose them properly. The priority is choosing tools that respect intellectual property, stay transparent with audiences, and avoid fueling the devaluation of human creativity.

What are the main legal risks of AI image generators?

The five primary legal risks include copyright infringement from training data, uncopyrightable outputs that lack protection, right of publicity violations for using likenesses without consent, contract breaches with content platforms, and deepfake liabilities under new state laws. Creators can reduce these risks by using private, consent-based AI tools and by following clear disclosure and compliance practices.

Do AI generated images have copyright protection?

No, the US Copyright Office has consistently ruled that purely AI-generated works cannot receive copyright protection. Creators cannot stop others from copying or reusing these images, which limits monetization. However, when a work combines AI generation with substantial human creativity, such as meaningful editing, selection, or arrangement, the human-authored portions may qualify for copyright protection.

What are the main ethical issues with AI art for OnlyFans creators?

OnlyFans creators face unique ethical challenges such as fan trust erosion when hidden AI use comes to light, devaluation of authentic human content through AI flooding, potential contract violations with platforms that require genuine content, and privacy concerns when generating realistic intimate content. The adult creator economy depends heavily on authenticity and personal connection, so ethical AI use becomes crucial for long-term success.

Conclusion: Why Private AI Is Now Non-Negotiable for Creators

Ethical and legal risks from AI image generators now pose existential threats to creators, from copyright lawsuits to broken fan trust. At the same time, the content crunch pushes creators toward AI support. Compliant, private tools like Sozee give creators a path to scale ethically while staying protected.

Get started with Sozee.ai today and address your ethical and legal AI risks while scaling safely.