Key Takeaways

- Creators need scalable content production that does not expose their likeness or identity to misuse, leaks, or unauthorized training.

- General AI platforms often rely on shared models and broad data policies that can put creator privacy, control, and revenue at risk.

- Specialized private model platforms use isolated models, strict retention rules, and advanced encryption to keep likeness data separate and secure.

- Clear consent, auditability, and role-based access help agencies and teams collaborate while still protecting sensitive creator content.

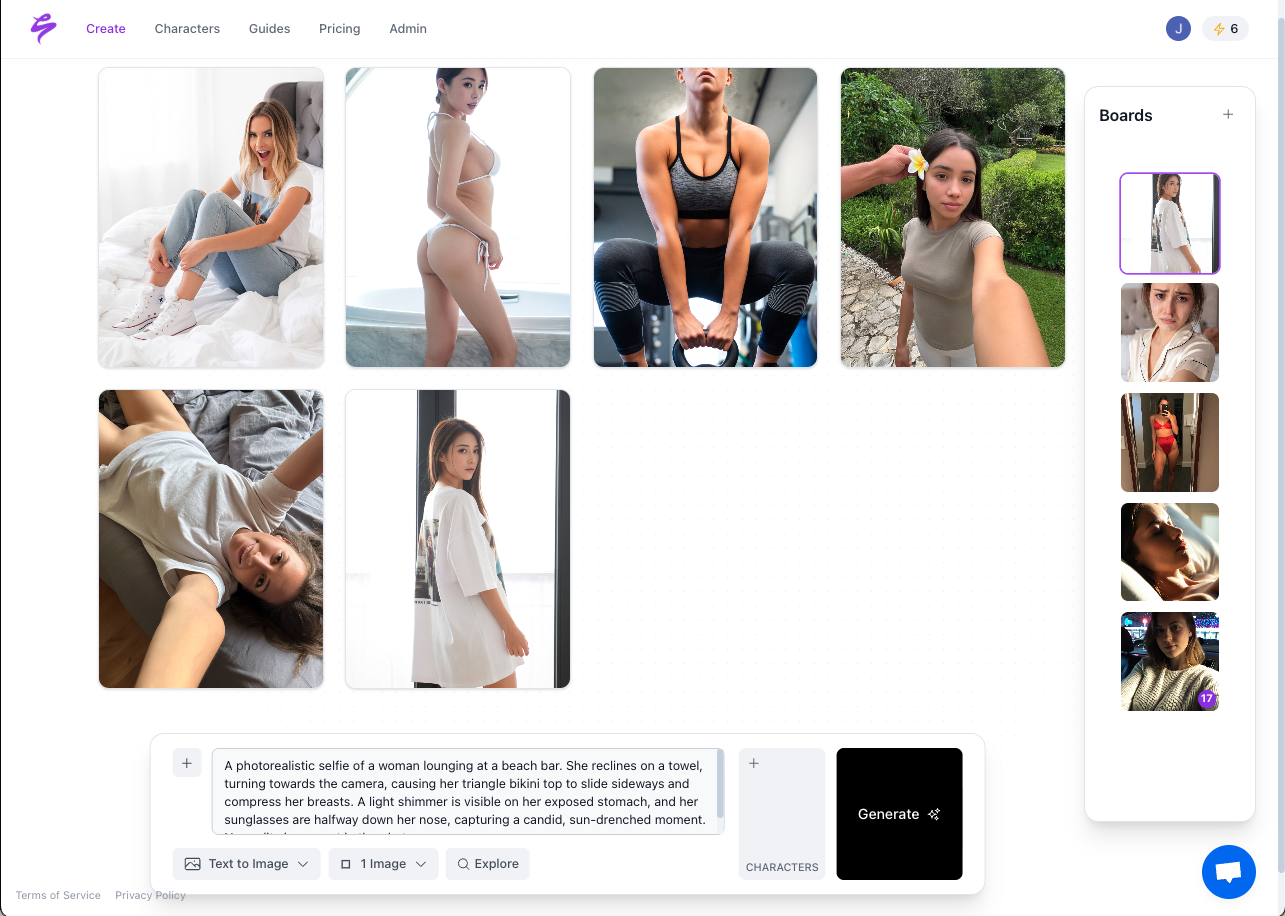

- Sozee provides private likeness models, creator-first controls, and secure workflows for scaling content safely, and creators can get started at Sozee.

Protecting Identity While Scaling Content

The creator economy rewards constant publishing, and more output often leads to more traffic, sales, and revenue. This demand has created a content gap where fan demand can exceed a creator’s capacity, which drives burnout and inconsistent posting schedules.

AI-generated content offers leverage, yet it also introduces serious risks around personal likeness, biometric data, and brand reputation. Misuse of images, unauthorized training, and model leakage can damage income and trust, especially as regulations like GDPR and the EU AI Act define strict rules for handling personal and biometric data.

Creators who earn from their image and brand must treat AI platform choice as a business decision, not just a technical one. A single breach or misuse of a likeness model can undermine years of audience building.

Comparing Privacy Options: General AI vs Private Likeness Platforms

The AI ecosystem splits into broad general-purpose tools and specialized private model platforms. Each path has distinct privacy and security tradeoffs for creators.

General AI Platforms: Broad Use, Broad Risk

General AI platforms serve many industries, so their designs favor versatility over niche creator needs. They often use data pooling where user uploads may feed global training models, apply basic access controls that do not match agency workflows, and maintain generic consent language that may not reflect likeness rights or paid content rules.

These platforms often operate under terms written for many business cases instead of the specific requirements of creators whose income depends on controlling their digital likeness.

Specialized Private Platforms: Privacy by Design

Specialized tools like Sozee start from a privacy-by-design and security-by-default mindset. Creator content is treated as protected intellectual property, not generic data.

These platforms typically provide isolated models for each creator, explicit data ownership terms, clear deletion procedures, and workflows tailored to monetizable content instead of casual experimentation. This structure supports scale while maintaining strict control of likeness data.

Head-to-Head: Data Privacy And Security Features

Selecting an AI platform for sensitive creator content calls for close review of four areas: model isolation, encryption, retention, and access controls.

Model Isolation And Training Data Use

General AI tools often train on large pooled datasets. User uploads may be used to improve overall model performance, which raises the risk that elements of a creator’s likeness appear in content generated for someone else.

Sozee and similar private likeness platforms use a strict one-model-per-creator approach. Each creator’s photos train only their own model, which remains isolated from every other user. This design keeps likeness data out of shared datasets and out of other users’ outputs.
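
The one-model-per-creator idea can be pictured with a small sketch. It assumes a hypothetical in-memory store; the class names, creator IDs, and file names are invented for illustration and do not describe Sozee's actual implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LikenessModel:
    """Hypothetical stand-in for one creator's private model."""
    owner_id: str
    training_photos: list[str] = field(default_factory=list)


class IsolatedModelStore:
    """Keeps one model per creator and never pools uploads across creators."""

    def __init__(self) -> None:
        self._models: dict[str, LikenessModel] = {}

    def add_photos(self, creator_id: str, photos: list[str]) -> None:
        # Uploads are attached only to the uploading creator's own model.
        model = self._models.setdefault(creator_id, LikenessModel(creator_id))
        model.training_photos.extend(photos)

    def get_model(self, requester_id: str, creator_id: str) -> LikenessModel:
        # Any request for someone else's model is refused outright.
        if requester_id != creator_id:
            raise PermissionError("likeness models are private to their owner")
        return self._models[creator_id]


store = IsolatedModelStore()
store.add_photos("creator_a", ["a1.jpg", "a2.jpg"])
store.add_photos("creator_b", ["b1.jpg"])
```

In this sketch there is no shared dataset at all, so there is nothing for another creator's model to learn from. A real platform would enforce the same boundary at the storage and training layers rather than in a single class.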

Encryption Standards And Secure Processing

Many general AI platforms encrypt data in transit and at rest, yet they may still process it in plaintext during computation. Fully Homomorphic Encryption (FHE) allows computation directly on encrypted data without decrypting it, which greatly reduces exposure during training and inference, but it remains uncommon in general-purpose tools.

Specialized platforms invest in techniques like FHE and Trusted Execution Environments (TEEs). Combining encrypted computation with secure hardware keeps keys protected and keeps intermediate results from appearing in readable form outside the protected environment, which strengthens privacy at the most sensitive stages.
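
Computing on encrypted data can be hard to picture, so here is a toy sketch. It uses the Paillier scheme, which supports only addition on ciphertexts and is therefore partially homomorphic rather than FHE; it is swapped in because it fits in a few lines. The primes, function names, and values are illustrative assumptions, not a description of how Sozee or any other platform processes data.

```python
import math
import random

# Toy primes for readability only. Real systems use vetted libraries
# and keys that are thousands of bits long.
P, Q = 61, 53
N = P * Q
N_SQUARED = N * N
LAMBDA = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P-1, Q-1)
MU = pow(LAMBDA, -1, N)  # modular inverse (Python 3.8+)


def encrypt(message: int) -> int:
    """Encrypt an integer 0 <= message < N using the public value N."""
    while True:
        r = random.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, message, N_SQUARED) * pow(r, N, N_SQUARED)) % N_SQUARED


def decrypt(ciphertext: int) -> int:
    """Recover the plaintext using the private values LAMBDA and MU."""
    x = pow(ciphertext, LAMBDA, N_SQUARED)
    return ((x - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    """Add two hidden plaintexts by multiplying ciphertexts; no key is needed."""
    return (c1 * c2) % N_SQUARED


# The party calling add_encrypted never sees 12, 30, or their sum.
total = decrypt(add_encrypted(encrypt(12), encrypt(30)))
```

Only the holder of the private values can read `total`. FHE extends this property from addition to general computation, at a much higher computational cost.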

Data Retention And De-identification

General tools often rely on broad retention rules that serve operational convenience, and de-identification may be shallow, leaving traces in logs or model weights.

Sozee supports strict, documented retention timelines and irreversible deletion paths. Techniques such as tokenization, redaction, and format-preserving encryption help prevent raw sensitive data from reaching AI models or storage systems, which lowers long-term privacy risk.
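
A minimal sketch of tokenization and redaction, using only the Python standard library, shows how raw identifiers can be stripped before a record reaches a model or a log. The key, field names, and sample record are hypothetical, and format-preserving encryption is not covered here.

```python
import hashlib
import hmac
import re

# Hypothetical secret; in practice it would come from a key management system.
TOKEN_KEY = b"example-key-not-for-production"

# Deliberately simple pattern for illustration; real redaction needs broader rules.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def tokenize(value: str) -> str:
    """Replace an identifier with a stable keyed token (HMAC-SHA256)."""
    digest = hmac.new(TOKEN_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


def redact_emails(text: str) -> str:
    """Mask anything that looks like an email address."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


def sanitize_record(record: dict) -> dict:
    """Return a copy that is safe to pass to downstream systems."""
    return {
        "creator": tokenize(record["creator"]),
        "caption": redact_emails(record["caption"]),
    }


clean = sanitize_record(
    {"creator": "jane_doe", "caption": "Contact me at jane@example.com for collabs"}
)
```

The token is stable, so records for the same creator can still be grouped, but the original name cannot be read back without the key and a lookup of candidate values.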

Access Controls And Audit Trails

General AI tools usually provide standard account logins and simple permissions, which may not match how agencies and teams manage brand assets, approvals, and multi-person workflows.

Sozee uses granular role-based access control so creators, managers, and editors can each receive appropriate permissions. Detailed audit logs give organizations visibility into who accessed which models and when, which supports compliance and internal accountability.
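
Role-based permissions paired with an audit trail can be sketched in a few lines. The role names, actions, and log fields below are assumptions chosen for illustration, not Sozee's actual permission model.

```python
from datetime import datetime, timezone

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "creator": {"train_model", "generate", "export", "delete_model"},
    "manager": {"generate", "export"},
    "editor": {"generate"},
}

audit_log = []


def authorize(user: str, role: str, action: str, model_id: str) -> bool:
    """Check the role's permissions and record the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(
        {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "model_id": model_id,
            "allowed": allowed,
        }
    )
    return allowed


editor_can_generate = authorize("sam", "editor", "generate", "model_001")
editor_can_delete = authorize("sam", "editor", "delete_model", "model_001")
```

Logging denied attempts as well as successful ones is the design choice that matters here: the log then answers both who used a model and who tried to.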

| Feature | General AI Platforms | Specialized Private Model Platforms | Sozee Focus |
| --- | --- | --- | --- |
| Model training data | Shared and pooled | Isolated and per user | One private likeness model per creator |
| Encryption during compute | Standard transit and rest | Advanced encrypted compute | Secure processing without exposing raw data |
| Data retention | Broad, platform-driven | Defined and limited | Transparent timelines and deletion options |
| Access controls | Basic user accounts | Role-based permissions | Agency-ready roles and audit trails |

See how Sozee applies these controls to creator likeness models so you can scale content with clear privacy boundaries.

Ethics, Creator Control, And Future-Ready Security

Technical defenses only solve part of the privacy problem. Strong creator rights, clear consent, and ethical guardrails are equally important for sustainable AI use.

Ethical AI And Ownership Of Likeness

Robust privacy requires transparent terms, understandable consent flows, and simple ways for creators to control how their likeness and outputs are used. Owners of digital likenesses need confidence that they can set boundaries, approve uses, and withdraw consent if needed.

Concerns about AI being misused for decryption and other cyberattacks show why platforms must pair advanced technology with responsible policies and clear user controls.

Balancing Encryption With Performance

Advanced encryption can add computational overhead, so platform design must balance security with responsiveness. Hardware acceleration and model optimization techniques help encrypted AI systems run efficiently, which keeps generation speeds practical for daily creator workflows.

Sozee focuses on giving creators fast turnaround times while still preserving strong protections for likeness data and training assets.

Why Sozee Fits Private Likeness Models

Sozee was built specifically for monetizable creator workflows where the likeness itself is the core asset. This focus shapes every feature, from onboarding and training to export and team collaboration.

Each creator receives an isolated likeness model, and that creator’s data is never used to train other users’ models or global systems. Output quality aims to match the creator’s authentic appearance and style, while the underlying controls keep that likeness locked to the rightful owner.

Platform workflows support content planning, SFW and NSFW routes, and export options that align with creator and agency business models. This approach allows content libraries to grow without sacrificing ownership or privacy.

Start building a private likeness model with Sozee and expand your content output while protecting your identity.

Frequently Asked Questions About AI Data Privacy

How does Sozee keep my likeness out of other users’ models?

Sozee trains a dedicated likeness model for each creator and keeps that model isolated from all others. Your uploaded photos feed only your model, which is not reused for global training or for other accounts. This separation prevents your likeness from appearing in another user’s generated content.

What happens to my data if I leave a private model platform?

Private model platforms that prioritize privacy, including Sozee, define clear retention and deletion policies. When you close an account, the platform schedules your likeness model and associated training data for secure erasure so that remaining traces cannot be reconstructed. These processes align with modern privacy regulations and best practices.

How can I evaluate whether an AI platform is truly privacy-focused?

Review the platform’s documentation for model isolation, encryption methods, retention timelines, and data ownership terms. Look for detailed audit trails, role-based permissions, and explicit statements that your likeness will not be used to train shared models. Compliance with data protection laws and visible security practices are strong indicators of a privacy-first approach.

Conclusion: Secure Growth For Monetizable AI Content

Creators and agencies that rely on personal likeness need AI tools that respect privacy as much as they enhance productivity. General AI platforms often favor pooled models and broad data use, which can conflict with the needs of professional creators.

Specialized private model platforms like Sozee show that creators can scale content, protect identity, and maintain ownership of their digital likeness at the same time. Careful platform selection, combined with clear security and consent standards, sets the foundation for sustainable growth in the AI-powered creator economy.