Executive summary

- AI photorealistic digital models give creators consistent, on-demand content without the limits of traditional shoots.

- Core technologies such as GANs, motion capture, and real-time rendering now compress months of visual work into minutes.

- Sozee enables quick digital twins and AI-native models from a few photos, fitting smoothly into existing creator and agency workflows.

- Ethical and transparent use of AI likenesses supports trust and privacy, and helps reduce issues related to the uncanny valley.

- Creators who adopt AI-driven photorealistic models can scale content output while maintaining brand identity and quality.

The Content Crisis Solved: Why Photorealistic Digital Models with AI Are Essential

Why Photorealistic Digital Models Matter for Creators

Content demand now exceeds what human-only production can deliver. Creators face burnout as they try to meet constant posting schedules, agencies manage inconsistent output, and virtual influencer builders work through long development cycles that struggle to maintain visual consistency.

Photorealistic digital models address these limits by providing AI-generated or enhanced representations of humans that can look very close to real photographs. These digital personas go far beyond simple filters or avatars. They function as complete, reusable characters that appear in many content scenarios with consistent visual identity.

For modern creators and agencies, these models reduce physical constraints. They support 24/7 content production without fatigue, travel, or scheduling issues, and they keep brand visuals consistent across platforms and campaigns. This consistency helps audiences recognize creators and brands even as content volume increases.

Audience engagement also benefits. In an era of digital fatigue, people respond to content that feels familiar and coherent over time. Photorealistic models allow creators to explore new environments, styles, and concepts without the overhead of constant shoots, while still presenting a recognizable appearance that supports trust and long-term engagement.

How AI Shifted Photorealism from Specialist Work to Everyday Tools

Early attempts at digital humans depended on complex computer graphics and expensive CGI. Teams built detailed 3D models, recorded motion capture sessions, and spent months in post-production. These methods remained mostly out of reach for individual creators and smaller agencies.

The shift came with advanced AI methods such as Generative Adversarial Networks (GANs) and large model architectures that learn patterns from data at scale. Modern systems take a small set of inputs, sometimes only a few photos, and reconstruct highly detailed digital personas in minutes instead of months.

This change opened high-end visual capability to a much wider group. Individual creators and lean agencies now access tools that once required studio-level budgets. As a result, smaller teams can compete more directly with traditional media producers on visual quality and content volume.

Core Technologies Behind AI-Driven Photorealism

A clear view of the core technologies helps creators use AI tools more effectively. Generative Adversarial Networks (GANs) are a foundational technique for generating photorealistic synthetic faces.

The GAN setup relies on an adversarial training process with two networks that improve together. One network, the generator, produces images that aim to mimic real samples, while the other, the discriminator, evaluates whether each image looks real or synthetic. As training continues, the discriminator gets better at spotting fakes and the generator gets better at fooling it, so the generated images become increasingly convincing.
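The adversarial objective can be sketched with plain binary cross-entropy losses. This is a minimal illustration under stated assumptions, not the training code of any particular platform: the function names and the sample discriminator scores are hypothetical, and the generator loss shown is the common non-saturating variant.

```python
import math

def bce(prob: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for a single predicted probability."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))

def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """The discriminator wants D(real) near 1 and D(fake) near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: the generator wants D(fake) near 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that an image is real).
real_scores = [0.90, 0.95]
early_fakes = [0.05, 0.10]  # early in training: fakes are easy to spot
later_fakes = [0.45, 0.55]  # later in training: fakes are hard to tell apart

# The generator's loss falls as its images fool the discriminator more often,
# while the discriminator's loss rises for the same reason.
print(generator_loss(early_fakes) > generator_loss(later_fakes))
print(discriminator_loss(real_scores, early_fakes)
      < discriminator_loss(real_scores, later_fakes))
```

Both comparisons print `True`: the two losses pull in opposite directions, which is what drives the networks to improve together.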

3D scanning and photogrammetry techniques are used to construct precise digital twins, often requiring hundreds of high-resolution photos from all angles. Newer AI systems reduce these requirements and sometimes achieve strong likeness from a small photo set.

Motion capture (MoCap) transfers real human gestures and expressions onto avatars, driving natural movement. Paired with AI-driven animation, this motion data helps digital models move, react, and emote in ways that feel more natural to viewers.

Real-time rendering completes the pipeline by simulating how light interacts with skin, hair, and clothing. Accurate handling of reflections, shadows, and surface properties gives digital models visual depth and texture that resemble high-quality photography.

Mastering the Art: How AI Creates Indistinguishable Digital Models

Foundations of Lifelike AI Models

Truly photorealistic digital models depend on several visual elements working together: correct facial and body proportions, lifelike textures, and especially believable eyes. When any of these is missing, viewers tend to perceive the model as artificial.

Anatomical accuracy provides the basic structure. AI systems must learn not only surface appearance but also the underlying bone and muscle relationships that make faces and bodies look natural from many angles. Advanced models draw on large datasets of human anatomy to keep proportions realistic across poses, lighting, and expressions.

Texture and material quality form the next layer. Human skin shows complex behavior, including subtle color variation, pores, fine hair, and changes under different light sources. AI-generated skin that ignores these details often looks flat or plastic. Strong systems capture these micro-details, including small imperfections, which helps digital humans feel more authentic.

Eyes play a central role in perceived realism. Viewers focus on eyes to judge emotion and authenticity. AI systems need to model not just iris detail and pupil size, but also reflections, moisture, and small involuntary movements. Small inaccuracies in eye behavior often signal that a model is synthetic, even when other elements look convincing.

Dynamic Realism That Makes Models Feel Alive

Static images can look realistic, but movement and expression often reveal whether a model feels convincing. Dynamic realism depends on accurately capturing natural motion and micro-expressions, such as subtle muscle movements and eye blinks, not just on rendering a realistic static face.

Micro-expressions and detailed facial rigs help digital models show fine muscle activity. Natural non-verbal cues and voice synthesis tuned to individual speech patterns and emotional tone add further realism.

Natural motion systems go beyond facial animation. Critical modules include voice synthesis for natural speech and advanced animation systems for realistic breathing, blinking, weight shifts, and facial expressions. These systems guide how a digital model stands, walks, breathes, and reacts.

Motion capture data and AI-driven behavior models help digital characters avoid stiff or repetitive movement. Idle animations, subtle shifts in weight, and small gestures around the eyes and mouth create the sense that a model is present in the scene, not just placed into it.
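One way to avoid repetitive idle motion is to randomize the timing of small actions such as blinks. The sketch below is only an illustration of that idea: the function name, the 2 to 6 second gap range, and the seeding scheme are assumptions chosen for the example, not measured values or part of any specific animation system.

```python
import random
from typing import Optional

def blink_times(duration_s: float,
                min_gap_s: float = 2.0,
                max_gap_s: float = 6.0,
                seed: Optional[int] = None) -> list[float]:
    """Return timestamps (in seconds) at which an idle character blinks.

    Gaps between blinks are drawn uniformly from [min_gap_s, max_gap_s],
    so the pattern never settles into a fixed, mechanical rhythm.
    """
    rng = random.Random(seed)  # a private generator keeps results reproducible
    times: list[float] = []
    t = rng.uniform(min_gap_s, max_gap_s)
    while t < duration_s:
        times.append(t)
        t += rng.uniform(min_gap_s, max_gap_s)
    return times

# Schedule blinks for a 30-second idle clip; the seed makes the clip repeatable.
schedule = blink_times(30.0, seed=7)
print([round(t, 2) for t in schedule])
```

A fixed seed gives the same schedule on every run, which is useful when a clip has to be re-rendered, while a different seed per clip keeps a content library from reusing one blink pattern.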

Advanced Rendering for Higher Realism

Rendering quality strongly influences how realistic a digital model appears. Advanced rendering techniques, such as real-time ray tracing, simulate natural light interactions on digital skin and hair, which helps reduce an artificial or synthetic look.

Physically based rendering and ray tracing model how light bounces, reflects, and passes through materials. These methods create layered shadows, soft reflections, and nuanced highlights that resemble real-world photography. When a digital model reacts correctly to different lighting conditions, it becomes easier to place that model into many environments without visual mismatch.
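To make the idea of light interacting with a surface concrete, here is a deliberately simplified shading sketch. It uses a classic Lambert diffuse term plus a Blinn-Phong highlight, which is far simpler than the physically based and ray-traced models that production renderers use. The function names and the material values (`albedo`, `specular`, `shininess`) are assumptions for illustration.

```python
import math

Vec3 = tuple[float, float, float]

def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length; a zero vector is returned unchanged."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        return v
    return (v[0] / length, v[1] / length, v[2] / length)

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def shade(normal: Vec3, to_light: Vec3, to_camera: Vec3,
          albedo: float = 0.8, specular: float = 0.3,
          shininess: float = 32.0) -> float:
    """Return an unclamped brightness for one surface point and one light."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_camera)
    n_dot_l = max(0.0, dot(n, l))
    if n_dot_l == 0.0:
        return 0.0  # the surface faces away from the light
    diffuse = albedo * n_dot_l  # Lambert: brightness follows the light angle
    # Blinn-Phong: the highlight peaks where the normal aligns with the
    # half vector between the light and camera directions.
    half = normalize((l[0] + v[0], l[1] + v[1], l[2] + v[2]))
    highlight = specular * max(0.0, dot(n, half)) ** shininess
    return diffuse + highlight

facing = shade((0, 0, 1), (0, 0, 1), (0, 0, 1))     # light straight on
grazing = shade((0, 0, 1), (1, 0, 0.2), (0, 0, 1))  # light at a shallow angle
behind = shade((0, 0, 1), (0, 0, -1), (0, 0, 1))    # light behind the surface
print(facing > grazing > behind)
```

The same surface point gets brighter or darker purely from the geometry of light and camera, which is why a model shaded this way can be moved between lighting setups without repainting its textures.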

Automated visual effects also support efficiency. Automating facial rigging, animation, tracking, and motion retargeting reduces manual VFX work, which lets creators spend more time on creative direction than on technical setup.

Modern rendering engines process these calculations in real time or near real time. Creators can see changes immediately, adjust poses or lighting, and export final content without long waits. This speed turns photorealistic digital model creation into an interactive creative process rather than a slow production line.

Ready to create photorealistic digital models with AI for your content library? Start creating now with Sozee’s AI platform for creators.

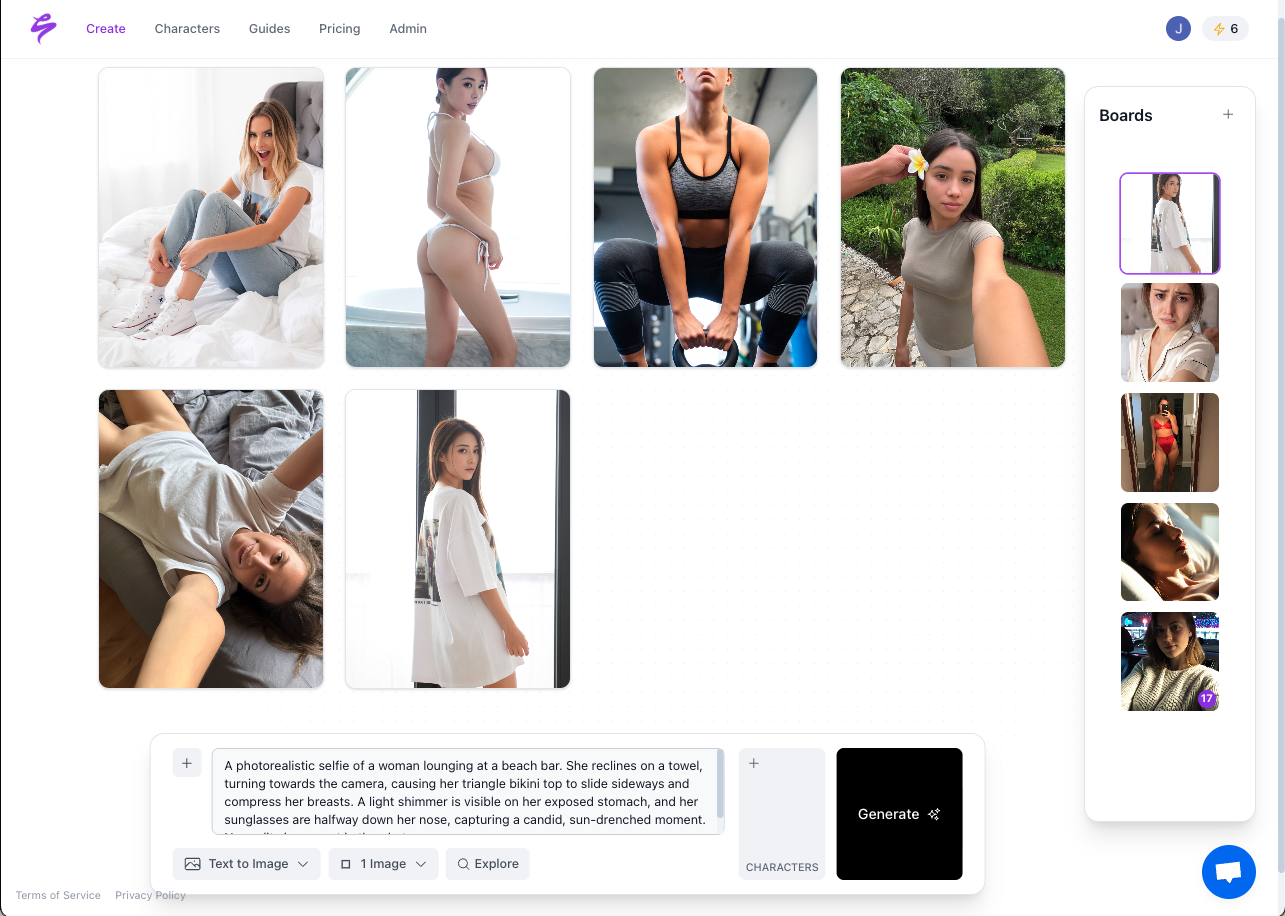

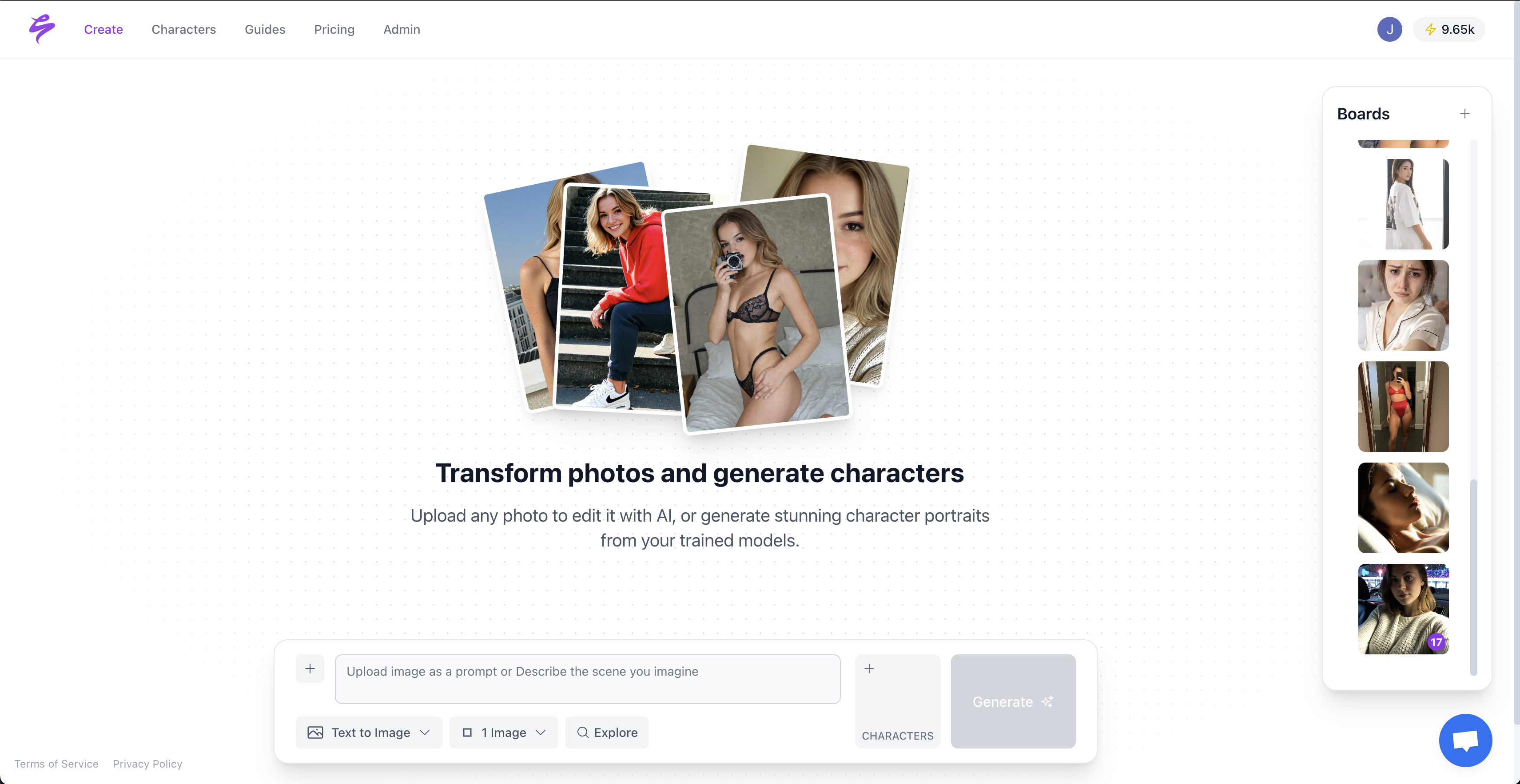

Practical Strategies to Create Photorealistic Digital Models with Sozee

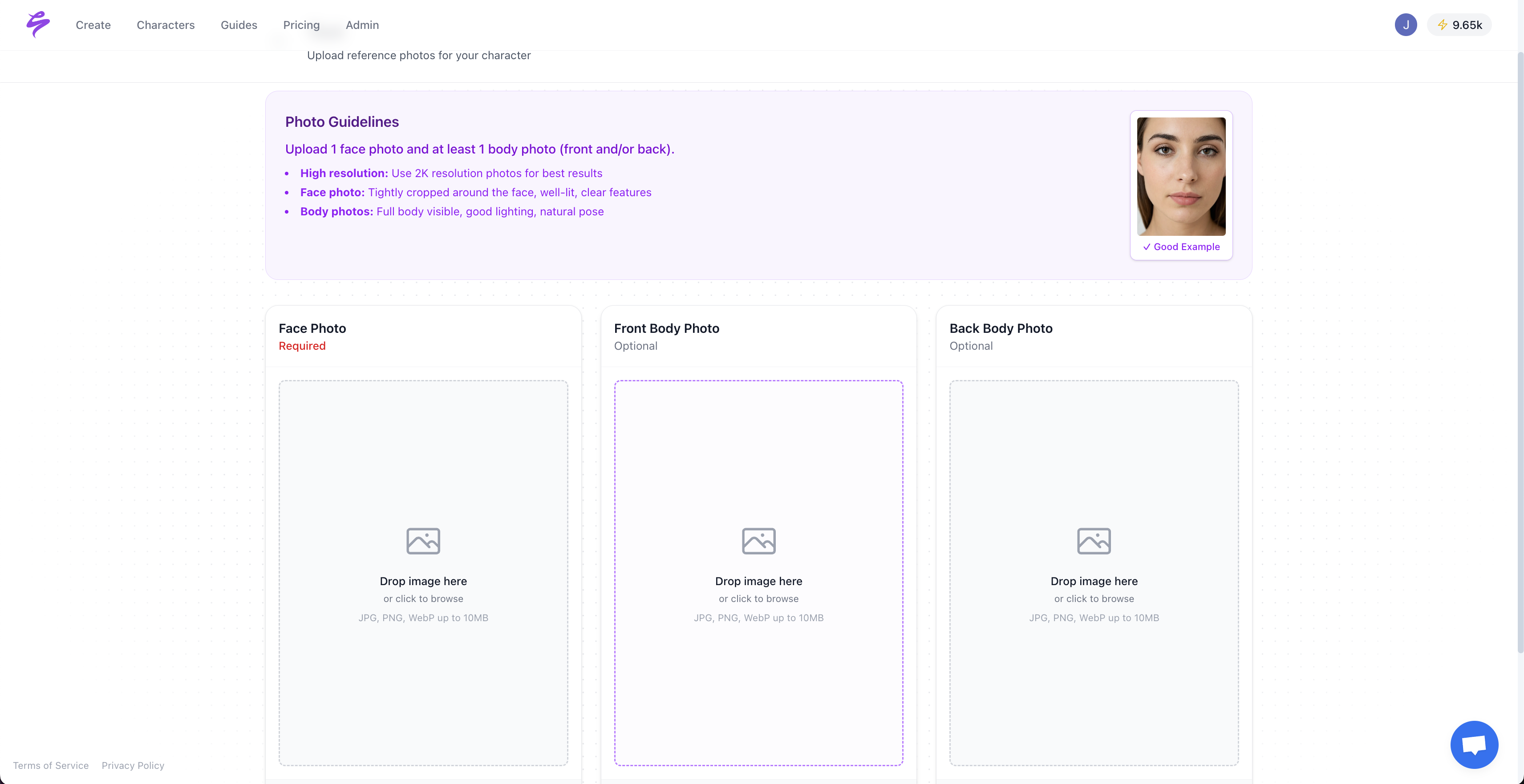

Fast Digital Twins for Real Creators

Traditional digital doubles relied on long scanning sessions, many reference photos, and weeks of manual work. Modern AI platforms such as Sozee change this workflow by reconstructing detailed likenesses from a small number of high-quality images.

Sozee analyzes a short photo set to capture facial structure, skin texture, and distinctive features. From as few as three clear photographs, the system can produce a digital twin that holds up across multiple poses, lighting setups, and content formats.

The creation of 3D photorealistic digital humans benefits from modular, distributed architectures that support scalable workflows. Systems built on these ideas help creators generate models and content quickly instead of waiting for long processing or manual revisions.

Instant or near-instant turnaround is a major advantage of AI likeness reconstruction. Traditional methods often took weeks before a usable model appeared. Modern tools shorten this to minutes, making it easier to react to trends, deliver custom content, and keep a consistent posting schedule.

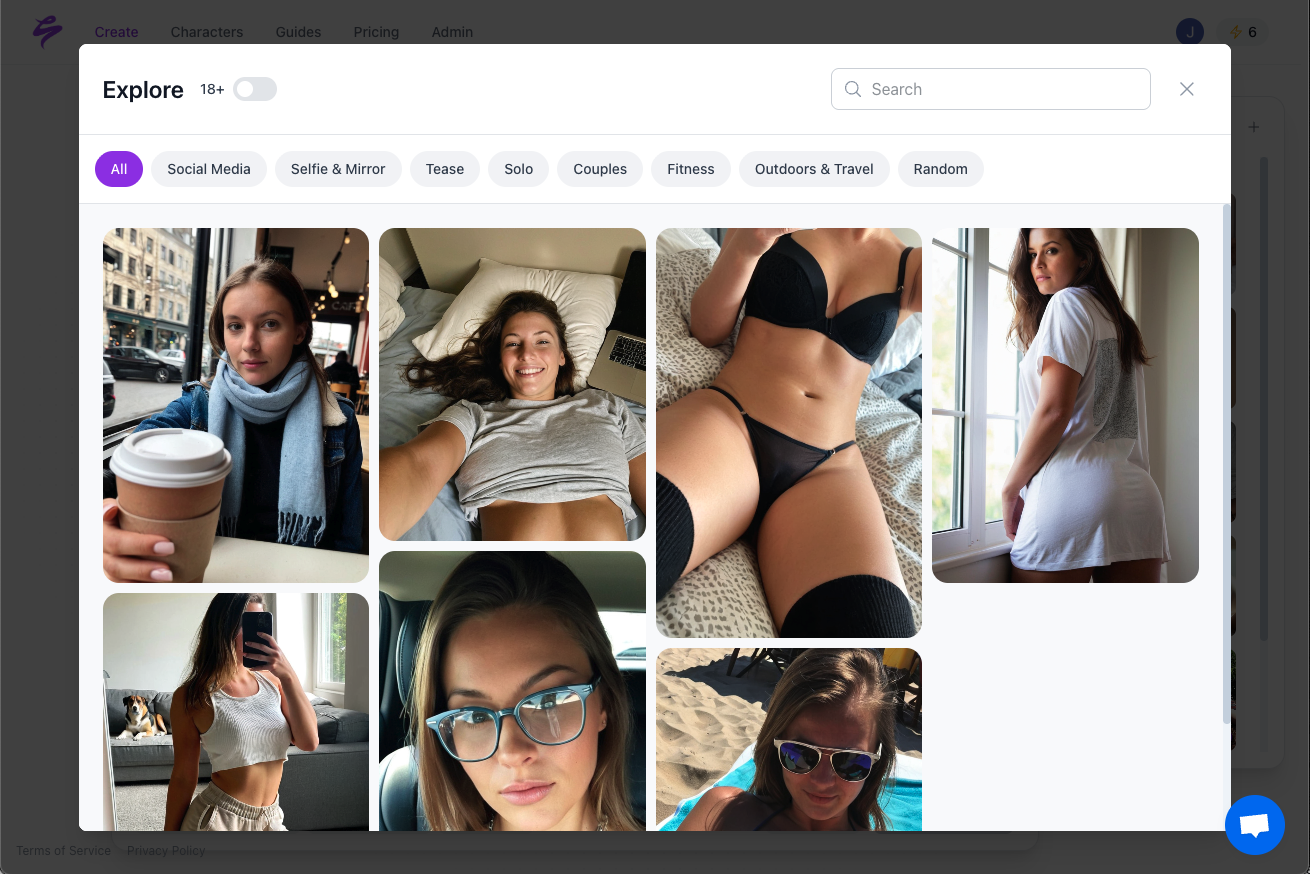

AI-Native Models for Unlimited Creative Variations

AI-native models offer another path beyond recreating existing people. These digital personas are designed specifically for content creation, marketing, or virtual influence, and do not need to match a real individual.

Consistency becomes easier to manage with AI-native characters. Human creators often change hair, style, or energy across shoots, but AI models maintain a stable appearance. This reliability helps brands and creators keep a clear identity across campaigns and platforms.

High-volume and diverse content production becomes practical at scale. Creators can place AI-native models into many scenes with different clothing, backgrounds, and actions, without planning new shoots. This flexibility supports:

- Rapid testing of creative concepts and formats

- Quick adaptation to audience feedback or platform trends

- Localized content variations using the same core persona

Personality and behavior can also be tuned. Creators and agencies can define tone of voice, typical expressions, and interaction styles, then keep those traits consistent across videos, images, and campaigns.

Integrating AI Models into Daily Creator Workflows

Efficient use of photorealistic AI models depends on how well they fit into existing workflows. Prompt engineering plays a central role because it shapes how the AI interprets instructions and style references.

Effective prompts combine clear visual direction with room for controlled variation. Creators often build internal prompt libraries that match their brand tone, lighting preferences, camera angles, and styling. These reusable instructions reduce guesswork and support consistent results over time.
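An internal prompt library of this kind can be as simple as a set of named style presets combined with a per-shot subject and scene. The sketch below is a hypothetical structure, not Sozee's API: `StylePreset`, `build_prompt`, `PROMPT_LIBRARY`, and the preset wording are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class StylePreset:
    """Reusable brand direction; every field name here is hypothetical."""
    lighting: str
    camera: str
    styling: str

def build_prompt(subject: str, scene: str, preset: StylePreset) -> str:
    """Join the per-shot details with the fixed brand preset."""
    parts = [subject, scene, preset.lighting, preset.camera, preset.styling]
    return ", ".join(part.strip() for part in parts if part.strip())

# Presets stay fixed across shoots; only the subject and scene vary.
PROMPT_LIBRARY = {
    "studio_portrait": StylePreset(
        lighting="soft key light with gentle fill",
        camera="85mm portrait framing at eye level",
        styling="neutral wardrobe, minimal jewelry",
    ),
    "street_casual": StylePreset(
        lighting="warm golden-hour sunlight",
        camera="35mm framing from a slightly low angle",
        styling="denim jacket, relaxed pose",
    ),
}

prompt = build_prompt(
    subject="the creator's digital twin",
    scene="rooftop cafe",
    preset=PROMPT_LIBRARY["street_casual"],
)
print(prompt)
```

Keeping the brand-level choices in one place means a change to lighting or styling propagates to every future prompt, while the subject and scene remain free for controlled variation.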

Agencies managing multiple creators or virtual influencers benefit from structured workflows. Sozee supports features such as client approval steps, style bundles, and reusable content templates that help maintain visual standards across teams and brands.

Integration with common publishing and management tools keeps AI-generated assets aligned with current processes. Direct exports to social platforms, connections to content calendars, and links to monetization tools reduce friction when shifting from test images to live campaigns.

Creators who want to experiment with AI-driven content at scale can explore Sozee’s environment. Get started with the AI Content Studio built for creators and agencies.

Navigating the Landscape: Challenges, Ethics, and the Future of AI Photorealism

Reducing the Uncanny Valley Effect

The uncanny valley describes the discomfort people feel when a digital human looks almost real but not quite. This effect has limited past attempts at digital characters and remains a central challenge for photorealistic models.

Even highly realistic visuals can evoke distrust if subtle cues or timing seem off, so psychological and emotional cues deserve as much attention as technical detail.

Modern AI systems address this effect by improving small timing and motion cues. Realistic eye movements, natural breathing, slight asymmetries in facial expressions, and consistent reactions to context all help digital humans feel more comfortable to viewers.

Users tend to trust digital humans more when avatars exhibit authentic micro-expressions, natural body language, and responses that match social context. These factors support a sense of presence and reduce the feeling that a model is artificial.

Success in overcoming the uncanny valley depends on more than surface realism. Systems must capture how people behave and respond, not just how they look. Emotional timing, reaction patterns, and small behavioral details all contribute to how viewers judge authenticity.

Transparency, Trust, and Responsible AI Use

Growing sophistication in photorealistic AI raises important questions about how these tools are used. Audiences care about whether an image or video shows a real person, a digital double, or a fully synthetic character.

Stylized CGI avatars avoid strict photorealism, which can foster honest user relationships and a clearer brand identity. That choice fits approaches that prioritize transparency and trust.

Privacy and security sit at the center of any workflow that uses real human likenesses, and ongoing work on standards aims to protect personal data and limit misuse.

Ethical deployment of AI likenesses requires clear consent, secure handling of uploaded images, and well-defined usage rules. Platforms that treat creator models as private and isolated assets help prevent unauthorized use or sharing.

Successfully deployed avatars can improve engagement and break digital fatigue, but they demand ethical transparency and user consent to reduce the legal and social risks of impersonation and misuse.

Choosing Between Photorealism and Stylized CGI

| Feature | Photorealistic Digital Models (AI) | Stylized CGI Avatars |
| --- | --- | --- |
| Visual goal | Very close to real photographs | Clearly different from reality (cartoonish or stylized) |
| Primary use cases | Scaling authentic-looking content, virtual influencers, marketing campaigns | Brand mascots, intentional non-human representation, customer service agents |
| Trust factor | Requires transparent disclosure, risk of uncanny valley if details are off | Can foster trust through clear distinction from real humans |
| Content scalability | High, supports large volumes of automated content | Moderate, often requires ongoing artistic input for major variations |

Creators who value privacy and ethics can build workflows that respect user expectations. Get started with Sozee to explore responsible AI model creation and deployment.

Frequently Asked Questions (FAQ) about Creating Photorealistic Digital Models with AI

How long does it take AI to create a photorealistic digital model?

Advanced AI platforms such as Sozee shorten model creation from months to minutes. Likeness reconstruction typically runs shortly after photo upload, and content generation can happen in real time. Creators can produce many variations without long waiting periods, unlike earlier methods that needed extensive scanning, manual modeling, and lengthy post-production.

Can AI-generated photorealistic models truly overcome the “uncanny valley”?

Modern AI systems have reduced the uncanny valley effect through improvements in micro-expressions, motion, and behavioral realism. Leading platforms focus on details such as eye movement, breathing, and context-appropriate reactions that support a more natural feel. Photorealism now includes both visual accuracy and behavior patterns that align with how people expect humans to act.

What input is typically required to create a photorealistic digital model using AI?

Current AI platforms need far less input than traditional capture pipelines. Many systems, including Sozee, can build detailed likenesses from as few as three high-quality photos. These inputs allow the AI to analyze facial structure, skin texture, and unique features to create a comprehensive digital persona. More advanced setups may still use 3D scanning or photogrammetry for special cases, but these are no longer required for standard content creation.

Is it ethical to create photorealistic digital models of real people using AI?

Ethical creation of photorealistic models depends on explicit consent, clear disclosure, and strong data protection. Responsible platforms keep models private to each creator, prevent sharing across accounts, and define acceptable use cases. Respect for privacy laws, platform policies, and individual rights helps creators use these tools in ways that support trust over time.

How can content creators ensure consistency when generating photorealistic digital models with AI?

Consistency comes from a combination of stable model training and structured workflows. Creator-focused tools offer reusable style bundles, saved prompts, and high-fidelity likeness recreation that keep a model’s appearance steady across formats. Advanced systems maintain key attributes such as skin tone, facial structure, and expression patterns, while also supporting consistent lighting and behavior across large content libraries.

Conclusion: Why Photorealistic AI Models Define the Next Phase of Content

The content demands placed on creators, agencies, and virtual influencer teams continue to grow. AI-powered photorealistic digital models provide a practical response by separating content volume from human time and energy.

These tools do more than increase efficiency. Creators can explore many settings, aesthetics, and concepts without organizing constant shoots, while still preserving a familiar on-screen identity. Agencies gain more predictable content pipelines and can reduce creator burnout by shifting some production to digital doubles or AI-native models.

As AI systems improve, audiences are likely to focus less on whether content is AI-generated and more on whether it feels relevant, authentic, and engaging. Teams that learn how to integrate AI responsibly now can build a lasting advantage in reach, responsiveness, and creative flexibility.

Creators who want to scale their content without losing quality or control can explore photorealistic digital models as a core part of their toolkit. Start creating now with Sozee and build AI-powered models that support consistent, high-quality content at scale.