Key Takeaways

- Realistic Vision v6.0 and Juggernaut XL are leading models for hyper-realistic faces with strong skin detail and eye rendering.

- Use 5-7 core prompt descriptors like “hyper-realistic portrait, detailed skin pores, 8k” plus targeted negative prompts to avoid distortions.

- ControlNet with IP-Adapter v2 keeps faces consistent across images by combining structure control with facial feature preservation.

- LoRA stacking and precise inpainting repair artifacts like distorted eyes and hands without retraining full models.

- Scale content production with Sozee, which creates hyper-realistic faces from 3 photos with no training or setup.

7 Stable Diffusion Techniques That Deliver Hyper-Realistic AI Faces

1. Realistic Vision v6.0 and Juggernaut XL for Photoreal Faces

Realistic Vision is one of the strongest Stable Diffusion checkpoints for realistic humans, with particularly convincing faces and eyes, while Juggernaut XL is a well-tuned SDXL model that excels at photographic styles. Together they form a strong base for professional face generation in 2026.

Realistic Vision v6.0 focuses on skin pore detail and natural eye rendering, which suits tight portraits and beauty shots. Juggernaut XL delivers cinematic lighting and balanced composition that feel like high-end photography. Both improve on older checkpoints in facial accuracy, expression control, and consistency across batches.

Download these models from Civitai and pair them with the Euler a sampler, 20-30 steps, and a CFG scale of 7-9. Generate at 1024×1024 with Juggernaut XL. Realistic Vision v6.0 is an SD 1.5 fine-tune, so render closer to 512×768 and upscale afterward. For img2img, keep denoising strength between 0.35 and 0.5 when you want a consistent likeness across multiple generations.

Prompt: hyper-realistic portrait, detailed skin pores, natural lighting, raw photo, 8k uhd, professional photography

Negative: cartoon, anime, plastic skin, disfigured eyes, blurry
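The settings above can be collected into a single request body. The sketch below assumes a locally running AUTOMATIC1111 web UI with its API enabled. The dictionary keys follow that tool's `/sdapi/v1/txt2img` endpoint, while the helper name `build_txt2img_payload` and its defaults are illustrative choices rather than part of any library. It only builds the dictionary and does not send a request.

```python
import json


def build_txt2img_payload(prompt, negative_prompt, steps=25, cfg_scale=7.5,
                          width=1024, height=1024, sampler_name="Euler a",
                          seed=-1):
    """Collect the generation settings from this section into one request body.

    Hypothetical helper: the range checks mirror the article's advice
    (20-30 steps, CFG 7-9) and are not enforced by Stable Diffusion itself.
    """
    if not 20 <= steps <= 30:
        raise ValueError("steps should stay within the suggested 20-30 range")
    if not 7 <= cfg_scale <= 9:
        raise ValueError("cfg_scale should stay within the suggested 7-9 range")
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": steps,
        "cfg_scale": cfg_scale,
        # 1024x1024 suits SDXL checkpoints; pass smaller values for SD 1.5 models.
        "width": width,
        "height": height,
        "sampler_name": sampler_name,
        # -1 asks the web UI for a random seed; fix it to reproduce a result.
        "seed": seed,
    }


payload = build_txt2img_payload(
    prompt=("hyper-realistic portrait, detailed skin pores, natural lighting, "
            "raw photo, 8k uhd, professional photography"),
    negative_prompt="cartoon, anime, plastic skin, disfigured eyes, blurry",
)
print(json.dumps(payload, indent=2))
```

Keeping the range checks in one place makes it harder to drift away from the recommended values when you batch many generations.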

2. Prompt Engineering with Simple, High-Impact Descriptors

Clear, focused prompts create more realistic faces than long, cluttered descriptions. Lead the positive prompt with quality descriptors such as “8k resolution, ultra-fine details, professional photography” to establish a technical baseline before you describe facial traits.

Keep prompts to 5-7 core descriptors that focus on realism. Use phrases like “hyper-realistic portrait, detailed skin texture, cinematic lighting, raw photo, 8k” and then add (keyword:1.2) style weights for elements that matter most. This approach reduces overprompting, which often introduces warped features and noisy backgrounds.

Support your main prompt with strong negative prompts. Include terms such as “cartoon, disfigured eyes, plastic skin, blurry, distorted proportions” to block the most common artifacts that break realism.
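As a rough illustration of the 5-7 descriptor rule and the `(keyword:1.2)` weighting syntax, the sketch below assembles a prompt string from a short list. The function name `build_prompt` and the cap of seven descriptors are assumptions made for this example, not features of Stable Diffusion.

```python
def build_prompt(descriptors, weights=None, max_descriptors=7):
    """Join descriptors into one prompt, wrapping weighted ones as (keyword:weight).

    Hypothetical helper: it rejects lists longer than max_descriptors to
    discourage overprompting.
    """
    weights = weights or {}
    if len(descriptors) > max_descriptors:
        raise ValueError(f"use at most {max_descriptors} core descriptors")
    parts = []
    for descriptor in descriptors:
        weight = weights.get(descriptor)
        # Only emphasized descriptors get the (keyword:weight) wrapper.
        parts.append(f"({descriptor}:{weight})" if weight is not None else descriptor)
    return ", ".join(parts)


prompt = build_prompt(
    ["hyper-realistic portrait", "detailed skin texture", "cinematic lighting",
     "raw photo", "8k"],
    weights={"detailed skin texture": 1.2},
)
print(prompt)
# hyper-realistic portrait, (detailed skin texture:1.2), cinematic lighting, raw photo, 8k
```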

| Setting | Value | Purpose |
|---|---|---|
| Steps | 20-30 | Balance between quality and speed |
| CFG Scale | 7-9 | Controls how closely images follow the prompt |
| Resolution | 1024×1024 | Preserves facial detail and texture |

3. ControlNet and IP-Adapter v2 for Consistent Faces

ControlNet with IP-Adapter v2 keeps the same face recognizable across many images. IP-Adapter maps image embeddings from a CLIP encoder to sequence features using a projection model for enhanced face and scene consistency, which improves likeness retention in 2026 workflows.

Set IP-Adapter weight between 0.7 and 1.0 and pair it with ControlNet Canny or OpenPose for pose and structure control. This combination locks in facial geometry while you still experiment with lighting, camera angles, outfits, and backgrounds. You get a character that stays recognizable from shot to shot.

Use reference photos with clear faces, neutral lighting, and minimal motion blur. Run them through ControlNet preprocessing to extract edge maps or pose data, then apply IP-Adapter to preserve facial identity while you generate new scenes.

ControlNet Settings:

- Model: control_v11p_sd15_canny
- Weight: 0.8-1.0
- IP-Adapter Weight: 0.7-0.9
- Control Mode: Balanced
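One way to keep these values from drifting between runs is to store them in a small validated object. The sketch below is a hypothetical container: `FaceConsistencySettings` and its field names are invented for illustration and do not correspond to any ControlNet or IP-Adapter API. The IP-Adapter check uses the wider 0.7-1.0 range from the paragraph above, while the default sits inside the narrower 0.7-0.9 range from the settings list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FaceConsistencySettings:
    """Hypothetical holder for the ControlNet and IP-Adapter values in this section."""

    controlnet_model: str = "control_v11p_sd15_canny"
    controlnet_weight: float = 0.9
    ip_adapter_weight: float = 0.8
    control_mode: str = "Balanced"

    def __post_init__(self):
        # Ranges mirror the article's recommendations, not hard limits of the tools.
        if not 0.8 <= self.controlnet_weight <= 1.0:
            raise ValueError("ControlNet weight should be between 0.8 and 1.0")
        if not 0.7 <= self.ip_adapter_weight <= 1.0:
            raise ValueError("IP-Adapter weight should be between 0.7 and 1.0")


settings = FaceConsistencySettings()
print(settings)
```

Because the dataclass is frozen, a settings object cannot be changed by accident halfway through a batch.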

4. LoRA Stacking for Realism Without Full Retraining

Z-Image LoRA training enables consistent AI influencer face generation using 15-30 high-quality images for repeatable facial features, and training completes in 30-40 minutes instead of hours.

Stack multiple LoRAs at 0.6-0.8 strength to push realism further. Combine a character LoRA for likeness with a general realism LoRA that improves skin, lighting, and lens effects. This method upgrades image quality without the cost and complexity of retraining a full base model.

On RTX 5090 GPUs, Z-Image Turbo LoRA training fits in that 30-40 minute window and improves face detail consistency, with better skin texture and more natural lighting than base models alone.
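In the AUTOMATIC1111 web UI, LoRAs are activated with `<lora:name:strength>` tags in the prompt, so stacking can be sketched as string assembly. The helper name `stack_loras` and the LoRA names `my_character` and `skin_realism` below are placeholders, and the clamping to 0.6-0.8 simply mirrors the range suggested above.

```python
def stack_loras(prompt, loras, low=0.6, high=0.8):
    """Append <lora:name:strength> tags, clamping each strength to [low, high].

    Hypothetical helper: `loras` is a list of (name, strength) pairs.
    """
    tags = []
    for name, strength in loras:
        clamped = min(max(strength, low), high)
        tags.append(f"<lora:{name}:{clamped:.2f}>")
    return " ".join([prompt] + tags)


stacked = stack_loras(
    "hyper-realistic portrait, raw photo",
    [("my_character", 0.8), ("skin_realism", 0.95)],  # placeholder LoRA names
)
print(stacked)
# hyper-realistic portrait, raw photo <lora:my_character:0.80> <lora:skin_realism:0.80>
```

The second LoRA was requested at 0.95 and is pulled back to 0.8, which keeps a general realism LoRA from overpowering the character LoRA.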

Get started free with instant face generation on Sozee if you want professional results without any LoRA training at all.

5. Inpainting to Repair Eyes, Teeth, and Hands

Inpainting gives you precise control when you need to fix specific flaws in an otherwise strong image. Focus on problem zones such as eyes, teeth, and hands, and use targeted masks with denoising strength between 0.4 and 0.6 for smooth blending.

Create tight masks around distorted features and keep the same model and sampler settings you used for the original render. This approach keeps the overall style intact while you refine small areas. ComfyUI workflows work well for iterative inpainting, so you can run several passes without degrading the rest of the image.

For eye fixes, mask only the iris and pupil, drop denoising to around 0.35-0.45, and add “detailed eyes, sharp focus” to your inpainting prompt. This method corrects distortions while preserving the subject’s identity.
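The masking and denoising advice above can be sketched with two small helpers. Both are hypothetical: `padded_mask_box` grows a feature's bounding box by a few pixels while staying inside the image, and `pick_denoise` returns the midpoint of the range this section suggests for each region. The pixel coordinates in the usage lines are made up for illustration.

```python
# Denoising ranges taken from this section; the midpoint is a starting guess.
DENOISE_RANGES = {
    "eyes": (0.35, 0.45),
    "teeth": (0.4, 0.6),
    "hands": (0.4, 0.6),
}


def padded_mask_box(feature_box, image_size, padding=8):
    """Expand (left, top, right, bottom) by `padding` pixels, clamped to the image."""
    left, top, right, bottom = feature_box
    width, height = image_size
    return (
        max(left - padding, 0),
        max(top - padding, 0),
        min(right + padding, width),
        min(bottom + padding, height),
    )


def pick_denoise(region):
    """Return the midpoint of the suggested denoising range for a region."""
    low, high = DENOISE_RANGES[region]
    return round((low + high) / 2, 2)


eye_box = padded_mask_box((410, 300, 470, 330), (1024, 1024))
print(eye_box, pick_denoise("eyes"))
# (402, 292, 478, 338) 0.4
```

A small padding gives the inpainting pass enough surrounding skin to blend into without touching the rest of the face.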

6. Sampler Choices and Strong Negative Prompts

Use negative prompts to exclude common distortions. List the unwanted traits themselves, such as “blurriness, distorted fingers, strange perspective”, because the negative prompt already tells the model what to avoid and a leading “no” adds nothing. Sampler selection then shapes how natural the final skin and lighting appear.

Euler a and DPM++ 2M Karras at 20-50 steps with CFG 5-8 often create the most natural skin textures and reduce uncanny valley effects. Higher step counts add detail but can introduce noise, while moderate CFG values prevent harsh contrast and over-saturated colors.

Extend negative prompts with technical terms such as “jpeg artifacts, compression, oversaturated, plastic, artificial lighting” when you want images that pass as real photos for commercial use.
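When negative terms come from several lists, a small merge step keeps the final prompt clean. The sketch below is a hypothetical helper, `merge_negative_terms`, that lowercases terms, drops duplicates, and strips a leading “no ”, on the assumption that a negative prompt should name the unwanted trait directly.

```python
def merge_negative_terms(*groups):
    """Merge term lists into one negative prompt, keeping first-seen order."""
    seen = set()
    merged = []
    for group in groups:
        for term in group:
            cleaned = term.strip().lower()
            # A negative prompt names what to avoid, so "no " is redundant.
            if cleaned.startswith("no "):
                cleaned = cleaned[3:].strip()
            if cleaned and cleaned not in seen:
                seen.add(cleaned)
                merged.append(cleaned)
    return ", ".join(merged)


base_terms = ["cartoon", "no blurriness", "Plastic skin"]
technical_terms = ["jpeg artifacts", "oversaturated", "plastic skin"]
negative_prompt = merge_negative_terms(base_terms, technical_terms)
print(negative_prompt)
# cartoon, blurriness, plastic skin, jpeg artifacts, oversaturated
```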

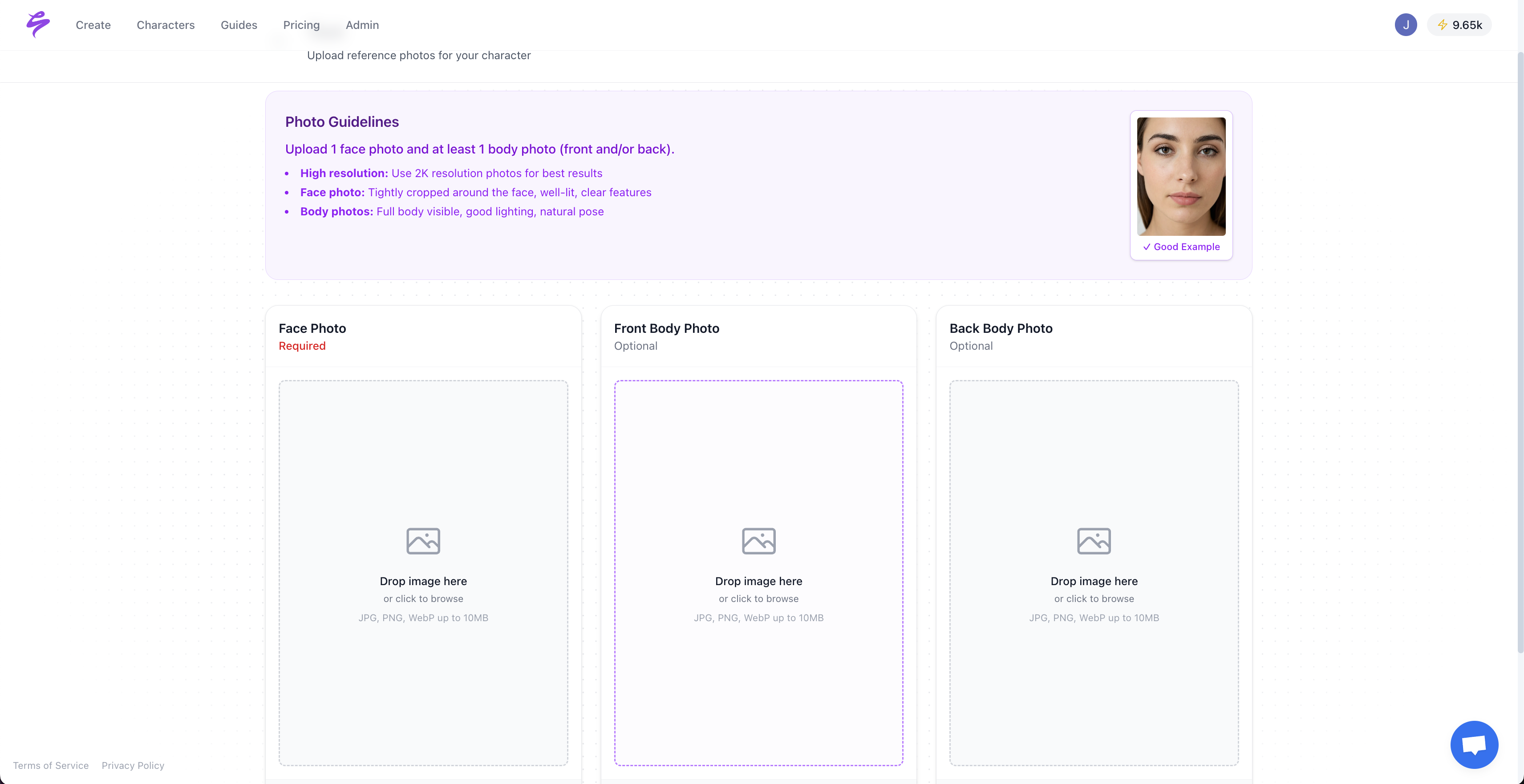

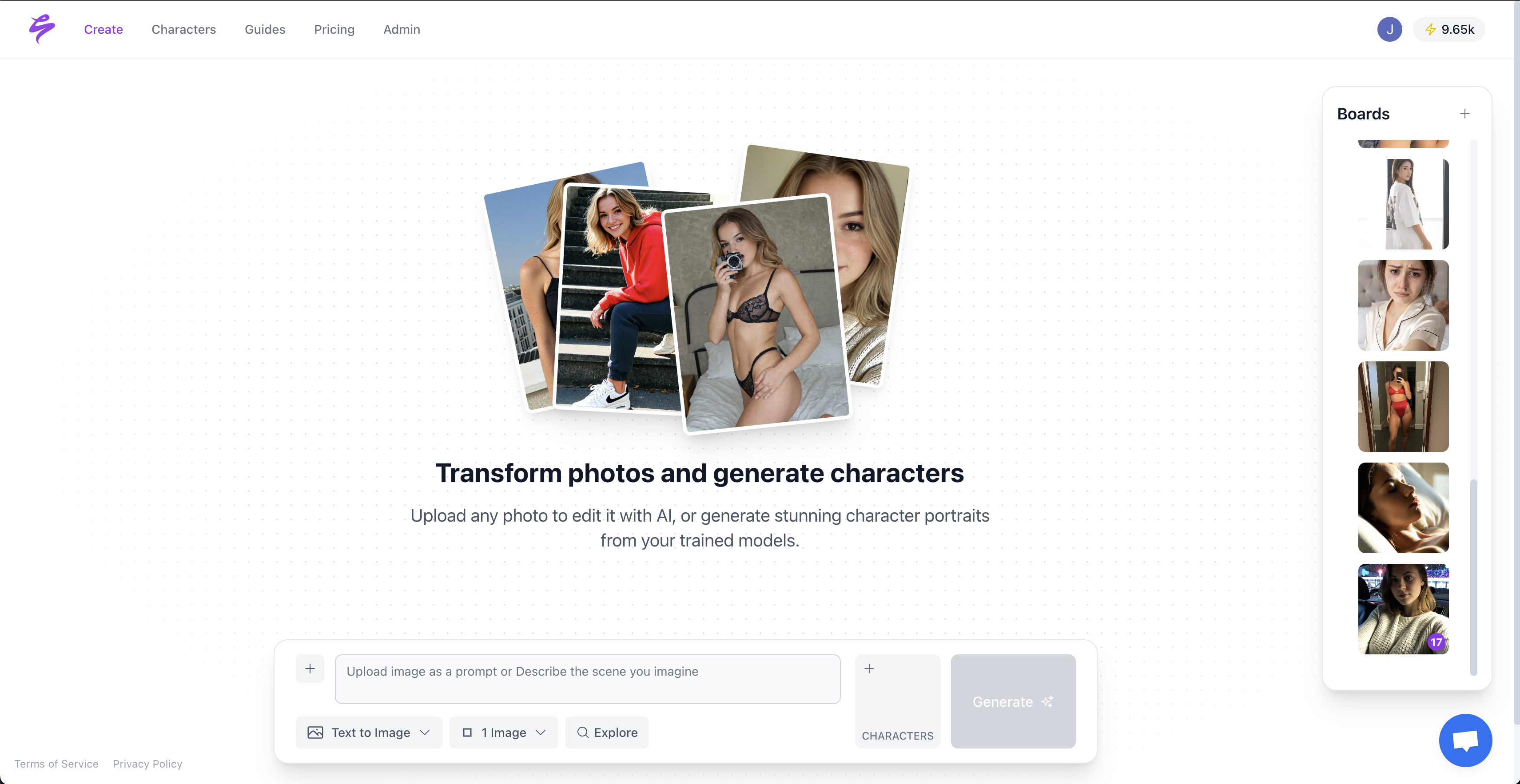

7. Sozee.ai for No-Training, Creator-Ready Faces

Sozee.ai removes the setup, training, and tuning that Stable Diffusion usually requires. You upload three photos and receive photorealistic faces with consistent identity across every output.

Sozee’s proprietary system focuses on hyper-realistic faces and stable likeness, which lets agencies and creators scale content while keeping the same character across campaigns, thumbnails, and paywalled sets.

Creators on OnlyFans, TikTok, and virtual influencer projects can skip GPUs, LoRAs, and ControlNet graphs. Upload three photos, generate unlimited content, and grow revenue without the technical learning curve that blocks many Stable Diffusion users.

Troubleshooting Common Stable Diffusion Face Problems

| Issue | Cause | SD Fix | Sozee Fix |
|---|---|---|---|
| Distorted eyes | High denoising | Lower to 0.35, then inpaint | Auto-refined instantly |
| Wonky hands | Prompt variance | Use ControlNet OpenPose | Perfect anatomy guaranteed |
| Inconsistent likeness | Random seed variation | Use fixed seeds plus LoRA | 3-photo instant consistency |
| Uncanny valley | Model limitations | Combine multiple SD techniques | Hyper-realistic by default |

Conclusion: Grow Beyond Stable Diffusion Limits

These seven techniques cover the most effective ways to generate realistic Stable Diffusion faces in 2026. Realistic Vision v6.0, Juggernaut XL, ControlNet, IP-Adapter, LoRAs, and inpainting each solve a specific problem in building monetizable AI characters.

Traditional SD workflows still demand time, hardware, and technical skill that many creators and agencies lack. Even with the right models and prompts, scaling consistent, high-quality faces across hundreds of assets remains a challenge.

Start creating now with Sozee’s three-photo face generation and get hyper-realistic, consistent results without complex setups, training runs, or constant troubleshooting.

FAQ

What is the best Stable Diffusion model for faces in 2026?

Realistic Vision v6.0 leads for detailed facial features and natural eye rendering, while Juggernaut XL excels at cinematic portrait quality. For creators who want instant results without model training, Sozee.ai delivers hyper-realism from three uploaded photos with consistent identity across every generation.

How do you generate realistic people in Stable Diffusion?

Use a strong base model such as Realistic Vision, then add a focused prompt like “hyper-realistic portrait, detailed skin pores, 8k” with matching negative prompts. Combine IP-Adapter for face consistency, ControlNet for pose control, and samplers such as Euler a at 20-30 steps with CFG 7-9. Finish with inpainting to repair artifacts in eyes, teeth, or hands.

What is the most realistic Stable Diffusion model available?

SD 3.5 Large reaches the highest overall fidelity with an ELO score of 1,245, and Flux 2 produces strong cinematic scenes. Juggernaut XL remains a top choice for SDXL-based realistic portraits. Platforms such as Sozee focus specifically on creator workflows and deliver hyper-realistic faces tuned for consistency and speed.

How can I avoid the uncanny valley in AI face generation?

Pair ControlNet with IP-Adapter for stable facial structure, then refine negative prompts to block distortions and plastic textures. Use samplers like DPM++ 2M Karras with CFG 5-8 and apply targeted inpainting for any remaining artifacts. Sozee removes uncanny valley issues by using advanced likeness reconstruction that prioritizes natural skin, eyes, and expressions.

Is there a free AI face generator that works with photos?

Sozee offers instant hyper-realistic face generation from uploaded photos without technical setup or model training. Many free SD tools exist, but they usually require configuration, hardware, and prompt tuning, while Sozee focuses on fast, professional outputs with consistent characters.

What is the best free AI face generator compared to Stable Diffusion?

Stable Diffusion is free but demands hardware, time, and technical knowledge to reach professional quality. Sozee’s platform provides higher-quality, ready-to-use faces with zero setup, which makes it a practical option for creators and agencies that need reliable AI faces without managing complex SD pipelines.