Key Takeaways

- AI makes content creation faster but also increases risks around deepfakes, data exposure, and copyright disputes for creators.

- Creator-focused AI privacy tools use private model training, content authentication, and automated monitoring to protect likeness, data, and IP.

- Public AI tools can expose prompts and creative concepts to logging, discovery, and potential leakage, so creators need clear policies and careful tool selection.

- Platforms that prioritize privacy, human oversight, and transparent ownership terms give creators more control, autonomy, and peace of mind.

- Sozee offers a privacy-first AI content studio for creators; start protecting your likeness and workflows by signing up for Sozee.

The Creator’s Conundrum: Why Privacy Is the Core Challenge in the AI Era

Creators operate in an environment where AI accelerates production but also amplifies risk. Likeness, personal data, and intellectual property now sit within systems that can copy, remix, or misuse them at scale.

AI content creation introduces legal risks such as copyright infringement, unclear ownership of AI-generated works, and personal liability when outputs are inaccurate or harmful. Generative models trained on copyrighted material can produce work that is identical or confusingly similar to existing content, which exposes creators to infringement claims even when they believed outputs were original.

The threat surface continues to expand. AI growth is driving concern over deepfakes, misinformation, and data privacy, with more than half of Canadian respondents reporting worry about AI-enabled deepfake content. Malicious actors can now assemble convincing fake videos or images with only a handful of public photos.

Creators also remain accountable for how they use AI. Professional standards in several fields emphasize that humans cannot shift responsibility to AI for errors or misconduct, and the same expectation applies to creators.

The impact of weak privacy protection can include stolen likeness, unauthorized derivative content, leaked personal data, copyright disputes, reputational harm, and serious financial loss. Effective tools and workflows now form part of a basic safety net for professional creators.

AI Tools for Creator Privacy Protection: What This Solution Category Covers

AI tools for creator privacy protection apply AI and security technology to defend against AI-driven risks. They aim to secure the same assets that AI can exploit: a creator's likeness, data, and IP.

Core capabilities often include:

- Private model training that isolates creator data within dedicated models

- Content authentication systems that verify which outputs are legitimate

- Deepfake detection that flags unauthorized likeness use

- Secure data handling and encryption to prevent leakage

- Automated monitoring that scans platforms for misuse
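To make "content authentication" more concrete, here is a minimal sketch of one common building block: tagging each approved output with an HMAC-SHA256 code so that a later copy can be checked against the original bytes. It is an illustration only, not how any particular platform works; the key value and the names `sign_content` and `verify_content` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would live in a secrets manager, never in source code.
SECRET_KEY = b"replace-with-a-securely-stored-key"


def sign_content(content: bytes, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 tag bound to these exact bytes."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Recompute the tag and compare in constant time; any byte change fails."""
    return hmac.compare_digest(sign_content(content, key), tag)


if __name__ == "__main__":
    approved = b"<bytes of an approved image or video>"
    tag = sign_content(approved)
    print(verify_content(approved, tag))         # True
    print(verify_content(approved + b"x", tag))  # False
```

HMAC is symmetric, so only someone holding the key can verify a tag. Public provenance schemes such as C2PA content credentials rely on certificate-based digital signatures instead, so third parties can check authenticity without access to a secret.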

General cybersecurity tools help, but they rarely address likeness rights, ownership of AI-supported work, or the day-to-day workflows of creators and agencies. Creator-focused privacy tools close this gap by aligning with how creators work and earn money.

These tools also support growth. Creators can scale content production with less risk, maintain consistent visual identity, work under pseudonyms if they choose, and spend more energy on creative direction instead of damage control.

Start creating with privacy-aware AI tools that keep your likeness and content under your control.

How Sozee Supports Creator Privacy Protection

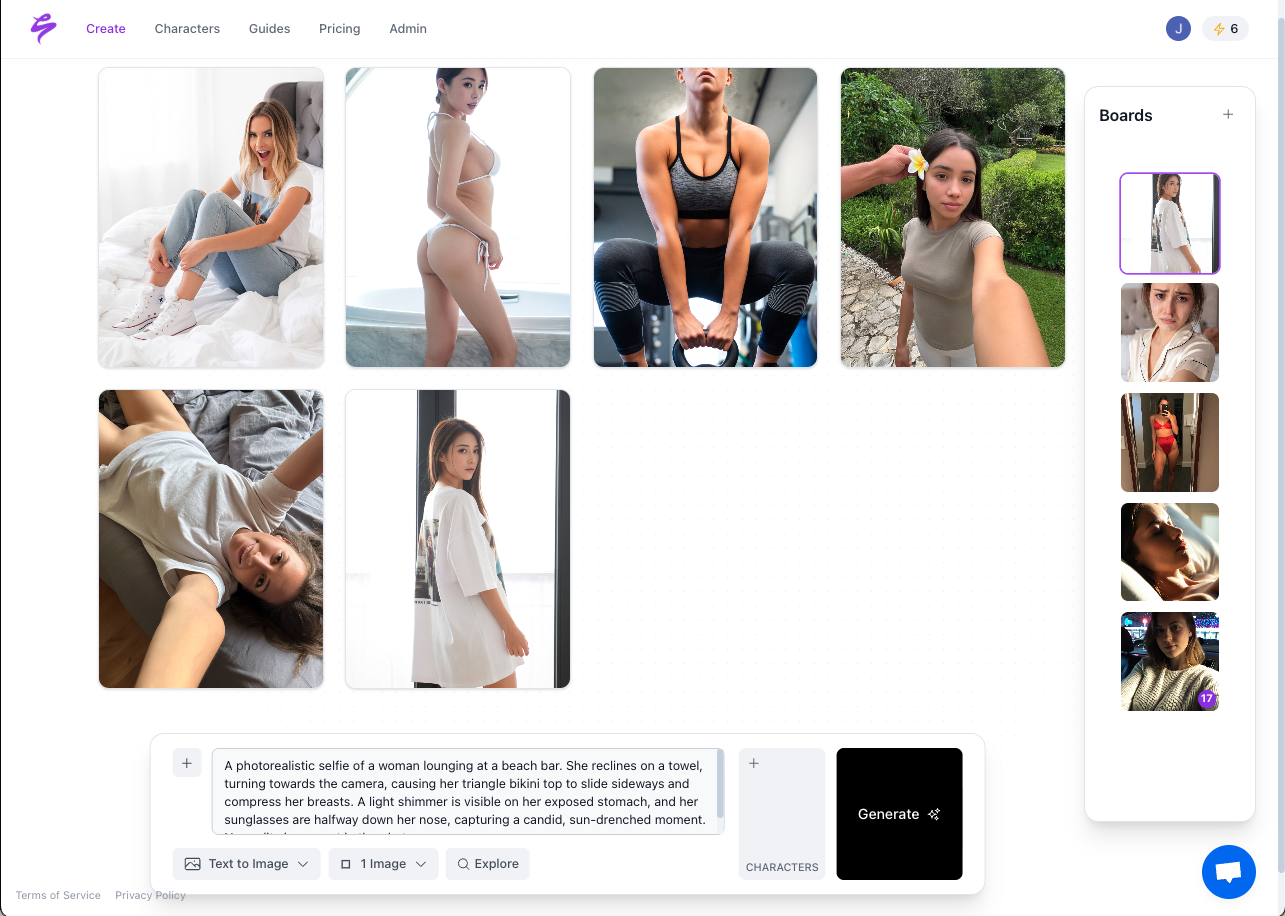

Sozee is an AI content studio designed for creators, agencies, and virtual influencer builders with privacy and control as core principles. The platform helps protect likeness and data while still enabling large-scale content creation.

Key ways Sozee supports creator privacy include:

- Private likeness models. Sozee creates isolated models for each creator, and those models are not reused to train other systems. This approach keeps your likeness exclusive to your account and use cases.

- Hyper-realistic output with creator control. The platform produces content that reflects real cameras, lighting, and skin detail while keeping the creator in charge of style, scenarios, and usage.

- Protected sensitive inputs. The minimum set of photos required to recreate a likeness stays inside a private model, which reduces the chance of misuse or leakage and supports data sovereignty.

- Secure workflows for agencies and teams. Approval flows and role-based access help agencies enforce brand standards and reduce unauthorized use when multiple people work on a project.

- Anonymity for niche or private creators. Creators can build digital personas that never reveal their real identity, which supports privacy-first strategies and niche content with low production cost.
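Role-based access and approval flows of the kind described above can be sketched in a few lines. The roles, the permission map, and the one-approval rule below are hypothetical examples chosen for illustration; they do not describe Sozee's actual permission model.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    CREATOR = "creator"
    EDITOR = "editor"
    VIEWER = "viewer"


# Hypothetical permission map: which actions each role may perform.
PERMISSIONS: dict[Role, set[str]] = {
    Role.CREATOR: {"generate", "approve", "publish"},
    Role.EDITOR: {"generate"},
    Role.VIEWER: set(),
}


@dataclass
class Asset:
    name: str
    approved_by: set[str] = field(default_factory=set)
    published: bool = False


def can(role: Role, action: str) -> bool:
    """Return True if the role's permission set includes the action."""
    return action in PERMISSIONS.get(role, set())


def approve(asset: Asset, user: str, role: Role) -> None:
    """Record an approval, rejecting users whose role cannot approve."""
    if not can(role, "approve"):
        raise PermissionError(f"{user} ({role.value}) cannot approve {asset.name}")
    asset.approved_by.add(user)


def publish(asset: Asset, user: str, role: Role, required_approvals: int = 1) -> None:
    """Publish only when the role allows it and enough approvals are recorded."""
    if not can(role, "publish"):
        raise PermissionError(f"{user} ({role.value}) cannot publish {asset.name}")
    if len(asset.approved_by) < required_approvals:
        raise PermissionError(f"{asset.name} needs {required_approvals} approval(s) first")
    asset.published = True


if __name__ == "__main__":
    shoot = Asset("beach-shoot-01")
    approve(shoot, "ana", Role.CREATOR)
    publish(shoot, "ana", Role.CREATOR)
    print(shoot.published)  # True
```

Keeping permissions in one map means an agency can tighten or loosen a role in a single place instead of scattering checks through the workflow.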

This approach treats privacy as a design principle rather than a limitation: it still allows high-volume content creation while keeping control with the creator.

Explore Sozee’s privacy-focused workflows for creator and agency teams.

Beyond Protection: How AI Tools Support Autonomy and Peace of Mind

Keeping Your Likeness Out of Deepfakes

Private AI models help limit the material that attackers can use to generate convincing deepfakes. When likeness data resides inside an isolated and access-controlled system, it becomes harder for outsiders to obtain the training data needed for abuse.

AI-enhanced social engineering and deepfakes already pose serious risks for individuals and organizations. Tools that combine secure training, access control, and content verification give creators clearer evidence of what is real and what is not.

Clarifying Ownership of AI-Influenced Content

Copyright rules around AI are evolving. Human involvement still plays a major role in whether AI-assisted work qualifies for protection.

Regulators and courts are tightening expectations on confidentiality, privacy, and human oversight in AI workflows. At the same time, AI-generated material can raise difficult questions about authorship and eligibility for copyright.

Creator-focused platforms that document prompts, edits, and approvals help build a record of human creative input. Clear terms on ownership and licensing further reduce ambiguity.
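One simple way to keep a tamper-evident record of human creative input is a hash-chained log, where each entry stores the hash of the entry before it. The sketch below assumes that design; the `ProvenanceLog` class and its field names are hypothetical, and whether such a record supports a copyright claim is a legal question the code cannot answer.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class ProvenanceLog:
    """Append-only, hash-chained record of prompts, edits, and approvals."""
    entries: list[dict] = field(default_factory=list)

    def record(self, actor: str, action: str, detail: str) -> dict:
        body = {
            "actor": actor,
            "action": action,  # e.g. "prompt", "edit", "approve"
            "detail": detail,
            "timestamp": time.time(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if an entry no longer matches its stored hash or the links are broken."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

Editing an earlier prompt or approval without recomputing every later hash makes `verify()` fail. To stop someone from simply recomputing the whole chain, the latest hash would need to be stored somewhere that person cannot change, and proving who made each entry would additionally require signed entries or an access-controlled system.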

Improving Data Security and Confidentiality

Public AI tools introduce additional exposure for sensitive prompts, draft scripts, and client information. A 2026 U.S. court order that required production of de-identified ChatGPT logs in copyright litigation illustrates how AI chat logs can become part of legal discovery.

Creators can reduce risk by:

- Establishing policies that prohibit entering confidential or client-identifying data into public AI tools

- Reserving sensitive work for platforms that offer private or enterprise-grade environments

- Reviewing how each provider stores logs, trains models, and handles deletion requests
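A policy against entering confidential data into public AI tools can be backed by a simple pre-send check. The sketch below assumes a team maintains its own list of blocked patterns; the regular expressions and the client codenames "Project Aurora" and "Project Falcon" are hypothetical placeholders, and pattern matching will miss anything it was not written to catch.

```python
import re

# Hypothetical blocked patterns; a real policy would be maintained by the team.
BLOCKED_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone number": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
    "client codename": re.compile(r"\bproject\s+(?:aurora|falcon)\b", re.IGNORECASE),
}


def screen_prompt(prompt: str) -> list[str]:
    """Return the names of every blocked pattern found in the prompt."""
    return [name for name, pattern in BLOCKED_PATTERNS.items() if pattern.search(prompt)]


def safe_to_send(prompt: str) -> bool:
    """Allow the prompt only if no blocked pattern matches."""
    return not screen_prompt(prompt)


if __name__ == "__main__":
    for prompt in ["Write a caption for a beach sunset photo",
                   "Draft an email to jane.doe@client.com about Project Aurora"]:
        print(safe_to_send(prompt), screen_prompt(prompt))
```

A check like this works best as a guardrail in front of a written policy, not as a replacement for it.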

Create with tools that align with professional confidentiality standards and creator workflows.

Traditional vs. AI-Powered Creator Privacy Protection

| Feature | Traditional Methods | AI-Powered Solutions |
| --- | --- | --- |
| Likeness protection | Manual monitoring and legal takedowns that respond only after misuse appears | Private likeness models, automated content identification, and deepfake detection that run continuously |
| IP ownership clarity | Human-only authorship with fewer gray areas but limited support for AI-assisted work | Provenance tracking, human-in-the-loop workflows, and clear privacy and ownership policies |
| Data security | General IT security practices and manual control over file sharing | Encrypted and isolated training environments with defined rules for data input, retention, and deletion |
| Efficiency and scale | Reliance on manual review, which slows growth | Automated monitoring, real-time risk assessment, and scalable content generation with guardrails |

Frequently Asked Questions about AI Tools for Creator Privacy Protection

Can AI-generated content be copyrighted, and how do privacy tools help?

Copyright protection usually depends on meaningful human creativity. Purely automated output may not qualify under U.S. law, while work that involves human selection, editing, and direction can have a stronger claim. Privacy-focused creator tools help by documenting prompts, edits, and approvals, and by offering clear terms on who owns final outputs. Platforms such as Sozee prioritize creator control over generated content within their workflows.

What privacy risks come with public AI tools for content creation?

Public AI tools may log prompts and outputs, use some data for training, and become subject to legal discovery, depending on product tier and settings. Model inversion and privacy leakage attacks can potentially expose training data, including creative concepts or personal details. Some major providers offer enterprise products with stricter data handling, so risk levels vary by plan. Even so, creators are safest assuming that anything entered into a public model could be stored, analyzed, or requested in legal proceedings.

How can AI tools stop my likeness from being used in deepfakes?

Creator privacy tools reduce deepfake risks by isolating likeness data, controlling access, and authenticating real content. Private models keep raw likeness material out of public training sets. Detection systems can scan platforms for suspicious content, and some solutions add invisible watermarks or use blockchain-based verification to help prove authenticity. A combined approach of secure training, monitoring, and response offers the strongest defense.
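The monitoring side can be illustrated with a perceptual hash, which lets a scanner flag re-uploads of a creator's existing images even after small edits such as a brightness change. This is a minimal average-hash sketch on a grayscale pixel grid; the function names and the threshold of 10 differing bits (out of 64) are assumptions. It detects copies of known content, not newly synthesized deepfakes, which require dedicated detection models.

```python
def average_hash(pixels: list[list[int]], size: int = 8) -> int:
    """Block-average a grayscale image (rows of 0-255 values) down to size x size,
    then emit one bit per cell: 1 if the cell is brighter than the overall mean."""
    h, w = len(pixels), len(pixels[0])
    cells = []
    for r in range(size):
        for c in range(size):
            # Each cell covers at least one row and one column, even for tiny images.
            r0 = r * h // size
            r1 = max((r + 1) * h // size, r0 + 1)
            c0 = c * w // size
            c1 = max((c + 1) * w // size, c0 + 1)
            block = [pixels[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def looks_like_copy(candidate: list[list[int]], reference: list[list[int]],
                    threshold: int = 10) -> bool:
    """Flag the candidate if its hash is within `threshold` bits of the reference."""
    return hamming(average_hash(candidate), average_hash(reference)) <= threshold


if __name__ == "__main__":
    reference = [[(x * 8) % 256 for x in range(32)] for _ in range(32)]
    brighter = [[min(255, p + 10) for p in row] for row in reference]
    print(looks_like_copy(brighter, reference))
```

In practice, images would first be decoded and converted to grayscale with an imaging library. Average hashes are also easy to defeat with crops or rotations, so production monitoring typically combines several signals.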

What should creators look for in a privacy-focused AI platform?

Effective platforms provide:

- Private or dedicated model training that does not reuse your data for other users

- Clear and accessible policies on ownership, licensing, and deletion

- Documented security controls such as encryption, access management, and regular audits

- Content authentication or provenance features

- Workflows built for creator and agency use, not just generic AI experimentation

Are AI privacy tools worth paying for compared to free options?

Free tools often fund development through data collection or broader usage of prompts and outputs, and they usually provide limited contractual protection if issues arise. Professional creators rely on their likeness and IP for income, so losses from misuse or disputes can far exceed subscription costs. Paid, privacy-focused platforms function more like core business infrastructure than optional extras.

Conclusion: Building a Future of Confident Creation

AI now sits at the center of many creator workflows, which makes privacy and security part of day-to-day creative practice rather than an afterthought. AI tools for creator privacy protection help creators capture the benefits of automation while reducing the most significant risks.

Current regulatory trends point toward stricter standards for responsible, transparent AI use, which increases the importance of privacy-first tools and clear governance.

Platforms like Sozee are building that future by focusing on private likeness models, secure workflows, and creator-first controls. These capabilities allow creators to scale output while maintaining sovereignty over their image, data, and catalog of work.

Creators who invest in privacy-conscious AI workflows can focus more time on new ideas and less time worrying about misuse. That shift enables a more sustainable and confident approach to building a digital presence.