The creator economy faces a growing imbalance. Demand for new content is estimated to exceed supply by 100 to 1, and creators, agencies, and virtual influencer builders often struggle to produce consistent, high-quality, hyper-realistic visual content at scale. This gap contributes to creator burnout, stalled agencies, wasted time, and slow brand growth.

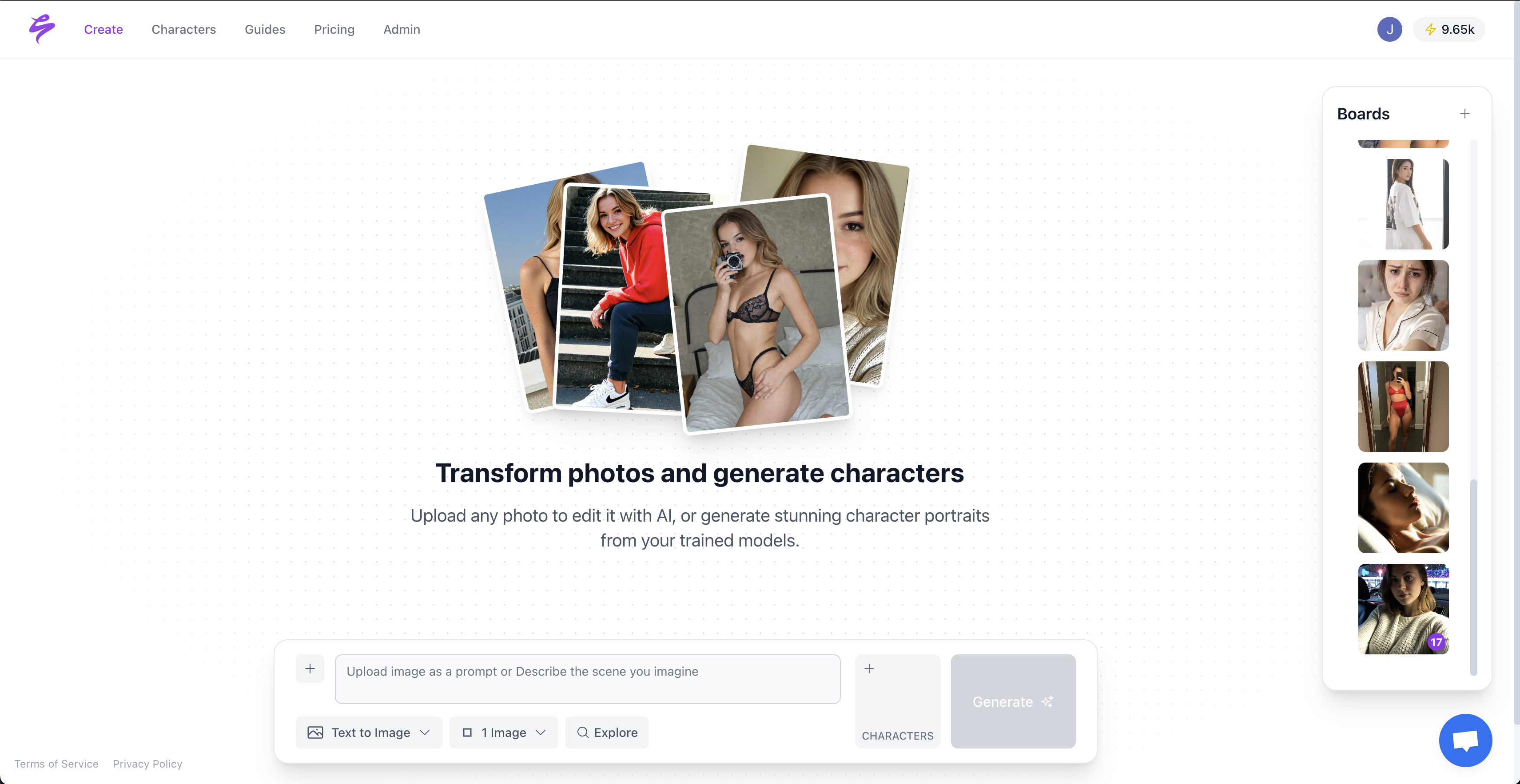

LoRA (Low-Rank Adaptation) training offers a practical way to generate hyper-realistic photos with strong consistency and efficient use of resources. It allows creators to scale visual content while keeping quality under control. At the same time, platforms such as Sozee.ai provide a faster, non-technical path to similar results, giving creators instant access to realistic AI content without building a full training pipeline.

This guide explains how to master LoRA training step by step and shows where platform solutions like Sozee.ai can simplify content production.

The Creator’s Content Crisis: Why Hyper-Realistic LoRA Matters

The modern creator economy runs on a simple equation: more content drives more traffic, which drives more sales and revenue. Creators, however, have limited time and energy, while audiences expect constant output. This mismatch creates a Content Crisis: mounting pressure to deliver consistent, high-quality visuals without burnout or unsustainable production costs.

Traditional content production requires significant resources, including:

- Professional cameras and lighting

- Location scouting and set design

- Wardrobe changes and styling

- Hours of shooting and post-production

For agencies managing many creators or teams building virtual influencers, these constraints scale into major bottlenecks that affect both revenue and operations.

LoRA training shifts this model. LoRA introduces efficient, task-specific adapters by decomposing weight updates into low-rank matrices, focusing training only on these components while freezing the main model parameters. This structure allows creators to generate hyper-realistic photos that stay consistent across many variations, which makes scale much easier to reach.
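
In the standard LoRA formulation, the decomposition described above can be written compactly. The symbols $d$, $k$, $r$, and $\alpha$ are notation introduced here for illustration, and the worked numbers are assumed values, not measurements from a specific model.

```latex
W' = W_0 + \Delta W, \qquad \Delta W = \frac{\alpha}{r}\, B A, \qquad
B \in \mathbb{R}^{d \times r}, \quad A \in \mathbb{R}^{r \times k}, \quad r \ll \min(d, k)

\text{trainable parameters: } r\,(d + k) \quad \text{instead of} \quad d\,k
```

Here $W_0$ stays frozen and only $A$ and $B$ are trained. For an assumed layer with $d = k = 1280$ and $r = 16$, that is $16 \times 2560 = 40{,}960$ trainable values instead of $1{,}638{,}400$, or 2.5 percent of the layer.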

The method is also parameter-efficient. Its design reduces training compute and memory, and because a trained adapter can be merged into the base weights, it does not have to slow inference. For creators and agencies, this leads to faster turnaround, lower operating costs, and the ability to fulfill custom content requests more quickly. LoRA enables quick multi-task learning and task switching using lightweight adapters instead of separate model instances, which works well for teams that manage several brand aesthetics or creator personas at once.

Discover how to unlock scalable hyper-realistic content with Sozee.ai, and get started today.

Getting Started: Essentials You Need Before LoRA Training

Foundational understanding of AI image generation

LoRA builds on top of foundation models such as Stable Diffusion, which generate images from text prompts through a diffusion process. LoRA differs from full model fine-tuning by keeping the original weights frozen and training only low-rank adapter matrices, which greatly reduces memory and computation requirements.

This structure lets creators customize pre-trained models for specific people, styles, or concepts without the heavy compute needed to train a model from scratch. A basic understanding of these principles will help you make informed choices about training settings and dataset design.

Required hardware and software setup

Effective LoRA training depends on having the right technical setup.

For hardware, you will typically need:

- A GPU with at least 8 GB of VRAM (16 GB or more is recommended)

- A modern consumer or professional GPU, for example RTX 4080 or RTX A6000

For software, you will need:

- Python 3.8 or higher

- PyTorch with CUDA support

- Access to a base model such as Stable Diffusion XL

- Supporting libraries such as Diffusers and Transformers

The Diffusers library from Hugging Face offers straightforward LoRA implementations for image models such as Stable Diffusion, with detailed control over training configuration and adapter management. This toolkit removes much of the boilerplate work and provides ready-made functions for both training and inference.

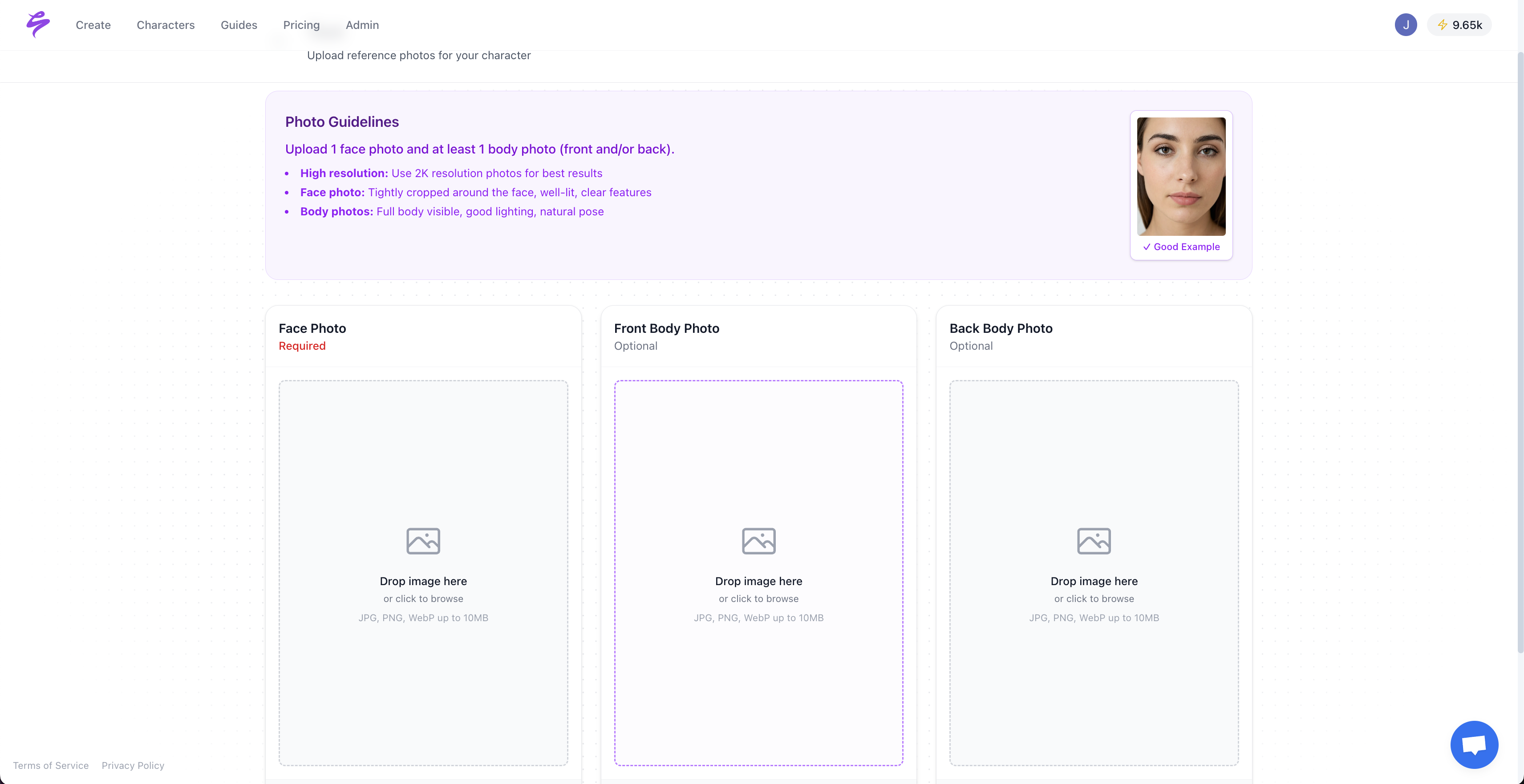

Curating your dataset for realistic LoRA results

Dataset quality has a direct effect on realism and consistency. A relatively small set of dozens to a few hundred high-quality, diverse images can be enough for effective adaptation, but broader variety and better curation provide more creative control.

Focus on images that meet these standards:

- High resolution, ideally 1024×1024 or higher

- Consistent and clear lighting

- Sharp focus and visible facial features

- Diverse poses, expressions, and angles

Avoid blurry, low-resolution, or heavily filtered photos, since they reduce the quality of the trained adapter.

You can improve organization by:

- Using a consistent naming convention that encodes pose, lighting, or mood

- Grouping images by angle or scene type

- Keeping a simple log of which images perform best during testing
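
One way to make a naming convention like this machine-readable is sketched below. The layout `<subject>_<angle>_<lighting>_<mood>_<index>.<ext>` and the names `ImageTags`, `parse_filename`, and `group_by_angle` are assumptions made for illustration, not a standard that any training tool requires.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageTags:
    subject: str
    angle: str
    lighting: str
    mood: str
    index: int


def parse_filename(name: str) -> ImageTags:
    """Parse '<subject>_<angle>_<lighting>_<mood>_<index>.<ext>' (hypothetical layout)."""
    stem = name.rsplit(".", 1)[0]  # drop the file extension
    parts = stem.split("_")
    if len(parts) != 5:
        raise ValueError(f"unexpected filename layout: {name!r}")
    subject, angle, lighting, mood, index = parts
    return ImageTags(subject, angle, lighting, mood, int(index))


def group_by_angle(names):
    """Group filenames by the angle encoded in each name."""
    groups = defaultdict(list)
    for name in names:
        groups[parse_filename(name).angle].append(name)
    return dict(groups)
```

With names in this shape, a call such as `group_by_angle(["mia_profile_softbox_smiling_003.jpg", "mia_front_window_neutral_001.png"])` returns one list per angle, which makes gaps in coverage easy to spot.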

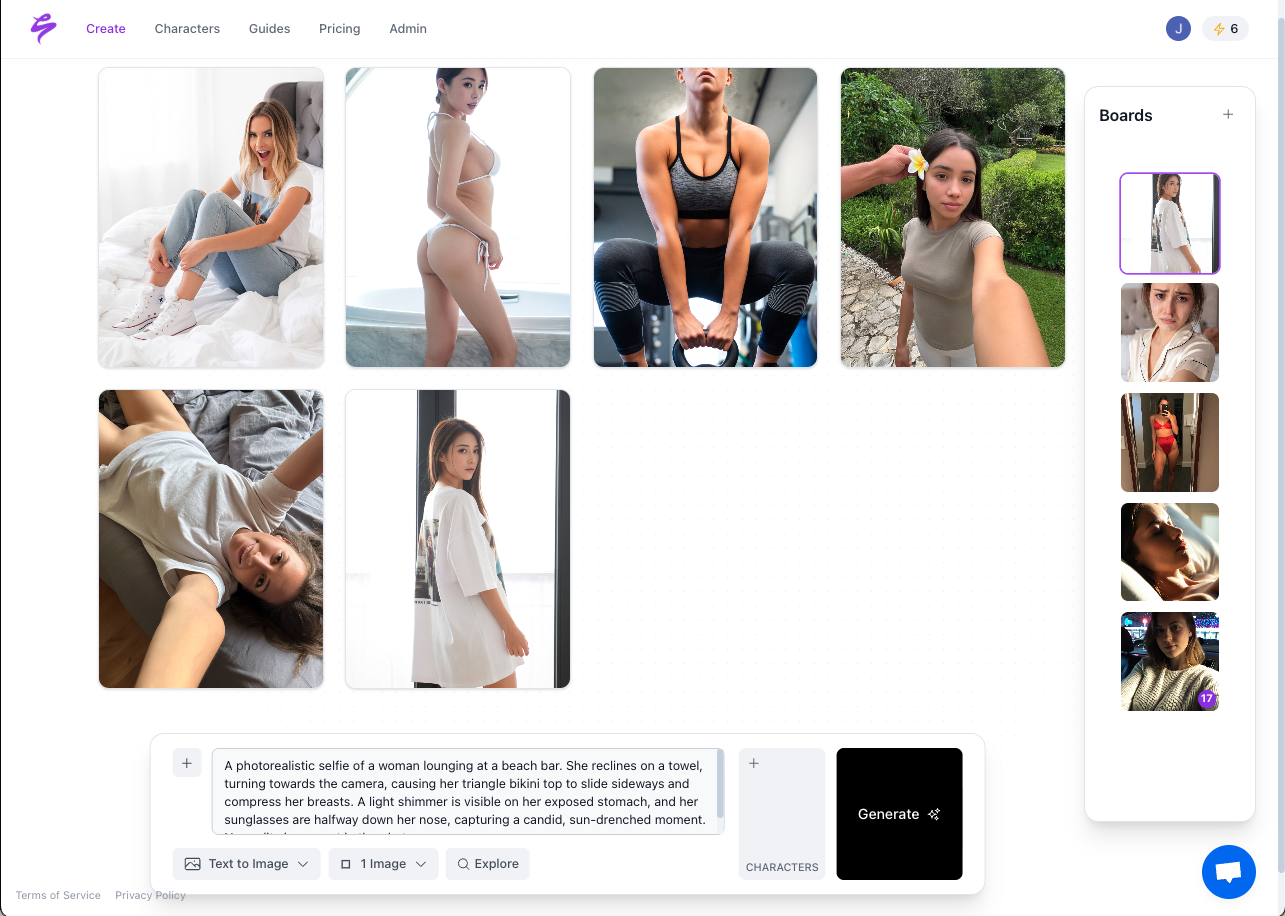

Pro tip: LoRA workflows require hardware setup and careful dataset curation. Sozee.ai reduces that effort. You can upload as few as three photos and generate hyper-realistic content within minutes, without technical setup.

Step-by-Step Guide to LoRA Training for Hyper-Realistic Photo Generation

Step 1: Prepare your hyper-realistic image dataset

Strong LoRA results start with focused dataset preparation. Aim to collect 50 to 200 high-resolution images of your subject that cover a range of lighting, poses, facial expressions, and camera angles. Useful preprocessing steps include keeping resolution consistent, cropping images so the subject is prominent, optionally removing backgrounds for consistency, and writing descriptive tags that improve prompt-based control.

Crop each image so the subject sits clearly in frame, often with a 1:1 aspect ratio. Remove distracting backgrounds when possible, since clutter can confuse the training process and reduce the adapter’s ability to learn the subject’s key features.

Write short captions for each image. Include:

- Pose and body position

- Lighting type and direction

- Clothing and accessories

- Distinctive traits such as tattoos, hair color, or makeup style

Store images in organized folders and keep filenames descriptive. This structure simplifies later iterations and comparison between versions.
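
The captioning step can be sketched as a small helper that joins the attributes listed above and saves the result next to each image. Writing a `.txt` file with the same base name as the image is a convention some training tools use, so treat it as an assumption and check what your trainer expects. The trigger phrase `sks woman` in the usage note is also a placeholder.

```python
from pathlib import Path


def build_caption(trigger, pose, lighting, clothing, traits=()):
    """Join caption parts into one comma-separated line, skipping empty parts."""
    parts = [trigger, pose, lighting, clothing, *traits]
    return ", ".join(p.strip() for p in parts if p and p.strip())


def write_caption(image_path, caption):
    """Write the caption to a .txt file beside the image and return that path."""
    caption_path = Path(image_path).with_suffix(".txt")
    caption_path.write_text(caption + "\n", encoding="utf-8")
    return caption_path
```

For example, `build_caption("sks woman", "standing, three-quarter view", "soft window light from left", "black blazer", ["short red hair"])` produces a single line that covers pose, lighting, clothing, and a distinctive trait.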

Step 2: Set up your technical environment and base model

Preparing your environment before training reduces friction later. Create a dedicated Python virtual environment and install PyTorch, Diffusers, Transformers, and supporting libraries.

Next, download your chosen base model. Stable Diffusion XL is a strong choice for photorealistic generation and works well as a base for LoRA adapters.

Then complete a quick technical check:

- Verify that your GPU drivers are current

- Confirm that CUDA is available and recognized by PyTorch

- Load the base model and generate a simple image to test the pipeline

This basic test often prevents hours of debugging later in the process.
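
A minimal sketch of the CUDA part of that check follows. It assumes PyTorch may or may not be installed and only reports what it finds; the function name `check_environment` and the report keys are illustrative. Loading the base model and generating a test image are left out because they depend on your chosen model and library versions.

```python
def check_environment():
    """Return a small report on PyTorch and CUDA availability."""
    report = {
        "torch_installed": False,
        "torch_version": None,
        "cuda_available": False,
        "gpu_name": None,
        "vram_gb": None,
    }
    try:
        import torch
    except ImportError:
        return report  # PyTorch is missing, so nothing else can be checked

    report["torch_installed"] = True
    report["torch_version"] = torch.__version__
    if torch.cuda.is_available():
        report["cuda_available"] = True
        report["gpu_name"] = torch.cuda.get_device_name(0)
        props = torch.cuda.get_device_properties(0)
        report["vram_gb"] = round(props.total_memory / 1024**3, 1)
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

If `cuda_available` is false on a machine with a supported GPU, the usual suspects are outdated drivers or a CPU-only PyTorch build.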

Prepare your training script using the Diffusers library. Example scripts in the documentation can be adapted for your dataset and hardware, which reduces the amount of custom code you need to maintain.

Step 3: Configure LoRA parameters for realistic results

Training quality depends heavily on your choice of hyperparameters. Important hyperparameters include rank (r, often between 4 and 64 for images), alpha (a scaling factor, often 1 to 2 times the rank), and dropout (commonly between 0.05 and 0.2, based on dataset size and quality).

A practical starting point for many creator-focused projects is:

- Rank: 16

- Alpha: 32

- Dropout: 0.1

Higher rank values increase the adapter’s capacity to learn detail but also increase training time and memory usage. Alpha controls how strongly the adapter modifies the base model. Values that are too high can cause overfitting and artifacts, while values that are too low can lead to weak adaptation.
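
The starting values above can be captured in a small settings object that also flags values outside the ranges mentioned in this step. This is a sketch: the class name `LoraSettings` is hypothetical, and the `scale` property assumes the common convention in which the adapter update is multiplied by alpha divided by rank.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoraSettings:
    rank: int = 16
    alpha: float = 32.0
    dropout: float = 0.1

    def __post_init__(self):
        # Reject values that can never be valid.
        if self.rank < 1:
            raise ValueError("rank must be a positive integer")
        if self.alpha <= 0:
            raise ValueError("alpha must be positive")
        if not 0.0 <= self.dropout < 1.0:
            raise ValueError("dropout must be in [0, 1)")

    @property
    def scale(self) -> float:
        """Multiplier applied to the low-rank update (assumed alpha / rank)."""
        return self.alpha / self.rank

    def warnings(self):
        """List settings that fall outside the ranges suggested in this guide."""
        notes = []
        if not 4 <= self.rank <= 64:
            notes.append("rank is outside the typical 4 to 64 range")
        if not self.rank <= self.alpha <= 2 * self.rank:
            notes.append("alpha is outside 1 to 2 times the rank")
        if not 0.05 <= self.dropout <= 0.2:
            notes.append("dropout is outside the typical 0.05 to 0.2 range")
        return notes
```

With the defaults, `LoraSettings().scale` is 2.0 and `warnings()` is empty. Keeping alpha tied to rank this way means that raising the rank without raising alpha weakens the adapter's effective strength.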

Freezing different matrices during training and using importance-based pruning strategies can further refine expressivity and efficiency. These techniques work well once you are comfortable with the core workflow and want more precise control.

Step 4: Execute LoRA training for fine-tuning

Start your training run using the prepared dataset and chosen parameters, and monitor progress from the start. Track:

- Training loss

- Validation loss, if you use a validation split

- Sample images generated at checkpoints

Most LoRA training sessions for image generation finish within one to four hours on modern hardware, depending on dataset size, batch size, and rank.

Use a learning rate scheduler that gradually lowers the learning rate during training. Many workflows start around 1e-4 and reduce the rate by half every few hundred steps. This pattern supports stable convergence and reduces instability in later stages.
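
The halving pattern can be written as a plain function of the step number. The interval of 300 steps and the floor of 1e-6 are assumed values chosen for illustration; the text above only says "every few hundred steps".

```python
def halving_schedule(step, base_lr=1e-4, halve_every=300, min_lr=1e-6):
    """Learning rate that halves every `halve_every` steps, never below `min_lr`."""
    if step < 0:
        raise ValueError("step must be non-negative")
    if halve_every < 1:
        raise ValueError("halve_every must be at least 1")
    lr = base_lr * 0.5 ** (step // halve_every)
    return max(lr, min_lr)
```

Steps 0 through 299 use the base rate, step 300 drops to half of it, and so on until the floor is reached. Most training frameworks ship their own schedulers, so a function like this is mainly useful for planning or plotting a schedule before a run.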

Watch for common issues:

- Overfitting, visible when generated images closely copy training examples with little variation

- Underfitting, visible when images do not capture the subject’s identity or key details

You can respond to these issues by adjusting rank, learning rate, or training duration until results reach a balanced level of detail and flexibility.
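
If you log training and validation loss at each checkpoint, a rough heuristic like the one below can flag runs worth a closer look. It is a sketch built on assumptions: the window size, the labels, and the rule itself are illustrative, and loss curves for image models are noisy, so sample images remain the primary signal.

```python
def diagnose(train_losses, val_losses, window=3, tolerance=0.0):
    """Return a rough label from per-checkpoint training and validation losses."""
    if len(train_losses) != len(val_losses):
        raise ValueError("loss lists must have the same length")
    if len(train_losses) < 2 * window:
        return "insufficient data"

    def mean(values):
        return sum(values) / len(values)

    # Compare the first and last windows of training loss.
    train_improving = mean(train_losses[-window:]) < mean(train_losses[:window]) - tolerance
    # Compare the last validation window with the one just before it.
    val_worsening = mean(val_losses[-window:]) > mean(val_losses[-2 * window:-window]) + tolerance

    if train_improving and val_worsening:
        return "possible overfitting"
    if not train_improving:
        return "possible underfitting"
    return "ok"
```

A "possible overfitting" label suggests lowering rank, adding dropout, or stopping earlier, while "possible underfitting" points toward more steps, a higher rank, or a higher learning rate.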

Step 5: Test, evaluate, and iterate your LoRA model

After training completes, generate test images using a range of prompts. Focus on prompts that vary:

- Lighting and time of day

- Pose and camera angle

- Scene and background type

- Clothing and styling

A strong LoRA adapter maintains subject identity across these variations while still allowing creative flexibility.

Evaluate key aspects of realism, including:

- Skin texture and tone

- Facial symmetry and expression

- Lighting direction and shadows

- Details such as hands, eyes, and hair

Document issues that appear often, such as distorted hands or inconsistent lighting. These patterns will guide the next round of improvements.

If results fall short of your standards, you can:

- Refine the dataset by removing poor-quality images

- Add more diverse examples for weak scenarios

- Adjust hyperparameters for more or less capacity

- Extend training for additional steps

This iterative cycle is often the key to reaching professional-grade quality suitable for monetized content.

Step 6: Deploy your LoRA adapter for consistent content creation

Once the adapter performs well, integrate it into your production workflow. Adapters can be merged with the original weights for a permanent effect, or kept modular for flexible task switching. Modular setups let you maintain multiple adapters for different creators or styles while using a single base model.
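
The difference between merged and modular use can be shown on a single weight matrix. The sketch below uses plain Python lists instead of a tensor library, assumes the common alpha / rank scaling, and uses hypothetical function names; real toolkits apply the same arithmetic across many layers.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def forward(weight, x):
    """Apply a weight matrix to an input vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


def merge_lora(w0, lora_b, lora_a, alpha, rank):
    """Merged deployment: fold the scaled update B @ A into the base weights."""
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(w0, delta)]


def forward_modular(w0, lora_b, lora_a, alpha, rank, x):
    """Modular deployment: keep the adapter separate and add its output."""
    scale = alpha / rank
    base = forward(w0, x)
    update = forward(lora_b, forward(lora_a, x))
    return [b + scale * u for b, u in zip(base, update)]
```

Both paths give the same output for the same input. Merging saves the extra adapter computation at inference, while the modular form lets you swap `lora_a` and `lora_b` without touching `w0`.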

Build a prompt library that contains tested, high-performing prompts. Include dedicated prompt sets for:

- Professional headshots

- Lifestyle and casual scenes

- Fashion and lookbook content

- Niche or platform-specific scenarios

LoRA weights act as modular, plug-and-play components that support rapid switching between visual styles or subjects. A structured prompt library multiplies the value of these adapters.
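
A prompt library can start as a dictionary of tested templates with named slots. The categories mirror the list above, but the template wording, the slot names, and the `build_prompt` helper are placeholders to replace with prompts you have validated yourself.

```python
# Hypothetical templates; swap in wording that has tested well for your adapter.
PROMPT_LIBRARY = {
    "headshot": "professional headshot of {subject}, {lighting}, neutral background, sharp focus",
    "lifestyle": "candid photo of {subject}, {scene}, natural light, relaxed pose",
    "fashion": "full-body lookbook photo of {subject} wearing {outfit}, {lighting}",
}


def build_prompt(category, subject, **details):
    """Fill a stored template; raises KeyError for unknown categories or missing slots."""
    template = PROMPT_LIBRARY[category]
    return template.format(subject=subject, **details)
```

For example, `build_prompt("headshot", "sks woman", lighting="soft studio lighting")` fills the headshot template. Failing loudly on a missing slot keeps half-filled prompts out of production batches.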

To keep quality consistent over time, set simple quality control steps, such as:

- Standardized prompt formats for specific use cases

- Regular visual checks of sample outputs

- Light post-processing rules for color, sharpness, or framing

LoRA vs. Sozee.ai: Choosing the Best Path to Hyper-Realistic Content

LoRA training offers deep customization and control, while platform-based tools offer speed and simplicity. Understanding the trade-offs helps you choose a path that matches your goals, skills, and timelines.

| Feature | LoRA Training (DIY) | Sozee.ai (Platform) |
|---|---|---|
| Technical Skill Required | High, including Python and basic machine learning | No technical skill required |
| Setup Time | Days to weeks | Near-instant setup |
| Input Required | 50 to 200 or more curated photos | As few as 3 photos |
| Output Quality | Highly realistic, when tuned by experienced users | Hyper-realistic results suitable for creator workflows |

LoRA adapts models to specialized tasks with strong performance while training far fewer parameters, which makes fine-tuning large models practical for new computer vision domains such as hyper-realistic image generation. That capability is powerful but also introduces technical and operational overhead that not every creator wants to manage.

Creators and agencies that prioritize speed and scale often benefit from platform-based solutions that handle this complexity in the background. Sozee.ai focuses on instant likeness reconstruction from a small number of input photos, which directly addresses the core challenge of the Content Crisis: the need for nearly unlimited, high-quality content without complex setup.

Measuring Success: What Hyper-Realistic LoRA Training Can Deliver

Well-implemented LoRA workflows can improve several key metrics that matter to creators and agencies.

Content output often increases once the workflow is stable, making it easier to maintain consistent posting schedules that support engagement and revenue. Agencies can standardize visual quality across multiple creators, since LoRA reduces variables such as lighting conditions, location access, and scheduling.

Production costs can decrease when fewer live shoots are required. Savings may come from reduced spending on photographers, studios, equipment rentals, travel, and long editing sessions.

Time-to-content can shrink. Traditional photo shoots may require days of planning and execution, while LoRA-generated content can be created in hours once training is complete.

Monetization-focused creators can also gain a competitive edge by handling more custom requests. Fans increasingly expect personalized content, and LoRA can help creators offer more variations without extending physical production time.

Reach both realism and scale for your hyper-realistic content. Start creating now with Sozee.ai.

Advanced Techniques and Next Steps for LoRA Mastery

Optimization techniques for efficient LoRA training

After you are comfortable with basic LoRA training, more advanced optimization can improve efficiency and quality. Optimization methods such as pruning, parameter freezing or sharing, and quantization can further reduce trainable parameters while maintaining output quality.

You can use pruning to remove less important adapter parameters after training. A common approach is magnitude-based pruning, which removes parameters below a chosen threshold, for example 10 to 20 percent of the maximum absolute value in the adapter weights.
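
Magnitude-based pruning with a threshold relative to the largest weight can be sketched as follows. The function name and the plain-list representation are assumptions for illustration; in practice the same rule would be applied to each adapter tensor.

```python
def magnitude_prune(weights, fraction_of_max=0.1):
    """Zero out entries whose magnitude is below fraction_of_max * max |w|.

    Returns the pruned matrix and the number of entries that were zeroed.
    """
    if not 0.0 <= fraction_of_max <= 1.0:
        raise ValueError("fraction_of_max must be between 0 and 1")
    max_abs = max((abs(w) for row in weights for w in row), default=0.0)
    threshold = fraction_of_max * max_abs

    pruned, removed = [], 0
    for row in weights:
        new_row = []
        for w in row:
            if w != 0.0 and abs(w) < threshold:
                new_row.append(0.0)  # below threshold: drop it
                removed += 1
            else:
                new_row.append(w)
        pruned.append(new_row)
    return pruned, removed
```

After pruning, regenerate a few test images and compare them with the unpruned adapter, since the acceptable threshold depends on the subject and the rank used.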

Quantization reduces the precision of adapter weights from 32-bit to 16-bit or even 8-bit formats. This change can cut memory usage significantly while keeping most of the visual quality, which is useful for deployment on hardware with limited memory.
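
To make the 8-bit case concrete, the sketch below applies symmetric quantization to a list of weights: each value is stored as an integer between -127 and 127 plus one shared scale. This is one simple scheme chosen for illustration, not the method any particular library uses, and the function names are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] with one shared scale factor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]
```

Each restored value differs from the original by at most about half of `scale`, while storage drops from 32 bits to 8 bits per weight plus one float for the scale.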

Multi-LoRA blending for complex styles

Experienced users can combine more than one LoRA adapter to build richer visual styles. LoRA supports the creation and coexistence of multiple task-specific adapters for a single base model, which allows flexible and rapid switching between different content styles.

A common pattern uses weighted blending. During inference, you can assign different strength values to multiple adapters, for example one for the subject and one for a specific artistic or photographic style. This structure helps produce personalized content in defined styles while maintaining photorealism.
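
On a single layer, weighted blending amounts to a weighted sum of each adapter's weight update. The sketch below shows that arithmetic with plain lists; the function name and the example strengths are assumptions, and real toolkits apply per-adapter strengths across all adapted layers.

```python
def blend_deltas(deltas, strengths):
    """Return the weighted sum of several adapter updates of identical shape."""
    if not deltas or len(deltas) != len(strengths):
        raise ValueError("need one strength per adapter update")
    rows, cols = len(deltas[0]), len(deltas[0][0])
    blended = [[0.0] * cols for _ in range(rows)]
    for delta, strength in zip(deltas, strengths):
        if len(delta) != rows or any(len(row) != cols for row in delta):
            raise ValueError("all adapter updates must have the same shape")
        for i in range(rows):
            for j in range(cols):
                blended[i][j] += strength * delta[i][j]
    return blended
```

A typical starting point is full strength for the subject adapter and a lower strength, such as 0.5, for the style adapter, then adjusting while watching whether the subject's identity holds.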

This blending approach expands creative options without requiring a new training run for every style combination, which keeps both time and compute requirements manageable.

Ethical considerations in hyper-realistic generation

Hyper-realistic generation introduces important ethical and legal responsibilities. You should use training data that is collected with consent and within the bounds of local law and platform policies. Clear documentation of data sources and usage rights helps reduce risk.

Technical safeguards are worth considering, such as invisible watermarking or metadata tags that mark content as AI-generated. These practices support transparency and help reduce misuse.
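
As a lightweight form of metadata tagging, you can write a small disclosure record next to each generated image. The sketch below is not invisible watermarking and does not follow any formal provenance standard; the field names, the `.json` sidecar layout, and the function name are assumptions for illustration.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def write_ai_disclosure(image_path, generator, adapter_name):
    """Write '<image name>.json' beside the image, marking it as AI-generated."""
    image_path = Path(image_path)
    record = {
        "ai_generated": True,
        "generator": generator,
        "adapter": adapter_name,
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    sidecar = image_path.with_name(image_path.name + ".json")
    sidecar.write_text(json.dumps(record, indent=2), encoding="utf-8")
    return sidecar
```

Sidecar files are easy to lose when images are re-uploaded or re-encoded, so teams that need durable labeling usually pair a record like this with embedded metadata or a watermarking tool.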

Internal guidelines can also help. Many teams define rules for when and how AI-generated images can resemble real people, how to disclose AI use to audiences, and which scenarios require extra review. Clear standards help maintain audience trust and protect both creators and brands.

Frequently Asked Questions about LoRA for Hyper-Realistic Photos

Ideal dataset size for hyper-realistic LoRA training

The ideal dataset size depends on your use case and the level of detail you need. For many creator-focused scenarios, 50 to 100 high-quality, varied images are enough for effective LoRA training. This requirement is modest compared to traditional machine learning workflows that may need thousands of examples.

Quality matters more than quantity. A smaller set of sharp, well-lit, high-resolution images usually beats a larger set of noisy or inconsistent photos. Include a range of poses, expressions, lighting setups, and angles so the adapter can generalize.

Specialized use cases that demand very strict consistency or complex style combinations may benefit from 100 to 200 images. Beyond that range, returns often diminish unless the subject matter itself is highly diverse.

How LoRA improves efficiency compared to full model fine-tuning

LoRA improves efficiency by updating only a small set of adapter parameters instead of the entire model. Full fine-tuning would adjust hundreds of millions or even billions of parameters, while LoRA trains compact low-rank matrices that usually amount to a small fraction of that count.

This reduction often cuts the number of trainable parameters by well over 90 percent, which lowers both memory usage and training time. Training runs that might take days or weeks with full fine-tuning can often complete in hours on a consumer-grade GPU.
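
The size of the reduction for one layer follows directly from the matrix shapes. The sketch below compares a full weight matrix with a rank-limited adapter for the same layer; the dimensions in the usage note are assumed values, and the reduction for a whole model depends on which layers receive adapters.

```python
def full_params(d_out, d_in):
    """Trainable values when the whole d_out x d_in weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable values for a LoRA pair: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


def reduction_percent(d_out, d_in, rank):
    """Percentage of trainable values saved by the adapter on this layer."""
    return 100.0 * (1 - lora_params(d_out, d_in, rank) / full_params(d_out, d_in))
```

For an assumed 1024 by 1024 layer at rank 8, the adapter trains 16,384 values instead of 1,048,576, a reduction of about 98.4 percent for that layer.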

The modular structure of LoRA adapters also supports efficient workflows. Teams can switch between different subjects or styles by loading different adapters, without reloading or retraining the base model itself.

Merging LoRA models with the base model for consistent output

LoRA adapters support two main deployment patterns: modular and merged. In modular deployment, the adapter remains separate from the base model and can be switched on or off as needed. This approach is helpful when you manage several creators or brands.

Merged deployment combines the adapter weights with the base model to form a new model version that is specialized for your subject or style. This setup reduces file management and can provide a slight speed improvement at inference.

Modular deployment is often best when you need flexibility. Merged deployment works well once you have a finalized configuration that you plan to use for an extended period.

Most critical hyperparameters for LoRA photo realism

Three hyperparameters have the most impact on photo realism.

Rank (r) controls how expressive the adapter can be. Values between 8 and 32 are common for photorealistic work. Higher ranks allow more detail but also increase memory usage and the risk of overfitting.

Alpha is the scaling factor, often set to one or two times the rank value. Alpha determines how strongly the adapter modifies the base model. If alpha is too low, the adaptation may be weak. If alpha is too high, artifacts or unstable behavior can appear.

Dropout rate helps prevent overfitting by randomly zeroing a fraction of the activations that feed the adapter layers during training. Values around 0.1 work well in many cases. Smaller datasets may benefit from slightly higher dropout to reduce memorization of specific images.

Sozee.ai compared to DIY LoRA training for hyper-realistic results

Sozee.ai focuses on removing the technical barriers that keep many creators from using advanced AI tools. DIY LoRA training offers deep control but requires time, hardware, and comfort with scripting and model management.

With Sozee.ai, creators can upload as few as three photos and begin generating content shortly after onboarding. LoRA training typically requires 50 to 200 curated images, plus hardware setup and experimentation with training settings.

The Sozee.ai workflow is optimized for platforms such as OnlyFans, Instagram, and TikTok, so creators can focus on content strategy and audience interaction instead of infrastructure.

Conclusion: Build Infinite Content Capacity with Hyper-Realism

LoRA training provides a structured path to generating hyper-realistic photos at scale. When used well, it can reduce production costs, shorten timelines, and give creators and agencies more control over visual output.

The core strengths of LoRA, including parameter-efficient adaptation, modular deployment, and relatively fast training cycles, address many long-standing limits in creator workflows. At the same time, the setup and experimentation required may not fit every creator’s schedule, budget, or technical comfort level.

Creators who prioritize speed and simplicity can turn to platform solutions like Sozee.ai. By enabling high-quality content from a small number of input photos, Sozee.ai offers a direct route to scalable content production without building a custom ML pipeline.

The choice between DIY LoRA training and a platform depends on your goals and resources. Both paths aim at the same outcome: reliable, high-quality visual content that supports engagement and revenue without forcing creators into constant live production.

Future-ready creators will rely less on physical availability and more on repeatable, AI-assisted workflows. The tools to support that shift already exist and are accessible today.

Stop waiting and start creating. Get started now to generate hyper-realistic content at scale and grow your influence with less strain on your time.