Key Takeaways

- Privacy-safe AI helps creators and agencies reduce the risk of data breaches, likeness misuse, and regulatory penalties when scaling content.

- Core practices such as data minimization, role-based access controls, and clear transparency notices build a stronger foundation for compliant AI content workflows.

- Understanding rules like the EU AI Act, CCPA, and China’s watermarking requirements keeps global distribution strategies aligned with emerging AI regulations.

- Private, isolated AI models give creators more control over their digital likeness and reduce exposure compared with traditional, broadly trained AI generators.

- Sozee provides privacy-first AI tools that protect creator data and likeness while enabling fast, scalable content production. Sign up for Sozee to get started.

The Imperative of Privacy-Safe AI for Creators and Agencies

The creator economy must now balance rapid AI adoption with growing privacy expectations and stricter regulations. Creators and agencies that rely on AI to scale output face real risks, including data breaches, misuse of digital likeness, and legal exposure that can undermine brand trust.

By 2027, more than 40% of AI-related data breaches are projected to stem from improper cross-border use of generative AI, which raises the stakes for anyone using AI tools with audience or likeness data. Weak controls can lead to regulatory penalties and lasting reputational damage, both of which directly affect monetization.

Privacy-first practices provide clear upside. Strong data protection reduces the likelihood and cost of incidents, while transparent AI usage reinforces audience confidence and can support higher engagement and conversions. A privacy-safe approach also keeps content strategies more resilient as new laws take effect.

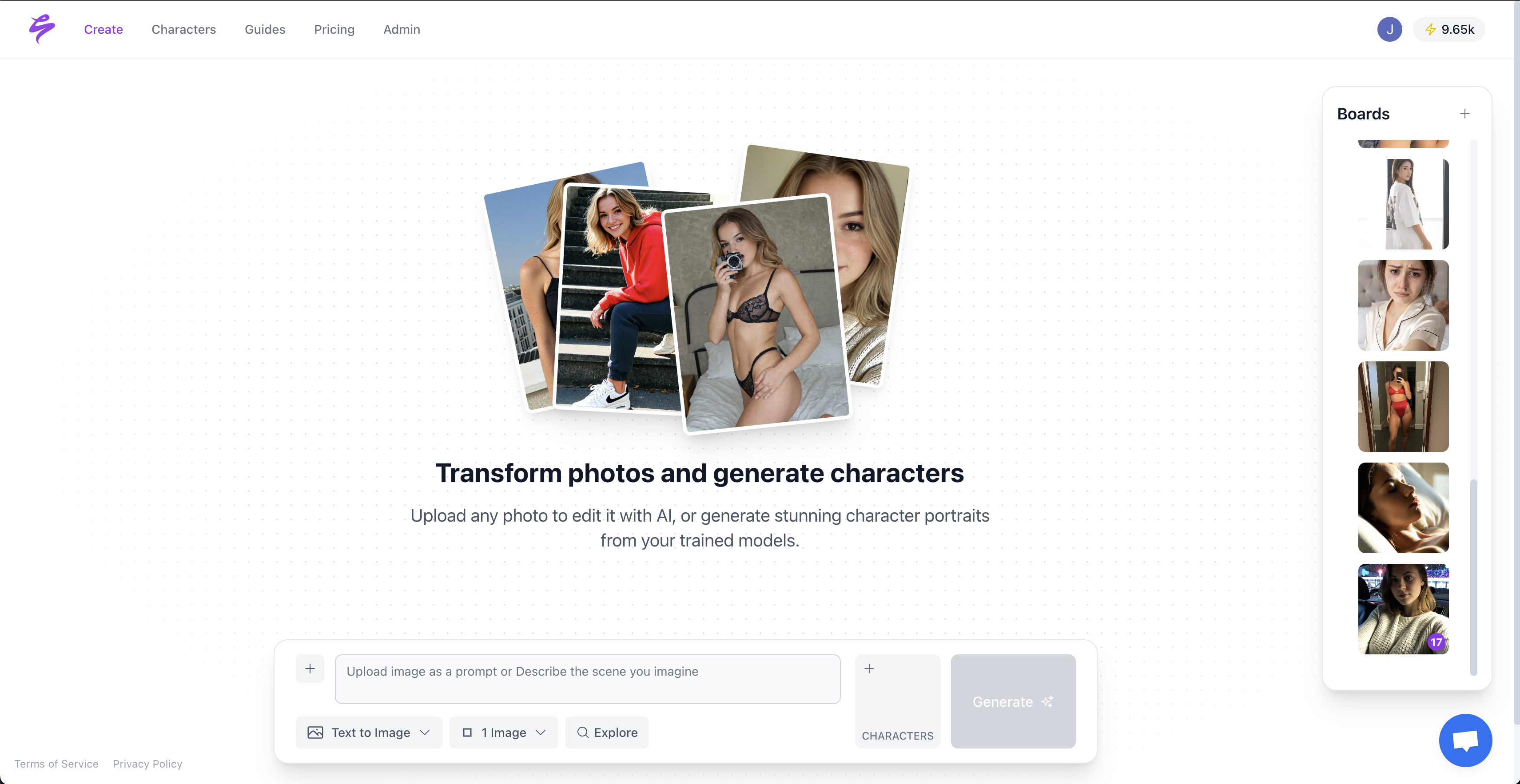

Platforms built on private, isolated models, such as Sozee, address these needs by keeping creator likeness separate from public training data while still producing realistic, on-brand content. This structure lets creators scale their catalogs without sacrificing control over their digital identity.

Sign up for Sozee to start building privacy-safe AI content workflows that protect your brand as you grow.

Foundational Principles for Privacy-Safe AI Content

Data Minimization and Purpose Limitation

Privacy-safe AI starts with using only the data needed for a defined purpose. Creators can regularly review prompts, reference files, and training assets to confirm that each item has a clear role in content generation and is not retained longer than required.
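A periodic review like this can be partly automated. The sketch below is illustrative only: the `Asset` fields, the per-asset `retention_days` limit, and the sample file names are hypothetical, and real retention periods depend on your own policies and applicable law.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Asset:
    name: str
    purpose: str          # why the asset is kept; empty means undocumented
    stored_on: date
    retention_days: int   # hypothetical per-asset retention limit


def review_assets(assets, today):
    """Return (name, reason) pairs for assets that need attention."""
    flagged = []
    for asset in assets:
        if not asset.purpose.strip():
            flagged.append((asset.name, "no documented purpose"))
        elif today - asset.stored_on > timedelta(days=asset.retention_days):
            flagged.append((asset.name, "retention period exceeded"))
    return flagged


# Sample data, invented for illustration.
assets = [
    Asset("reference_face_01.png", "likeness model training", date(2025, 1, 10), 90),
    Asset("old_prompt_log.txt", "", date(2024, 6, 1), 30),
    Asset("brand_palette.json", "style guidance", date(2025, 5, 1), 365),
]

flagged = review_assets(assets, today=date(2025, 6, 1))
for name, reason in flagged:
    print(f"{name}: {reason}")
```

Flagged items are candidates for deletion or for a documented justification. The check itself deletes nothing.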

Anonymization vs. Synthetic Data Generation

Organizations are encouraged to rely on anonymization and synthetic data for development and testing environments where production data is not required. Creators can follow the same approach by reserving real likeness data for tightly controlled production use, while testing prompts and workflows on synthetic datasets.

Role-Based Access Controls in GenAI

Role-based access controls restrict who can view, edit, or export sensitive creator data and models. Agencies that manage multiple creators can assign permissions by role or client, which reduces internal misuse risk and simplifies compliance reporting.
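As a rough illustration of permissions assigned by role and by client, the sketch below checks both before allowing an action. The role names, the `ROLE_PERMISSIONS` mapping, and the user dictionaries are assumptions made for this example, not the access model of any particular platform.

```python
from enum import Enum


class Action(Enum):
    VIEW = "view"
    EDIT = "edit"
    EXPORT = "export"


# Hypothetical role-to-permission mapping for an agency.
ROLE_PERMISSIONS = {
    "account_manager": {Action.VIEW, Action.EDIT, Action.EXPORT},
    "editor": {Action.VIEW, Action.EDIT},
    "analyst": {Action.VIEW},
}


def is_allowed(user, action, client_id):
    """Allow an action only if the user is assigned to the client
    and the user's role grants that action."""
    if client_id not in user["clients"]:
        return False
    return action in ROLE_PERMISSIONS.get(user["role"], set())


editor = {"role": "editor", "clients": {"creator-a"}}
analyst = {"role": "analyst", "clients": {"creator-a", "creator-b"}}

print(is_allowed(editor, Action.EDIT, "creator-a"))     # assigned client, role can edit
print(is_allowed(editor, Action.EXPORT, "creator-a"))   # role cannot export
print(is_allowed(editor, Action.VIEW, "creator-b"))     # not assigned to this client
print(is_allowed(analyst, Action.VIEW, "creator-b"))    # view-only role, assigned client
```

Unknown roles fall back to an empty permission set, so access is denied by default. Logging each decision would also support the compliance reporting mentioned above.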

Transparency and Explainability in AI Content

Clear communication about AI usage builds trust and supports global privacy rules. Privacy notices that explain how AI is used, what is automated, and how long data is stored help audiences understand where AI appears in content without revealing creative trade secrets.

Data Subject Rights Management Workflows

Workflows that let people access, correct, or delete their data even after AI systems process it are now a core part of global privacy compliance. Creators benefit from tools and processes that can locate data tied to an individual and update or remove it, while preserving the overall content library.
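A minimal sketch of such a workflow is shown below, assuming each library item records the IDs of the people whose data or likeness it contains. The `LibraryItem` structure and the sample IDs are hypothetical. Real systems also need to cover backups, caches, and any models trained on the data.

```python
from dataclasses import dataclass, field


@dataclass
class LibraryItem:
    item_id: str
    # IDs of the people whose data or likeness appears in the item.
    subject_ids: set = field(default_factory=set)


def find_items_for_subject(library, subject_id):
    """Access request: list the IDs of items tied to one person."""
    return [item.item_id for item in library if subject_id in item.subject_ids]


def delete_subject_data(library, subject_id):
    """Deletion request: drop that person's items and keep the rest.

    Returns the remaining library and an audit list of removed item IDs.
    """
    removed = find_items_for_subject(library, subject_id)
    remaining = [item for item in library if subject_id not in item.subject_ids]
    return remaining, removed


library = [
    LibraryItem("clip-001", {"fan-42"}),
    LibraryItem("clip-002", {"creator-7"}),
    LibraryItem("clip-003", {"fan-42", "creator-7"}),
]

remaining, removed = delete_subject_data(library, "fan-42")
print("removed:", removed)
print("kept:", [item.item_id for item in remaining])
```

Removing a whole item that features several people is a simplification. In practice a team might redact or re-edit a shared item instead, so the rest of the content can stay in the library.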

Create AI content with privacy-first settings on Sozee to support audience trust from day one.

Navigating Global AI Regulations for the Creator Economy

The EU AI Act’s Impact on Content Creation

The EU AI Act applies in phases, with most of its obligations becoming applicable on August 2, 2026. Penalties for the most serious violations can reach €35 million or 7% of global annual turnover. Creators must understand how their use of AI is classified, then apply the correct disclosures and safeguards.

AI-generated content in the EU must carry machine-readable labels that identify it as artificially generated, which is especially important for synthetic audio, deepfake-style video, and text where human authorship is expected.

CCPA and US State Regulations for AI Content

California’s CCPA will require formal AI risk assessments for automated decision-making systems starting around 2026–2027. Agencies that target US audiences, and especially minors, need policies for age-gating, content filters, and documentation of how AI tools affect viewers.

China’s AI Regulations and Content Watermarking

China’s rules introduce mandatory watermarking for AI content, including audio Morse codes, encrypted metadata, and VR-based labels. Creators publishing to Chinese platforms must adopt technical watermarking that survives compression and reposting.

Balancing AI Transparency with User Privacy

Transparency requirements sometimes collide with GDPR rules when full detail about AI logic would reveal personal or confidential data. Many creators will benefit from legal guidance and standardized templates so disclosures remain accurate without oversharing.

Use Sozee to support compliant AI content strategies that align more easily with regional rules.

Advanced Strategies for Privacy-Centric AI Content Workflows

Secure AI Model Training for Likeness Protection

Private training environments give creators more control over their likeness. Isolated datasets and air-gapped infrastructure reduce the chance that face, voice, or style data leaks into public models or other users’ projects.

Building AI Governance Programs

Structured AI governance programs, ongoing risk assessments, and regulatory monitoring help agencies manage AI use across teams. Clear policies for tool selection, approvals, and reviews keep experimentation aligned with privacy goals.

Content Labeling and Provenance

Metadata and durable watermarks support both regulation and audience clarity. Labels should remain intact after platform processing so automated systems and viewers can still identify AI involvement.
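To make the idea of a machine-readable label concrete, the sketch below writes a small JSON record that marks a file as AI-generated and ties it to the file's SHA-256 hash. The field names and the generator name are invented for illustration and no regulation prescribes this format. Production workflows would more likely rely on an established provenance standard such as C2PA, or on a platform's own disclosure tools.

```python
import hashlib
import json


def build_label(content: bytes, generator: str) -> str:
    """Create a JSON label that marks the content as AI-generated."""
    label = {
        "ai_generated": True,
        "generator": generator,  # hypothetical tool name supplied by the caller
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    return json.dumps(label, sort_keys=True)


def label_matches(content: bytes, label_json: str) -> bool:
    """Check that a label still refers to exactly these bytes."""
    label = json.loads(label_json)
    return label.get("content_sha256") == hashlib.sha256(content).hexdigest()


original = b"example image bytes"
label_json = build_label(original, generator="example-generator")

print(label_matches(original, label_json))               # unchanged file
print(label_matches(b"re-encoded bytes", label_json))    # file altered after labeling
```

A hash-based record like this detects changes but does not survive them: any re-encoding by a platform produces different bytes and breaks the match. That limitation is why durable watermarks embedded in the content itself are used alongside metadata.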

Vetting Third-Party AI Content Tools

Due diligence on AI tools now includes questions about data retention, model isolation, export controls, and deletion guarantees. Creators gain leverage by preferring vendors that publish clear privacy documentation and third-party security attestations.
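The four questions above can be tracked as a simple checklist. This sketch is one possible way to spot gaps in a vendor's documentation. The topic keys and the sample answers are hypothetical, and a real review would also weigh the quality of each answer, not only its presence.

```python
# Due-diligence topics taken from the paragraph above.
REQUIRED_TOPICS = (
    "data_retention",
    "model_isolation",
    "export_controls",
    "deletion_guarantees",
)


def missing_topics(vendor_answers):
    """Return the topics a vendor has not documented (absent or blank)."""
    return [
        topic
        for topic in REQUIRED_TOPICS
        if not vendor_answers.get(topic, "").strip()
    ]


# Sample answers, invented for illustration.
vendor = {
    "data_retention": "Uploads deleted after 30 days",
    "model_isolation": "Separate model per customer",
    "export_controls": "",
}

gaps = missing_topics(vendor)
print("Follow up on:", gaps)
```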

Privacy-by-Design in Creative Workflows

Privacy-by-design means considering data protection from first prompt to final post. When privacy checks appear in briefs, templates, and review steps, compliance becomes part of the creative process instead of a last-minute hurdle.

Securing Digital Likeness in AI Content Generation: Sozee vs. Traditional AI

| Feature/Aspect | Traditional AI Generators | Sozee.ai Privacy-First |
|---|---|---|
| Likeness Training | Public or shared datasets, possible reuse for general model training | Private, isolated models per creator, likeness reserved for that creator |
| Data Security | Inconsistent protections, higher risk of data leakage or secondary use | Encrypted infrastructure, creator data remains under creator control |
| Control and Ownership | Limited control, unclear IP and reuse rights | Clear ownership terms for content and likeness usage |
| Regulatory Alignment | Generic terms of service, limited support for specific laws | Privacy-by-design architecture that reflects global privacy and AI rules |

The main difference lies in design priorities. Traditional generators often optimize for volume and feature breadth, while privacy-first platforms such as Sozee place security, consent, and control at the center of their systems.

Sozee’s isolated model structure keeps each creator’s likeness separate from other users and avoids use in general training. This setup supports transparent labeling and metadata while preserving privacy, which becomes increasingly important as regulators and audiences focus on AI-generated identity use.

Conclusion: The Future Is Privacy-Safe, AI-Powered Content

The next phase of AI in the creator economy will reward privacy-aware strategies. As laws tighten and audiences become more informed about AI, creators and agencies that invest in secure, transparent workflows will stand out for reliability and long-term consistency.

Privacy-safe AI is not only about avoiding fines. It also helps build stronger relationships with viewers who value control over how their data and likeness appear in digital spaces.

Frequently Asked Questions about Privacy-Safe AI Content

How does the EU AI Act apply to individual content creators or small agencies using AI tools?

The EU AI Act classifies AI systems by risk level, and each level carries different duties. General-purpose tools may only need basic transparency, while systems that personalize content or influence user behavior can face more detailed disclosure and documentation requirements. Creators should map each AI use case against the Act’s definitions, especially where biometric data, profiling, or automated decision-making appears. High-risk cases need stronger risk management and records, while limited-risk cases focus more on clear labeling and user information.

What are the key steps for agencies to implement an AI governance program focused on privacy?

Agencies can start by inventorying all AI tools and mapping how each one touches personal data or likeness. From there, teams can create policies that define acceptable use, data flows, retention periods, and approval steps for new tools. Training for staff on privacy basics and AI-specific risks keeps daily decisions aligned with policy. Ongoing actions, such as vendor reviews, regulatory monitoring, and periodic audits, help the agency show that it manages AI responsibly over time.

Can synthetic data truly replace real data for AI content generation without compromising quality or authenticity?

Synthetic data can often stand in for real data during development, testing, and experimentation, especially where privacy risk is high. Modern generators can mirror the patterns and structure of real datasets closely enough to train or validate many creative workflows. Real likeness or brand assets usually remain necessary for final tuning of models that must match a specific face, voice, or visual style. A blended strategy works best, with synthetic data used for most testing and real data reserved for carefully controlled training and production.

How can creators ensure their digital likeness, cloned by AI, remains private and is not misused?

Creators gain the most protection by choosing platforms that commit to model isolation and explicit limits on data reuse. Terms of service and privacy policies should state that likeness data will not be used to train general models or support other users. Strong access controls, logging, and deletion options add further safeguards. Regular reviews of provider policies, along with copies of consent and license agreements, give creators documentation to point to if a dispute about likeness use ever arises.

What specific labeling requirements do creators need to follow for AI-generated content across different platforms?

Labeling rules differ by jurisdiction and platform, so creators often need a common baseline plus regional adjustments. The EU AI Act calls for machine-readable labels for AI-generated content, especially for synthetic audio, deepfake-style video, and AI-written text. China requires more technical watermarking, such as audio codes and encrypted metadata that survive processing. Major social platforms are also rolling out their own disclosure tools. A practical approach combines visible notices for audiences with embedded metadata and watermarks that persist across uploads and shares.