Key Takeaways

- Ultra-realistic human faces in Stable Diffusion work best with photorealism-focused models like Realistic Vision XL, Juggernaut XL, and Flux.1 dev for lifelike skin, eyes, and anatomy.

- Detailed prompts with technical specs such as “detailed skin pores, subsurface scattering, 8k raw photo” plus strong negative prompts reduce uncanny valley issues.

- ControlNet (OpenPose, Face detection, IP-Adapter), LoRAs for skin textures, and HiRes Fix at 0.4-0.6 denoising keep faces consistent and high resolution.

- Post-processing with GFPGAN, CodeFormer, and ESRGAN upsampling fixes artifacts and adds polish suitable for paid creator content.

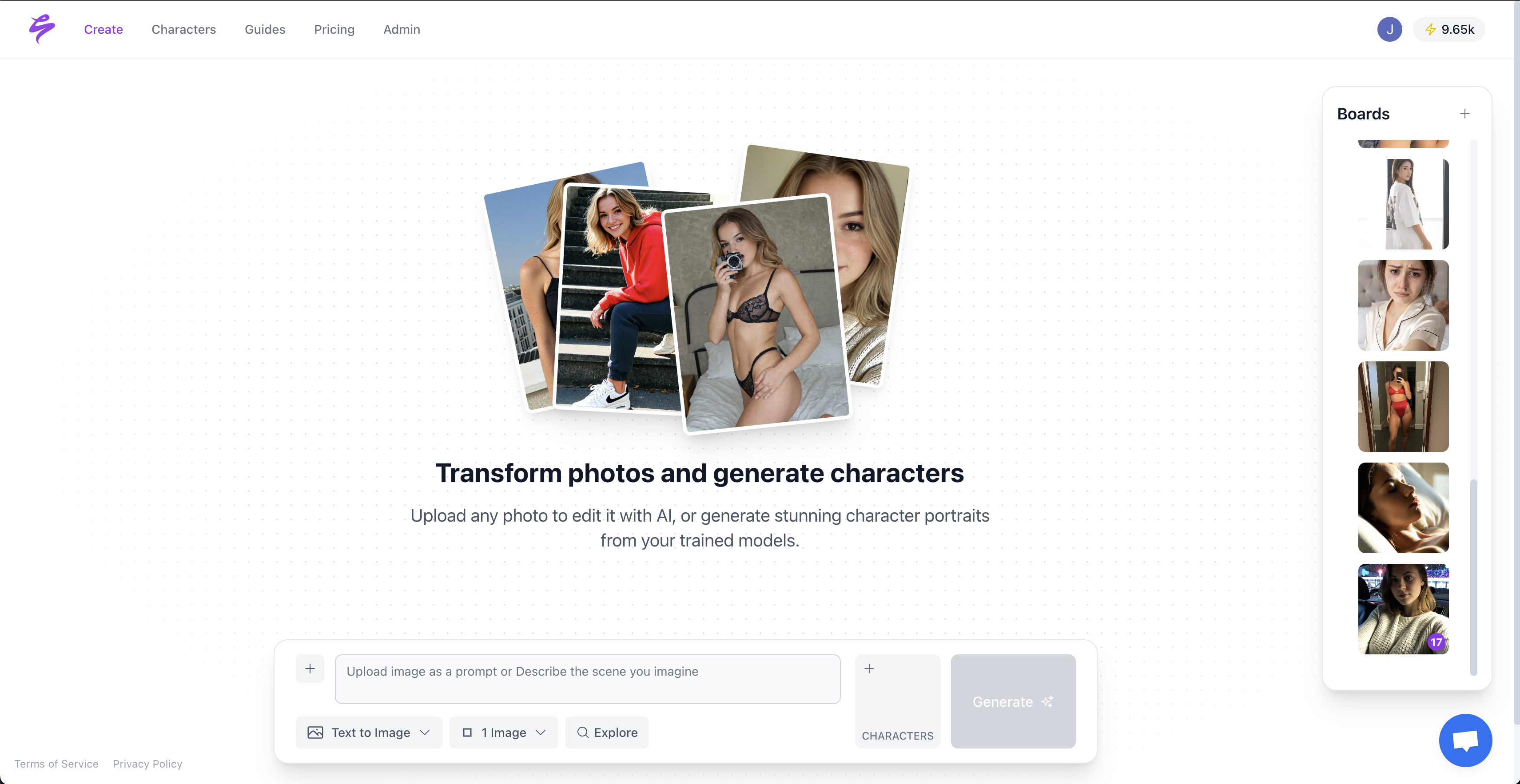

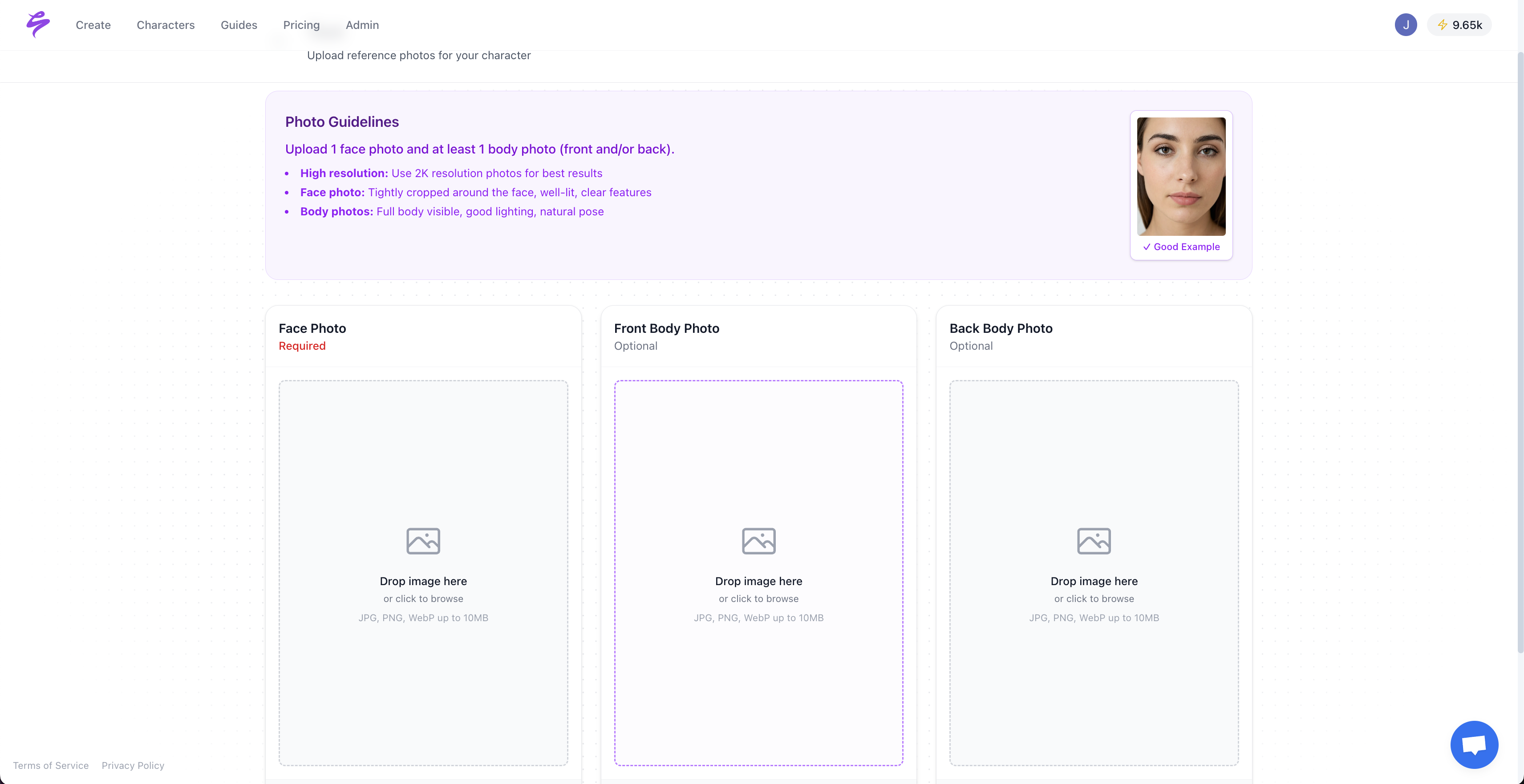

- Skip manual workflows with Sozee.ai, which delivers likeness-perfect, repeatable faces from just three photos.

Prerequisites for Ultra-Realistic Stable Diffusion Faces

Set up your tools before you chase ultra-realistic faces. Install AUTOMATIC1111 WebUI as your main interface, learn basic prompt writing, and create a Civitai account for downloading specialized models. This guide assumes you already understand Stable Diffusion basics and care about how consistent, high-quality faces affect creator revenue.

Creators who want fast results without any setup can get started with Sozee.ai now and skip configuration entirely.

Step-by-Step Workflow for Ultra-Realistic Human Faces

Hyper-realistic faces in Stable Diffusion come from a clear workflow that combines strong models, precise prompts, control tools, high-resolution passes, and smart post-processing. Follow the steps below and refine each stage for your niche and audience.

The five core techniques are choosing photorealistic models like Realistic Vision XL, writing detailed prompts with technical parameters, using ControlNet for structure and consistency, applying HiRes Fix for resolution, and adding LoRAs that refine skin and lighting.

Step 1: Pick 2026’s Top Models for Realistic Human Faces

Realistic Vision is the top Stable Diffusion model for producing lifelike human images, excelling in realistic facial features, eyes, and clothing, which makes many outputs look like real photos. This model focuses on photorealistic humans and usually beats general-purpose checkpoints.

Juggernaut XL offers another strong option for photorealistic faces. It shines with detailed skin, natural wrinkles, and pore-level detail that sells older or weathered characters. Stable Diffusion 3.5 Large is a leading open-source option, with full model weights, deep customization, and a high Elo score of 1,198.

For cutting-edge results, Flux.1 dev by Black Forest Labs delivers highly realistic images, legible text generation, and strong prompt adherence. Newer FLUX.2 variants improve consistency across many images, which matters when you want the same face across a full content series.

Step 2: Write Hyper-Realistic Stable Diffusion Face Prompts

Strong prompts for ultra-realistic faces use specific, concrete details instead of vague phrases. Simplistic prompts like “a woman on street” produce poor results, so describe the skin, lighting, lens, and framing you want.

Use copy-paste portrait prompts such as: “ultra realistic portrait, detailed skin pores, subsurface scattering, natural lighting, 8k raw photo, professional photography, sharp focus, depth of field.” Pair them with negative prompts like “cartoon, anime, painting, sketch, blurry, low quality, plastic skin, artificial lighting.”

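Dial in technical settings for stability and detail. Use 30-50 steps, a CFG scale between 7 and 12, and the DPM++ 2M Karras sampler. In Stable Diffusion XL, describe what you want in the positive prompt instead of writing negations into it (for example, “clean-shaven” rather than “no beard”), and keep unwanted traits in the negative prompt field. Affirmative phrasing reportedly raised success rates from 0% to 100% in testing.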
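The prompt and settings above can be collected into a single request body. The sketch below assumes you run AUTOMATIC1111 with its `--api` flag and send the result to the `/sdapi/v1/txt2img` endpoint. The function name, the range checks, and the 832x1216 portrait size are illustrative assumptions, not part of the original workflow. Newer web UI releases may expect the scheduler as a separate field, so treat the sampler string as an assumption too.

```python
import json

# Prompt text copied from the example in this guide.
POSITIVE = (
    "ultra realistic portrait, detailed skin pores, subsurface scattering, "
    "natural lighting, 8k raw photo, professional photography, sharp focus, "
    "depth of field"
)
NEGATIVE = (
    "cartoon, anime, painting, sketch, blurry, low quality, plastic skin, "
    "artificial lighting"
)


def build_txt2img_payload(prompt, negative_prompt, steps=30, cfg_scale=7.0,
                          sampler_name="DPM++ 2M Karras", seed=-1,
                          width=832, height=1216):
    """Return a JSON-serializable body for POST /sdapi/v1/txt2img.

    The range checks mirror this guide's suggestions (30-50 steps, CFG 7-12);
    they are not limits enforced by the web UI itself.
    """
    if not 30 <= steps <= 50:
        raise ValueError("steps outside the 30-50 range suggested here")
    if not 7 <= cfg_scale <= 12:
        raise ValueError("cfg_scale outside the 7-12 range suggested here")
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": steps,
        "cfg_scale": cfg_scale,
        "sampler_name": sampler_name,
        "seed": seed,        # -1 asks the web UI to pick a random seed
        "width": width,      # assumed SDXL-style portrait size
        "height": height,
    }


payload = build_txt2img_payload(POSITIVE, NEGATIVE, steps=40, cfg_scale=8.0)
print(json.dumps(payload, indent=2))
```

Posting this body with any HTTP client should return JSON whose `images` list holds base64-encoded results. Keeping the builder separate from the network call makes it easy to log exactly which settings produced each image.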

Step 3: Use ControlNet, LoRAs, and HiRes Fix to Clean Up Faces

ControlNet locks in facial structure and keeps characters consistent. Key ControlNet models for faces include OpenPose for body and head position, Face detection for feature alignment, and IP-Adapter for matching a reference image. ControlNet in Stable Diffusion provides precise control over perspective, layout, and depth, improving face realism by ensuring designs stay true to intent.
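When you drive the web UI through its API, ControlNet units are typically attached under `alwayson_scripts`. The sketch below is a minimal illustration of that shape, assuming the sd-webui-controlnet extension is installed. The field names follow the extension's API as commonly documented (older versions used `input_image` instead of `image`), the model name is a placeholder you would replace with one installed on your machine, and the default weight of 0.7 is taken from the 0.6 to 0.8 range suggested in this guide's FAQ.

```python
import base64


def encode_image_bytes(raw):
    """Base64-encode raw image bytes for use in a JSON request body."""
    return base64.b64encode(raw).decode("ascii")


def controlnet_unit(module, model, image_b64, weight=0.7):
    """Describe one ControlNet unit (field names are assumptions; see lead-in)."""
    if not 0.0 <= weight <= 2.0:
        raise ValueError("weight should stay between 0 and 2")
    return {
        "enabled": True,
        "module": module,    # preprocessor, e.g. an OpenPose variant
        "model": model,      # placeholder: use a model name you have installed
        "image": image_b64,
        "weight": weight,
    }


def attach_controlnet(payload, units):
    """Return a copy of a txt2img payload with ControlNet units attached."""
    merged = dict(payload)
    scripts = dict(merged.get("alwayson_scripts", {}))
    scripts["controlnet"] = {"args": list(units)}
    merged["alwayson_scripts"] = scripts
    return merged


# Usage with stand-in bytes; in practice read your reference image from disk.
pose_unit = controlnet_unit("openpose_full", "YOUR_OPENPOSE_MODEL",
                            encode_image_bytes(b"abc"))
request_body = attach_controlnet({"prompt": "ultra realistic portrait"},
                                 [pose_unit])
```

Because `attach_controlnet` copies the payload instead of mutating it, you can reuse one base prompt with several different control setups and compare the results.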

LoRA models trained on skin and lighting push realism further. Popular choices include Skin Detail LoRA, Pore Detail LoRA, and Natural Lighting LoRA. Stable Diffusion XL base models can be fine-tuned with LoRAs using as little as five images for specific subjects like faces, enhancing likeness consistency.
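In AUTOMATIC1111, a LoRA is activated by adding a `<lora:filename:weight>` tag to the prompt. The helper below is a small sketch of that pattern. The LoRA file names and weights are hypothetical stand-ins, since the exact names depend on what you download from Civitai.

```python
def with_loras(prompt, loras):
    """Append <lora:name:weight> tags to a prompt.

    `loras` maps a LoRA file name (without extension) to its weight.
    """
    tags = []
    for name, weight in loras.items():
        if not name or any(ch in name for ch in "<>:"):
            raise ValueError(f"invalid LoRA name: {name!r}")
        tags.append(f"<lora:{name}:{weight:g}>")
    if not tags:
        return prompt
    return ", ".join([prompt.strip().rstrip(",")] + tags)


# Hypothetical file names and weights, for illustration only.
tagged = with_loras(
    "ultra realistic portrait, detailed skin pores",
    {"skin_detail": 0.6, "natural_lighting": 0.4},
)
print(tagged)
```

Starting with modest weights and raising them gradually is a reasonable way to avoid over-baked skin texture, though the best values vary by LoRA and base model.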

HiRes Fix settings for sharp faces follow a simple pattern. Turn on HiRes Fix, choose R-ESRGAN 4x+ as the upscaler, set denoising strength between 0.4 and 0.6, and upscale by 1.5x to 2x. This pass adds fine detail and removes the blocky look from low-resolution generations.
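As a rough sketch, the HiRes Fix settings above map onto a few fields of the web UI's txt2img request, and the final resolution is simply the base size multiplied by the upscale factor. The function name and the range checks below are assumptions added for illustration. The checks mirror this guide's recommendations and are not limits of the web UI.

```python
def hires_fix_fields(base_width, base_height, scale=1.5,
                     denoising_strength=0.5, upscaler="R-ESRGAN 4x+"):
    """Return (request fields, approximate final size) for a HiRes Fix pass."""
    if not 0.4 <= denoising_strength <= 0.6:
        raise ValueError("denoising_strength outside the 0.4-0.6 range")
    if not 1.5 <= scale <= 2.0:
        raise ValueError("scale outside the 1.5x-2x range")
    fields = {
        "width": base_width,
        "height": base_height,
        "enable_hr": True,
        "hr_scale": scale,
        "hr_upscaler": upscaler,
        "denoising_strength": denoising_strength,
    }
    final_size = (int(base_width * scale), int(base_height * scale))
    return fields, final_size


fields, size = hires_fix_fields(832, 1216, scale=1.5)
print(size)  # (1248, 1824)
```

These fields can be merged into an existing txt2img request body. Lower denoising values stay closer to the first-pass face, while higher values add more new detail at the risk of changing features.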

Step 4: Post-Process Faces for Professional Polish

Post-processing removes common Stable Diffusion face flaws and pushes images to a professional level. Apply face restoration tools such as CodeFormer in the AUTOMATIC1111 GUI, or install an updated VAE, to fix eyes and improve realism.

GFPGAN focuses on facial restoration and often fixes eye asymmetry, uneven skin, and subtle anatomical mistakes. Upsampling with Real-ESRGAN or other ESRGAN models then adds crisp detail and prepares images for print, thumbnails, or paywalled galleries.
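The restoration and upscaling pass can also be scripted. The sketch below builds a request body for the web UI's `/sdapi/v1/extra-single-image` endpoint, assuming the UI runs with `--api`. The function name, the default visibility of 1.0, and the 2x resize are illustrative assumptions rather than recommended values.

```python
import base64


def build_extras_payload(image_bytes, restorer="codeformer", visibility=1.0,
                         upscale=2.0, upscaler="R-ESRGAN 4x+"):
    """Return a body for POST /sdapi/v1/extra-single-image.

    Exactly one face restorer is enabled; the other is set to 0 visibility.
    """
    if restorer not in ("gfpgan", "codeformer"):
        raise ValueError("restorer must be 'gfpgan' or 'codeformer'")
    if not 0.0 <= visibility <= 1.0:
        raise ValueError("visibility must be between 0 and 1")
    return {
        "image": base64.b64encode(image_bytes).decode("ascii"),
        "resize_mode": 0,             # 0 = scale by a factor
        "upscaling_resize": upscale,
        "upscaler_1": upscaler,
        "gfpgan_visibility": visibility if restorer == "gfpgan" else 0.0,
        "codeformer_visibility": visibility if restorer == "codeformer" else 0.0,
    }


# Stand-in bytes; in practice pass the contents of a generated PNG.
extras_body = build_extras_payload(b"abc", restorer="gfpgan", visibility=0.8)
```

Running both restorers at partial visibility is also possible in the UI, so treat the one-restorer rule here as a simplification for comparing GFPGAN and CodeFormer side by side.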

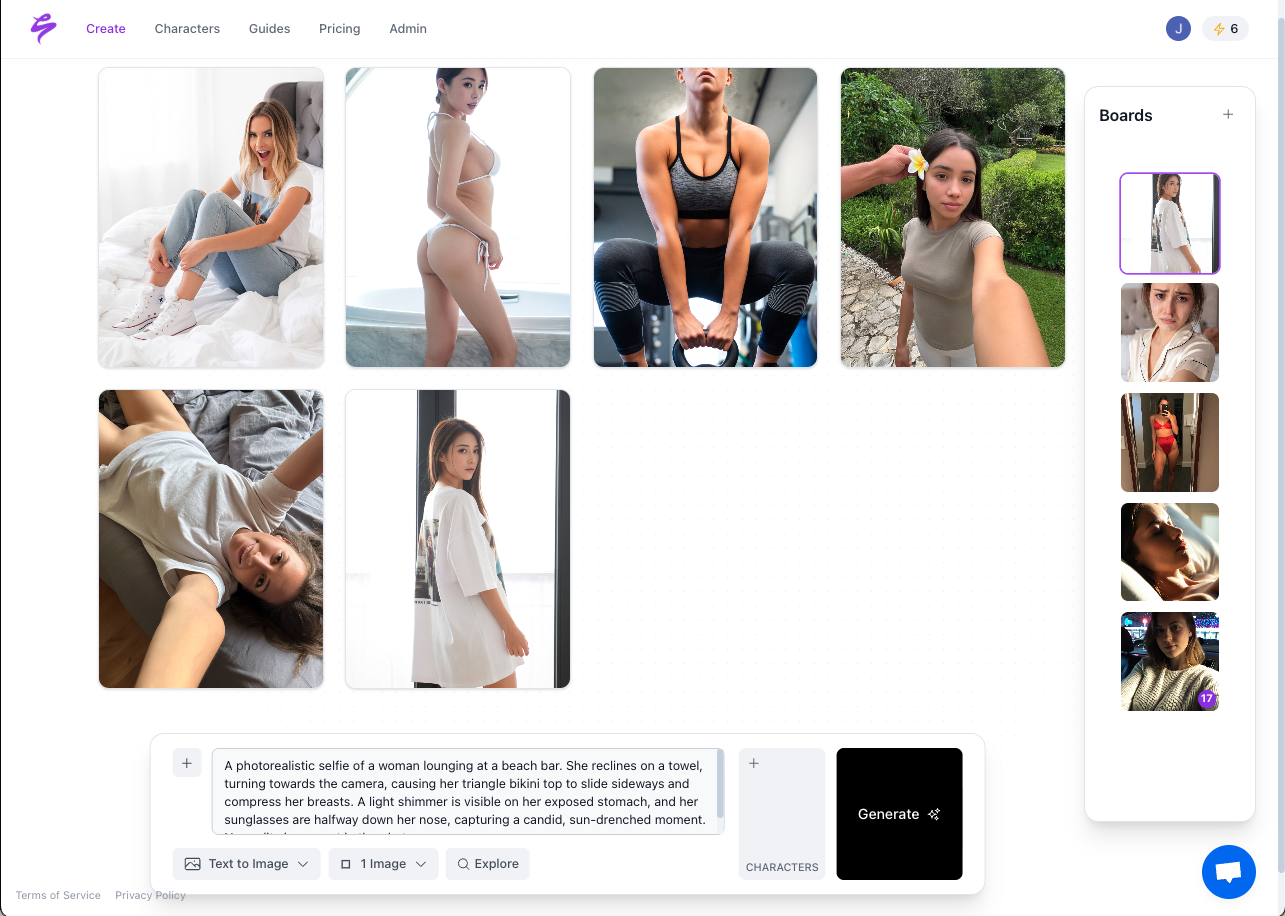

Step 5: Keep Faces Consistent Across Content with Sozee

Face swapping and reference workflows still struggle with perfect consistency in classic Stable Diffusion pipelines. Even advanced setups demand manual tweaking and often create small differences that loyal fans notice quickly.

Sozee.ai removes this bottleneck by rebuilding your exact likeness from three photos. You avoid training time, complex settings, and long iteration cycles. Upload your photos once, then generate unlimited content that keeps your face identical across every output, which matches what serious creator monetization requires.

Best Practices and Fixes for Realistic Stable Diffusion Faces

Brand your prompts with a consistent style and lighting recipe so your content library feels unified. Test different model and prompt combinations side by side to discover which setups drive the strongest engagement and revenue.
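One way to run those side-by-side tests is to generate every model and prompt combination with the same seed, so differences come from the setup and not from random noise. The sketch below builds such a job list for the web UI's txt2img API, using `override_settings` to switch checkpoints per request. The checkpoint file names, the prompts, and the seed value are hypothetical.

```python
from itertools import product


def comparison_jobs(checkpoints, prompts, seed=12345, **shared):
    """Build one txt2img payload per (checkpoint, prompt) pair, same seed."""
    jobs = []
    for checkpoint, prompt in product(checkpoints, prompts):
        job = dict(shared)
        job.update({
            "prompt": prompt,
            "seed": seed,  # fixed seed keeps comparisons fair
            "override_settings": {"sd_model_checkpoint": checkpoint},
        })
        jobs.append(job)
    return jobs


# Hypothetical checkpoint file names and prompts.
jobs = comparison_jobs(
    ["realisticVisionXL.safetensors", "juggernautXL.safetensors"],
    ["studio portrait, soft window light", "outdoor portrait, golden hour"],
    steps=30,
    cfg_scale=7,
)
print(len(jobs))  # 4
```

Saving each output alongside its job dictionary gives you a simple record of which combination produced which image when you later compare engagement.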

Handle common issues with targeted fixes. Uncanny valley faces often improve with skin-focused LoRAs and better lighting prompts. Poor eyes respond to ControlNet depth maps and eye-specific negative prompts. Inconsistent features across images usually need reference images or face-swapping workflows.

Sozee.ai handles these problems automatically through its likeness reconstruction system. Start creating flawless faces today without manual debugging or advanced technical skills.

Success Metrics for Hyper-Realistic Face Generation

Professional-quality face generations should pass as real photos during normal scrolling and viewing. Track fan engagement, conversion rates on paywalled content, and time saved compared with traditional shoots.

Many creators report producing content ten times faster and doubling pay-per-view conversions when they use consistent, realistic faces. Sozee.ai supports this by generating private likeness models that keep your identity intact while you scale output as much as you want.

Advanced Scaling: Blend Stable Diffusion with Sozee.ai

Hybrid workflows give you creative freedom and reliable production. Use Stable Diffusion for experimentation and style discovery, then move winning looks into Sozee.ai for consistent, monetizable runs.

Sozee’s workflow stays simple. Upload three photos, generate unlimited sets, and export directly to your main platforms. Unlike HiggsField or Krea, Sozee focuses on creator monetization with minimal inputs and highly consistent outputs.

Advanced creators rely on Sozee’s prompt libraries, style-locking tools, and SFW-to-NSFW pipeline support to build long-term content businesses without the usual Stable Diffusion maintenance burden.

Conclusion: Master Ultra-Realistic Faces and Scale with Sozee

Manual Stable Diffusion workflows can reach impressive realism but demand time, skill, and constant troubleshooting. Creators who want to grow faster can sign up at Sozee.ai and get instant hyper-realism that removes the technical grind while keeping results consistent and professional.

FAQ: Stable Diffusion Realistic Faces Answered

What is the best Stable Diffusion model for realistic humans in 2026?

Realistic Vision XL and Juggernaut XL currently lead for photorealistic human generation, with Realistic Vision excelling in lifelike facial features and eye detail. Stable Diffusion 3.5 Large offers the highest overall fidelity, while FLUX.2 provides strong consistency across multiple images. For creators who need perfect likeness consistency without complex setups, Sozee.ai uses reconstruction technology instead of standard model training and delivers more reliable identity matches.

What are the most effective Stable Diffusion prompts for ultra-realistic faces?

Effective prompts mix technical and natural language. Use phrases like “ultra realistic portrait, detailed skin pores, subsurface scattering, natural lighting, 8k raw photo, professional photography, sharp focus” and pair them with negative prompts such as “cartoon, anime, painting, plastic skin, artificial lighting.” Within the positive prompt, describe what you want rather than writing negations, since this greatly improves SDXL success rates. Use 30-50 steps, a CFG scale of 7-12, and the DPM++ 2M Karras sampler.

How do I fix inconsistent faces in Stable Diffusion?

Fix inconsistent faces by combining ControlNet with face detection models, IP-Adapter reference matching, and stable seed values. LoRAs trained on specific faces or features help preserve identity, while face-swapping workflows can carry that identity across new poses and scenes. These methods still require time and tuning, and small variations often remain visible, which can affect monetization for loyal audiences.

Can I generate realistic faces in Stable Diffusion for free?

Yes, you can use free models like Realistic Vision and Juggernaut XL from platforms such as Civitai. However, reaching consistent, monetizable quality usually demands many hours of testing, technical knowledge, and sometimes paid tools for post-processing. The “free” route often becomes costly once you factor in time and lost revenue from inconsistent results.

How does ControlNet improve face generation in Stable Diffusion?

ControlNet improves face generation by guiding structure through depth maps, pose detection, and reference matching. Key models include OpenPose for pose, Face detection for feature placement, and IP-Adapter for likeness preservation. Aim for moderate control strength between 0.6 and 0.8 and use the correct preprocessor for each control type. ControlNet raises anatomical accuracy and makes it easier to keep characters consistent across a full set of images.